C# Web Crawler Code: A Simple C# Scraping Tool

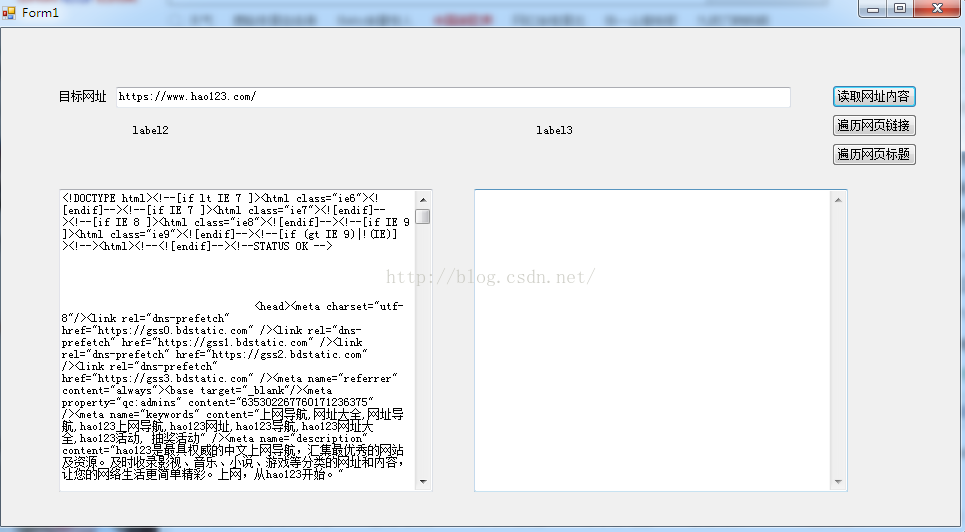

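A colleague on our company's editorial team needed to scrape web page content, so she asked me to help build a simple scraping tool.

Fetching a web page's content is probably nothing difficult for most of you, but I made a few small changes here. The code is below for your reference.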

```csharp
using System;
using System.IO;
using System.Net;
using System.Text;

// Fetches the HTML of a page. The first attempt decodes the body as UTF-8; if anything
// fails, retry once, read the body even when the server answers with an error status
// (some sites answer crawlers with 403/500 but still send content), and decode as GB2312.
// On .NET Core / .NET 5+, GB2312 needs Encoding.RegisterProvider(CodePagesEncodingProvider.Instance).
private string GetHttpWebRequest(string url)
{
    try
    {
        return Download(url, Encoding.UTF8, false);
    }
    catch (Exception)
    {
        return Download(url, Encoding.GetEncoding("gb2312"), true);
    }
}

// Builds a browser-like request. Headers must be set before GetResponse() sends the request.
// (WebRequest is marked obsolete from .NET 6; HttpClient is the modern replacement.)
private static HttpWebRequest CreateRequest(string url)
{
    HttpWebRequest myReq = (HttpWebRequest)WebRequest.Create(new Uri(url));
    myReq.UserAgent = "Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.2; .NET CLR 1.0.3705)";
    myReq.Accept = "*/*";
    myReq.KeepAlive = true;
    myReq.Headers.Add("Accept-Language", "zh-cn,en-us;q=0.5");
    return myReq;
}

private static string Download(string url, Encoding encoding, bool readErrorBody)
{
    HttpWebRequest myReq = CreateRequest(url);
    HttpWebResponse result;
    try
    {
        result = (HttpWebResponse)myReq.GetResponse();
    }
    catch (WebException ex) when (readErrorBody && ex.Response != null)
    {
        // Error status: the response body is still available on ex.Response.
        result = (HttpWebResponse)ex.Response;
    }

    // Dispose the response and reader (which closes the stream) even if reading throws.
    using (result)
    using (StreamReader readerOfStream = new StreamReader(result.GetResponseStream(), encoding))
    {
        return readerOfStream.ReadToEnd();
    }
}
```

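This method fetches a page's source code by URL, with a few small changes. Many pages use different character encodings, and some sites even defend against scraping. So the method first reads the response as UTF-8. If that fails, it retries, reads the body even when the server answers with an error status, and decodes it as GB2312. With these changes it can still fetch such pages.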
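Rather than trying UTF-8 and then GB2312, the charset can usually be read from the response itself. Below is a minimal sketch of that idea. `CharsetSniffer` and `DetectCharset` are hypothetical names, not part of the tool above. The method checks the `charset=` parameter of the `Content-Type` header first, then a `<meta charset>` or `<meta http-equiv>` declaration near the top of the HTML, and otherwise assumes UTF-8.

```csharp
using System;
using System.Text.RegularExpressions;

// Hypothetical helper (not part of the original tool): picks a charset name for a response.
public static class CharsetSniffer
{
    // contentType: value of the Content-Type response header (may be null).
    // htmlHead:    the start of the body decoded as ASCII/Latin-1, enough to contain <meta> tags.
    // Returns a lower-case charset name such as "utf-8", "gbk" or "gb2312".
    public static string DetectCharset(string contentType, string htmlHead)
    {
        // 1. HTTP header, e.g. "text/html; charset=GBK"
        Match m = Regex.Match(contentType ?? "", @"charset\s*=\s*[""']?([\w-]+)", RegexOptions.IgnoreCase);
        if (m.Success) return m.Groups[1].Value.ToLowerInvariant();

        // 2. <meta charset="gb2312"> or <meta http-equiv="Content-Type" content="text/html; charset=gb2312">
        m = Regex.Match(htmlHead ?? "", @"<meta[^>]+charset\s*=\s*[""']?([\w-]+)", RegexOptions.IgnoreCase);
        if (m.Success) return m.Groups[1].Value.ToLowerInvariant();

        // 3. Nothing declared: assume UTF-8.
        return "utf-8";
    }
}
```

With an `HttpWebResponse`, `contentType` would be `result.ContentType`. For `htmlHead`, one option is to decode the first few kilobytes of the raw bytes with `Encoding.ASCII`, then decode the full body with `Encoding.GetEncoding(charset)`. On .NET Core and .NET 5+, names such as `gb2312` or `gbk` only resolve after calling `Encoding.RegisterProvider(CodePagesEncodingProvider.Instance)`.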

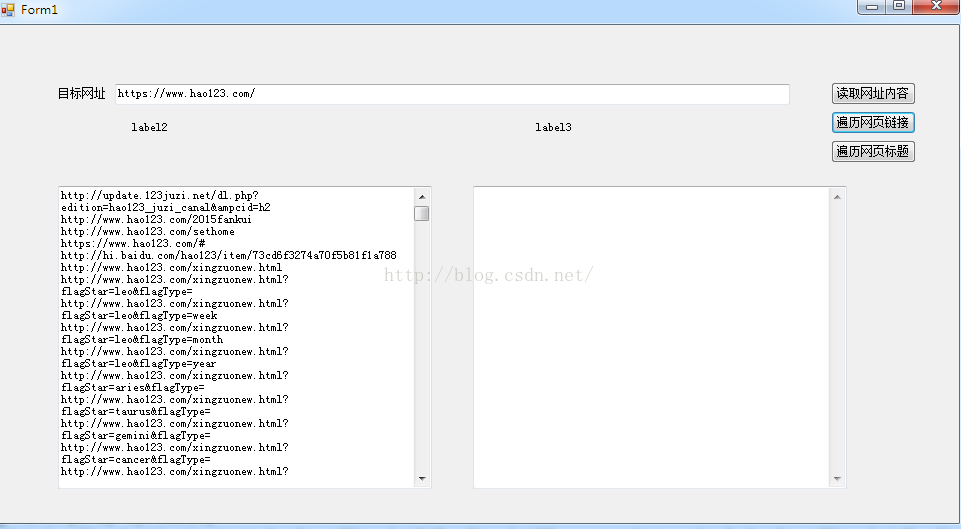

The following method extracts all the link URLs from a page:

```csharp
using System;
using System.Collections.Generic;
using System.Text.RegularExpressions;

/// <summary>
/// Extracts the URLs from HTML code
/// </summary>
/// <param name="htmlCode">HTML source of the page</param>
/// <param name="url">URL of the page, used to turn relative links into absolute ones</param>
/// <returns>The distinct absolute URLs, in order of first appearance</returns>
private static List<string> GetHyperLinks(string htmlCode, string url)
{
    List<string> Weburllistzx = new List<string>(); // links found on this page

    // wangzhanyuming: site root, e.g. "http://www.example.com/"
    string wangzhanyuming = Regex.Match(url, @"http(s)?://([\w-]+\.)+[\w-]+/?").Value;
    if (!wangzhanyuming.EndsWith("/")) wangzhanyuming += "/";

    // wangzhanxiangduilujin: directory of the current page, e.g. "http://www.example.com/news/"
    string wangzhanxiangduilujin = url.Substring(0, url.LastIndexOf('/') + 1);
    if (wangzhanxiangduilujin.Length < wangzhanyuming.Length) wangzhanxiangduilujin = wangzhanyuming;

    // Make root-relative ("/x") and "./x" links absolute before matching.
    string html = htmlCode
        .Replace("href=\"/", "href=\"" + wangzhanyuming)
        .Replace("href='/", "href='" + wangzhanyuming)
        .Replace("href=/", "href=" + wangzhanyuming)
        .Replace("href=\"./", "href=\"" + wangzhanxiangduilujin);

    // Every opening <a ... href=...> tag.
    MatchCollection mc = Regex.Matches(html, @"<[aA][^>]* href=[^>]*>", RegexOptions.Singleline);
    foreach (Match m in mc)
    {
        string tag = m.Value;
        if (!Regex.IsMatch(tag, @"[a-zA-Z]+://"))
        {
            // Still relative: skip javascript: links, prefix the rest with the page directory.
            if (tag.IndexOf("javascript", StringComparison.OrdinalIgnoreCase) >= 0) continue;
            tag = tag.Replace("href=\"", "href=\"" + wangzhanxiangduilujin)
                     .Replace("href='", "href='" + wangzhanxiangduilujin);
        }

        // [a-zA-Z], not [a-zA-z]: the A-z range would also match [ \ ] ^ _ `
        foreach (Match m1 in Regex.Matches(tag, @"[a-zA-Z]+://[^\s]*"))
        {
            // [^\s]* also grabs closing quotes and '>', so strip them.
            string linkurlstr = m1.Value.Replace("\"", "").Replace("'", "").Replace(">", "").Replace(";", "");
            if (!Weburllistzx.Contains(linkurlstr)) Weburllistzx.Add(linkurlstr);
        }
    }
    return Weburllistzx;
}
```
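The method above builds absolute URLs with string replacement on `href=` attributes. `System.Uri` can do that resolution itself, including `../` and `./` paths. Below is a minimal sketch of that alternative. `LinkResolver` and `ExtractLinks` are hypothetical names, and the regex is simplified to quoted `href` values only.

```csharp
using System;
using System.Collections.Generic;
using System.Text.RegularExpressions;

// Hypothetical helper (not part of the original tool): resolves <a href> values with System.Uri.
public static class LinkResolver
{
    public static List<string> ExtractLinks(string html, string pageUrl)
    {
        Uri baseUri = new Uri(pageUrl);
        List<string> links = new List<string>();
        // Only quoted values: href="..." or href='...'
        MatchCollection matches = Regex.Matches(html,
            @"<a\s[^>]*?href\s*=\s*[""']([^""']+)[""']", RegexOptions.IgnoreCase);
        foreach (Match m in matches)
        {
            string href = m.Groups[1].Value.Trim();
            // Skip in-page anchors and script/mail pseudo-links.
            if (href.StartsWith("#")
                || href.StartsWith("javascript:", StringComparison.OrdinalIgnoreCase)
                || href.StartsWith("mailto:", StringComparison.OrdinalIgnoreCase))
                continue;
            // Uri.TryCreate(base, relative, ...) handles "/x", "./x", "../x" and absolute URLs alike.
            if (Uri.TryCreate(baseUri, href, out Uri absolute)
                && (absolute.Scheme == Uri.UriSchemeHttp || absolute.Scheme == Uri.UriSchemeHttps))
            {
                string link = absolute.AbsoluteUri;
                if (!links.Contains(link)) links.Add(link); // keep first-seen order, no duplicates
            }
        }
        return links;
    }
}
```

For example, on the page `http://example.com/news/list.html`, `href="../b.html"` resolves to `http://example.com/b.html`. String replacement alone would not handle that case.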

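The technique here is really just simple regular-expression matching. Next up are the methods for saving the links to an XML file and getting the page title: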

```csharp
using System;
using System.Collections.Generic;
using System.Text;
using System.Xml;

/// <summary>
/// Writes the URLs to an XML file
/// </summary>
/// <param name="strURL">URL of the page the links were extracted from</param>
/// <param name="alHyperLinks">The links to write</param>
private static void WriteToXml(string strURL, List<string> alHyperLinks)
{
    // The output path is hard-coded; change it to suit your machine.
    using (XmlTextWriter writer = new XmlTextWriter(@"D:\HyperLinks.xml", Encoding.UTF8))
    {
        writer.Formatting = Formatting.Indented;
        writer.WriteStartDocument(false);
        writer.WriteDocType("HyperLinks", null, "urls.dtd", null);
        writer.WriteComment("Hyperlinks extracted from " + strURL);
        writer.WriteStartElement("HyperLinks");
        writer.WriteStartElement("HyperLinks", null);
        writer.WriteAttributeString("DateTime", DateTime.Now.ToString());
        foreach (string str in alHyperLinks)
        {
            // Element name is the domain suffix ("com", "net", ... or "other"); the text is the URL.
            writer.WriteElementString(GetDomain(str), null, str);
        }
        writer.WriteEndElement();
        writer.WriteEndElement();
        writer.Flush();
    }
}
```
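The `WriteToXml` method above streams the file with `XmlTextWriter`. Below is a sketch of the same structure built in memory with LINQ to XML (`System.Xml.Linq`), which can be inspected or tested before saving. It produces a `HyperLinks` root, an inner `HyperLinks` element with a `DateTime` attribute, and one element per link. `XmlExport` and `BuildHyperLinksXml` are hypothetical names. The `domainOf` parameter stands in for the `GetDomain` helper in this post, so the snippet compiles on its own.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Xml.Linq;

// Hypothetical helper (not part of the original tool): LINQ to XML version of WriteToXml.
public static class XmlExport
{
    // Builds <HyperLinks><HyperLinks DateTime="..."><com>url</com>...</HyperLinks></HyperLinks>.
    // domainOf maps a URL to an element name, e.g. GetDomain from this post.
    public static XDocument BuildHyperLinksXml(string sourceUrl, IEnumerable<string> links,
                                               Func<string, string> domainOf)
    {
        return new XDocument(
            new XComment("Hyperlinks extracted from " + sourceUrl),
            new XElement("HyperLinks",
                new XElement("HyperLinks",
                    new XAttribute("DateTime", DateTime.Now.ToString()),
                    links.Select(link => new XElement(domainOf(link), link)))));
    }
}
```

Saving would then be `XmlExport.BuildHyperLinksXml(url, links, GetDomain).Save(@"D:\HyperLinks.xml");`. This version omits the DOCTYPE line that points at `urls.dtd`.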

```csharp
using System.Text.RegularExpressions;

/// <summary>
/// Gets the domain suffix of a URL
/// </summary>
/// <param name="strURL">The URL</param>
/// <returns>"com", "net", "cn", "org", "gov", or "other" if none of them matches</returns>
private static string GetDomain(string strURL)
{
    // Look for a known suffix followed by a slash, e.g. ".com/".
    Match m = Regex.Match(strURL, @"(\.com/|\.net/|\.cn/|\.org/|\.gov/)", RegexOptions.IgnoreCase);
    // Strip the dot and the trailing slash: ".com/" -> "com".
    string retVal = Regex.Replace(m.Value, @"\.|/$", "");
    return retVal == "" ? "other" : retVal;
}
```
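`GetDomain` only recognizes five suffixes, and only when a `/` follows them. So `http://www.example.com` (no trailing slash) and `https://example.io/` both come back as `other`. If the goal is simply the last label of the host name, `Uri.Host` gives it directly. Below is a sketch with the hypothetical names `DomainHelper` and `GetDomainSuffix`. Unlike the original, it returns any suffix (such as `io`), not just the five listed.

```csharp
using System;

// Hypothetical helper (not part of the original tool): domain suffix via System.Uri.
public static class DomainHelper
{
    // Returns the last label of the host name ("com", "cn", "io", ...), or "other" when the
    // string is not an absolute URL, the host is an IP address, or the host has no dot.
    public static string GetDomainSuffix(string url)
    {
        if (!Uri.TryCreate(url, UriKind.Absolute, out Uri uri)
            || uri.HostNameType != UriHostNameType.Dns
            || !uri.Host.Contains("."))
            return "other";
        string host = uri.Host;
        return host.Substring(host.LastIndexOf('.') + 1).ToLowerInvariant();
    }
}
```

IP hosts are mapped to `other` because a result such as `1` (from `192.168.1.1`) would not be a valid XML element name in `WriteToXml`.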

```csharp
using System;
using System.Text.RegularExpressions;

/// <summary>
/// Gets the page title
/// </summary>
/// <param name="html">HTML source of the page</param>
/// <returns>The title, or "" if none is found</returns>
private static string GetTitle(string html)
{
    string titleFilter = @"<title>[\s\S]*?</title>";
    string h1Filter = @"<h1.*?>.*?</h1>";
    string clearFilter = @"<.*?>"; // strips tags
    string title = "";

    Match match = Regex.Match(html, titleFilter, RegexOptions.IgnoreCase);
    if (match.Success)
    {
        title = Regex.Replace(match.Groups[0].Value, clearFilter, "").Trim();
    }

    // The article headline is usually in <h1>, which is cleaner than the <title> text.
    // Singleline lets ".*?" span <h1> elements that contain line breaks.
    match = Regex.Match(html, h1Filter, RegexOptions.IgnoreCase | RegexOptions.Singleline);
    if (match.Success)
    {
        string h1 = Regex.Replace(match.Groups[0].Value, clearFilter, "").Trim();
        if (!String.IsNullOrEmpty(h1) && title.StartsWith(h1, StringComparison.Ordinal))
        {
            title = h1;
        }
    }
    return title;
}
```
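Titles returned by `GetTitle` can still contain HTML entities (`&amp;`, `&quot;`, `&#39;` and so on) and stray whitespace from line breaks. Below is a small post-processing step, sketched under the hypothetical names `TitleHelper` and `CleanTitle`. It uses `System.Net.WebUtility.HtmlDecode` to decode entities and collapses runs of whitespace into single spaces.

```csharp
using System.Net;
using System.Text.RegularExpressions;

// Hypothetical helper (not part of the original tool): tidies a title extracted by regex.
public static class TitleHelper
{
    public static string CleanTitle(string rawTitle)
    {
        // "&amp;" -> "&", "&lt;" -> "<", and so on.
        string decoded = WebUtility.HtmlDecode(rawTitle ?? "");
        // Collapse newlines, tabs and repeated spaces, then trim the ends.
        return Regex.Replace(decoded, @"\s+", " ").Trim();
    }
}
```

It would be applied to the return value, e.g. `TitleHelper.CleanTitle(GetTitle(html))`.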

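Those are all the methods I used, and there is still plenty of room for improvement. If you spot any shortcomings, please point them out. Thanks!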

That's all for this article. I hope it helps with your studies, and thank you for your support.