loguru is a third-party Python library for logging. It is easy to use and fairly comprehensive.

GitHub - Delgan/loguru: Python logging made (stupidly) simple

loguru.logger — loguru documentation

pip install loguru

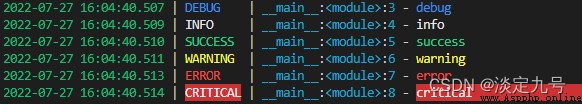

A simple test

from loguru import logger

logger.debug("debug")

logger.info("info")

logger.success("success")

logger.warning("warning")

logger.error("error")

logger.critical("critical")

Output results

The add() function registers sinks, which manage log messages contextualized with a record dict. A sink can take many forms: a simple function, a string path, a file-like object, a coroutine function, or a built-in handler.

Note: you can use the remove() function to delete a previously added handler.

Example :

import sys

from loguru import logger

logger.add("test.log", format="{time} {level} {message}", filter="", level="INFO", encoding="utf-8")

logger.debug("debug")

logger.info("info")

logger.success("success")

logger.warning("warning")

logger.error("error")

logger.critical("critical")

Result: the log is written to the test.log file.

2022-07-27T17:14:36.393072+0800 INFO info

2022-07-27T17:14:36.394068+0800 SUCCESS success

2022-07-27T17:14:36.395066+0800 WARNING warning

2022-07-27T17:14:36.396063+0800 ERROR error

2022-07-27T17:14:36.397060+0800 CRITICAL critical

Official documentation: loguru.logger (loguru documentation)

rotation: indicates when the current log file should be closed and a new one started. It can be a file size, a time of day, a time interval, and so on.

logger.add("test1.log", rotation="500 MB") # Create a new file once the log file exceeds 500 MB

logger.add("test2.log", rotation="12:00") # Create a new log file every day at noon

logger.add("test3.log", rotation="1 week") # Create a new log file once the current one is more than one week old

retention: indicates which old files should be deleted.

logger.add("test4.log", retention="10 days") # Delete log files older than ten days

compression: the compression or archive format the log file should be converted to when it is closed.

logger.add("test5.log", compression="zip") # Compress the log file with zip after it is closed

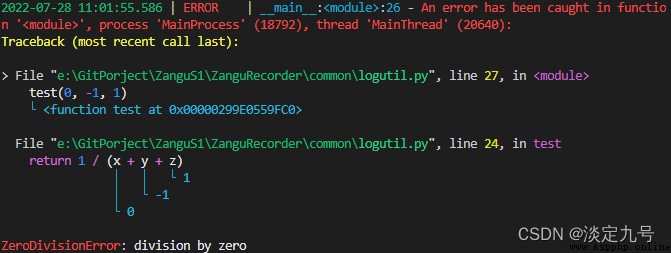

Use catch() as a decorator or a context manager to catch exceptions and make sure that any error is recorded in the log.

Decorator

from loguru import logger

@logger.catch

def test(x, y, z):

    return 1 / (x + y + z)

test(0, 1, -1)

Context manager

from loguru import logger

def test(x, y, z):

    return 1 / (x + y + z)

with logger.catch():

    test(0, 1, -1)

Output results

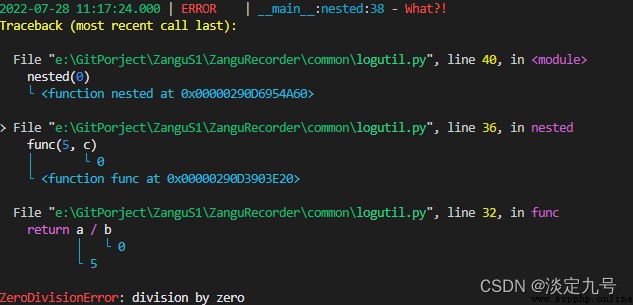

Recording the exceptions that occur in your code is very important for tracking down bugs. loguru can display the entire stack trace (including variable values) to help locate the cause of a bug.

logger.add("out.log", backtrace=True, diagnose=True) # Caution, may leak sensitive data in prod

def func(a, b):

    return a / b

def nested(c):

    try:

        func(5, c)

    except ZeroDivisionError:

        logger.exception("What?!")

nested(0)

Output results

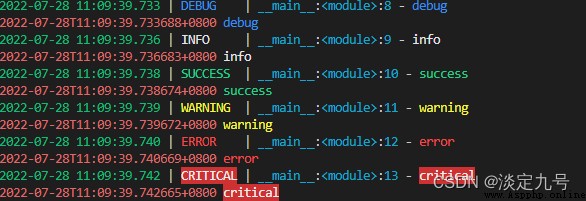

logger.add(sys.stdout, colorize=True, format="<red>{time}</red> <level>{message}</level>")

logger.debug("debug")

logger.info("info")

logger.success("success")

logger.warning("warning")

logger.error("error")

logger.critical("critical")

Output results

By default, all sinks added to the logger are thread-safe, but they are not multiprocess-safe. To make logging multiprocess-safe, or to log asynchronously, pass the enqueue parameter:

logger.add("test.log", enqueue=True)

To convert each message to a JSON string for easier transmission or parsing, use the serialize parameter:

logger.add("test.log", serialize=True, encoding="utf-8")

logger.debug("debug")

logger.info("info")

logger.success("success")

logger.warning("warning")

logger.error("error")

logger.critical("critical")

Output results

{"text": "2022-07-28 11:25:44.434 | DEBUG | __main__:<module>:43 - debug\n", "record": {"elapsed": {"repr": "0:00:00.127658", "seconds": 0.127658}, "exception": null, "extra": {}, "file": {"name": "logutil.py", "path": "e:\\GitPorject\\ZanguS1\\ZanguRecorder\\common\\logutil.py"}, "function": "<module>", "level": {"icon": "", "name": "DEBUG", "no": 10}, "line": 43, "message": "debug", "module": "logutil", "name": "__main__", "process": {"id": 15768, "name": "MainProcess"}, "thread": {"id": 10228, "name": "MainThread"}, "time": {"repr": "2022-07-28 11:25:44.434074+08:00", "timestamp": 1658978744.434074}}}

{"text": "2022-07-28 11:25:44.436 | INFO | __main__:<module>:44 - info\n", "record": {"elapsed": {"repr": "0:00:00.129653", "seconds": 0.129653}, "exception": null, "extra": {}, "file": {"name": "logutil.py", "path": "e:\\GitPorject\\ZanguS1\\ZanguRecorder\\common\\logutil.py"}, "function": "<module>", "level": {"icon": "️", "name": "INFO", "no": 20}, "line": 44, "message": "info", "module": "logutil", "name": "__main__", "process": {"id": 15768, "name": "MainProcess"}, "thread": {"id": 10228, "name": "MainThread"}, "time": {"repr": "2022-07-28 11:25:44.436069+08:00", "timestamp": 1658978744.436069}}}

{"text": "2022-07-28 11:25:44.438 | SUCCESS | __main__:<module>:45 - success\n", "record": {"elapsed": {"repr": "0:00:00.131648", "seconds": 0.131648}, "exception": null, "extra": {}, "file": {"name": "logutil.py", "path": "e:\\GitPorject\\ZanguS1\\ZanguRecorder\\common\\logutil.py"}, "function": "<module>", "level": {"icon": "️", "name": "SUCCESS", "no": 25}, "line": 45, "message": "success", "module": "logutil", "name": "__main__", "process": {"id": 15768, "name": "MainProcess"}, "thread": {"id": 10228, "name": "MainThread"}, "time": {"repr": "2022-07-28 11:25:44.438064+08:00", "timestamp": 1658978744.438064}}}

{"text": "2022-07-28 11:25:44.439 | WARNING | __main__:<module>:46 - warning\n", "record": {"elapsed": {"repr": "0:00:00.132645", "seconds": 0.132645}, "exception": null, "extra": {}, "file": {"name": "logutil.py", "path": "e:\\GitPorject\\ZanguS1\\ZanguRecorder\\common\\logutil.py"}, "function": "<module>", "level": {"icon": "️", "name": "WARNING", "no": 30}, "line": 46, "message": "warning", "module": "logutil", "name": "__main__", "process": {"id": 15768, "name": "MainProcess"}, "thread": {"id": 10228, "name": "MainThread"}, "time": {"repr": "2022-07-28 11:25:44.439061+08:00", "timestamp": 1658978744.439061}}}

{"text": "2022-07-28 11:25:44.440 | ERROR | __main__:<module>:47 - error\n", "record": {"elapsed": {"repr": "0:00:00.133642", "seconds": 0.133642}, "exception": null, "extra": {}, "file": {"name": "logutil.py", "path": "e:\\GitPorject\\ZanguS1\\ZanguRecorder\\common\\logutil.py"}, "function": "<module>", "level": {"icon": "", "name": "ERROR", "no": 40}, "line": 47, "message": "error", "module": "logutil", "name": "__main__", "process": {"id": 15768, "name": "MainProcess"}, "thread": {"id": 10228, "name": "MainThread"}, "time": {"repr": "2022-07-28 11:25:44.440058+08:00", "timestamp": 1658978744.440058}}}

{"text": "2022-07-28 11:25:44.442 | CRITICAL | __main__:<module>:48 - critical\n", "record": {"elapsed": {"repr": "0:00:00.135637", "seconds": 0.135637}, "exception": null, "extra": {}, "file": {"name": "logutil.py", "path": "e:\\GitPorject\\ZanguS1\\ZanguRecorder\\common\\logutil.py"}, "function": "<module>", "level": {"icon": "️", "name": "CRITICAL", "no": 50}, "line": 48, "message": "critical", "module": "logutil", "name": "__main__", "process": {"id": 15768, "name": "MainProcess"}, "thread": {"id": 10228, "name": "MainThread"}, "time": {"repr": "2022-07-28 11:25:44.442053+08:00", "timestamp": 1658978744.442053}}}

Use bind() to add extra attributes:

logger.add("test.log", format="{extra[ip]} {extra[user]} {message}")

context_logger = logger.bind(ip="192.168.0.1", user="someone")

context_logger.info("Contextualize your logger easily")

context_logger.bind(user="someone_else").info("Inline binding of extra attribute")

context_logger.info("Use kwargs to add context during formatting: {user}", user="anybody")

Output results

192.168.0.1 someone Contextualize your logger easily

192.168.0.1 someone_else Inline binding of extra attribute

192.168.0.1 anybody Use kwargs to add context during formatting: anybody

Use bind() together with filter to filter the logs:

logger.add("test.log", filter=lambda record: "special" in record["extra"])

logger.debug("This message is not logged to the file")

logger.bind(special=True).info("This message, though, is logged to the file!")

Output results

2022-07-28 11:33:58.214 | INFO | __main__:<module>:58 - This message, though, is logged to the file!

Loguru includes all the standard logging levels, plus trace and success. If you need a custom level, use the level() function to create one:

new_level = logger.level("test2", no=66, color="<black>", icon="")

logger.log("test2", "Here we go!")

Output results :

Date and time formatting

logger.add("test.log", format="{time:YYYY-MM-DD at HH:mm:ss} | {level} | {message}")

logger.debug("debug")

logger.info("info")

logger.success("success")

logger.warning("warning")

logger.error("error")

logger.critical("critical")

Output results :

2022-07-28 at 11:41:32 | DEBUG | debug

2022-07-28 at 11:41:32 | INFO | info

2022-07-28 at 11:41:32 | SUCCESS | success

2022-07-28 at 11:41:32 | WARNING | warning

2022-07-28 at 11:41:32 | ERROR | error

2022-07-28 at 11:41:32 | CRITICAL | critical

Using the logger in a script is easy, and you can configure() it at startup. To use Loguru from inside a library, remember never to call add(). Use disable() instead, so that the logging functions become no-ops. If a developer wants to see the library's logs, they can enable() it again.

# For scripts

config = {

    "handlers": [

        {"sink": sys.stdout, "format": "{time} - {message}"},

        {"sink": "test.log", "serialize": True},

    ],

    "extra": {"user": "someone"},

}

logger.configure(**config)

# For libraries

logger.disable("my_library")

logger.info("No matter added sinks, this message is not displayed")

logger.enable("my_library")

logger.info("This message however is propagated to the sinks")

Loguru can be combined with the notifiers library, a powerful notification module, to receive an e-mail when the program fails unexpectedly or to send many other kinds of notifications.

import notifiers

params = {

"username": "[email protected]",

"password": "abc123",

"to": "[email protected]"

}

# Send a single notification

notifier = notifiers.get_notifier("gmail")

notifier.notify(message="The application is running!", **params)

# Be alerted on each error message

from notifiers.logging import NotificationHandler

handler = NotificationHandler("gmail", defaults=params)

logger.add(handler, level="ERROR")

I hope this helps. If I got anything wrong, corrections are welcome.
