At present, we are still optimizing the single objective algorithm , The purpose is to avoid the sudden drop of multi-objective effect caused by the limitations of single objective algorithm .

This code adopts Python Realization , Later will be transferred to Flink platform .

It's a solemn reminder : The copyright of this article belongs to me , No one is allowed to copy , Carry , Use with my consent !

[1] Liaoyanna , Limengjun , Zhangjingqi .K Mean clustering - Particle swarm optimization multi-objective localization algorithm [J]. Electronic Design Engineering ,2018,26(02):56-60.

Abstract : Aiming at the problem of multi-target location in complex image background , use K Mean clustering - Particle swarm optimization combined algorithm , Using the crowding out mechanism to determine the initial clustering centroid ; The target particle population is divided into several subgroups according to the eigenvector , Update the Particle Optimization in each target subgroup ; Repeat clustering and subgroup optimization , Until each target subgroup gets the optimal solution . The algorithm is applied to multi license plate location , Based on the actual collection of a large number of parking lot pictures , The particle model of license plate target is designed , Eigenvectors and fitness functions , The algorithm is Matlab Simulation . Experimental results show that :K Mean clustering - Particle swarm optimization (PSO) realizes the accurate and automatic positioning of multiple targets , The algorithm converges stably .

[2] Guo Cheng , Zhang Wanda , Wang Bo , Wang Jiafu . Particle swarm optimization algorithm based on multi swarm parallel cooperation [J]. Computer and modernization ,2022,(01):33-40.

Abstract : For high-dimensional complex optimization problems, it is easy to produce dimension disaster when solving, resulting in the problem that the algorithm is easy to fall into local optimization , This paper presents a method that can comprehensively consider the characteristics of high-dimensional complex optimization problems , Multi swarm parallel cooperative particle swarm optimization algorithm with dynamic adjustment of evolutionary strategy . The algorithm is based on the analysis of particle characteristics in the process of solving high-dimensional complex problems , Establish a fused ring topology 、 Network model of multi swarm parallel cooperation of particle swarm optimization algorithm with fully connected topology and von Neumann topology . The model combines 3 The advantages of a topological particle swarm optimization algorithm in solving high-dimensional complex optimization problems , A particle broadcasting system based on multi community is designed - Feedback dynamic evolution strategy and its evolutionary algorithm , Realize the dynamic adaptation of topology in high-dimensional complex optimization environment , The algorithm has strong search ability in solving high-dimensional unimodal function and multimodal function . The simulation results show that , The algorithm has good performance in the optimization accuracy and convergence speed of solving high-dimensional complex optimization problems .

[3] Rhodes , Zhou Yongquan , Huang huajuan , Wei xingqiong . Multi swarm particle swarm optimization algorithm [J]. Computer engineering and Application ,2010,46(19):51-54.

Abstract : Divide a particle swarm of a certain size into three subgroups , And according to the basic particle optimization algorithm 、ω Particle swarm optimization algorithm with self linear adjustment strategy and cloud adaptive particle swarm optimization algorithm have three different evolution rules , It not only maintains the independence and superiority of each subgroup and algorithm , Without increasing the complexity of the algorithm , And to put forward " Super social " part , Redefined the speed replacement formula , At the same time, the expansion mutation method and perturbation operation are introduced . The experimental simulation results show that , The global search ability of the algorithm is given 、 Convergence rate , The accuracy and stability have been significantly improved .

[4] Yangdaoping . Research on neighborhood topology of particle swarm optimization algorithm [J]. China's high-tech enterprises ,2009,(16):36-37.

Abstract : Particle swarm optimization (PSO Algorithm ) It is a heuristic global optimization technique .PSO The neighborhood topology of particle swarm optimization is a very important factor to determine the effect of particle swarm optimization algorithm , Particle swarm optimization algorithm with different neighborhood topology , The effect is very different . This paper analyzes the neighborhood topology and PSO The relationship between algorithms , The research status of neighborhood topology of particle swarm optimization algorithm is described , The possible research directions in the future are put forward .

Here I refer to four papers , So I put forward three optimization ideas here , Here we first implement two versions .

date :2022.6.20 DAY 1

First, the most basic... Is realized based on non matrix operation PSO Algorithm

Implement the simplest algorithm based on the third reference . This is actually PSO Realized , But I want to go to reinforcement learning optimization , So we have to do it alone python Of .

ok , Let's first look at today's first working point , The most basic of tradition is 1995 The algorithm proposed in .

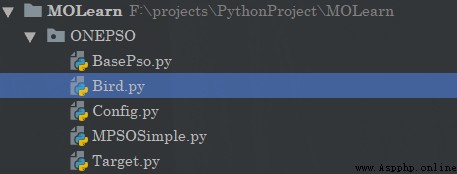

The overall project structure is as follows :

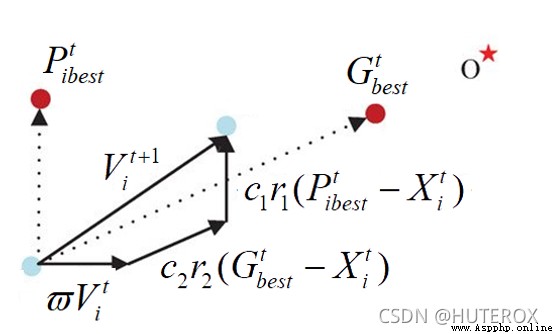

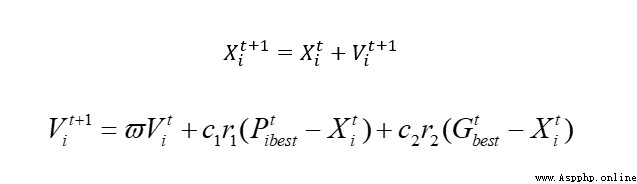

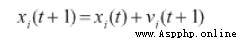

The basic core formula of the algorithm is as follows :

The first is configuration

This is mainly responsible for the following configurations , Then we use linear weights to unify .

# The setting of relevant parameters is completed through the configuration center

C1=2.0

C2=2.0

C3=2.0

W = 0.4

# Dimensions defined under a single objective

DIM = 2

X_down = -2.0

X_up = 2.0

V_min = -4.0

V_max = 4.0

# This parameter is used to optimize multiple groups later PSO Parameters of the algorithm

ClusterSize = 20

ClusterNumber = 3

# The size of the population

PopulationSize = 60

IterationsNumber = 1000

def LinearW(iterate):

# Number of incoming iterations

Wmax = 0.9

Wmin = 0.4

w = Wmax-(iterate*((Wmax-Wmin)/IterationsNumber))

return w

Then there is our objective optimization function , I also extracted the words here separately , Because I intend to optimize the single objective first , Then optimize the multi-objective , Then let's find some practical points to break through , Then the article came out , Although there are similar PlatEMO This platform , But as a sophomore in soft work , I still like to write my own wheels , And I have to go on the back Flink, The upper class processing engine is distributed ( So I don't need matrix in the following code , Utility class , Use objects to represent more information , Single machine has no concurrency , Multiple computers can be concurrent , If it's a matrix , Don't want to , so much trouble .

# This gadget is used to define functions that need to be optimized

class Target(object):

def SquareSum(self,X:list):

res = 0

for x in X:

res+=x*x

return res

This Bird That's what I do to reduce matrix operations

Although actually I use numpy It's going to be great , Direct matrix parallel operation , Then update it , But a single stream processing , It's hard to do , If we go to the back , Maybe I finished the matrix operation on a computer , If you look at Flink To handle the mechanism of data synchronization , Matrix is not easy to split , Then he came according to the slowest one , If you split , How much to dismantle , How to merge , How to handle the delay is a problem .

# Here we use definitions Class The way to achieve PSO, Easy to adjust later

from ONEPSO.Config import *

import random

class Bird(object):

ID = 0

# This is the population when it is divided into multiple groups ID

CID = 0

Iterate = 0

Y = None

X = None

# Record the optimal value of the individual , This is related to finding the global optimal value later

PbestY = None

PBestX = None

GBestX = None

GBestY = None

CBestY=None

CBestX=None

V = None

def __init__(self,ID):

self.ID = ID

self.V = [random.random() *(V_max-V_min) + V_min for _ in range(DIM)]

self.X = [random.random() *(X_up-X_down) + X_down for _ in range(DIM)]

def __str__(self):

return "ID:"+str(self.ID)+" -Fintess:%.2e:"%(self.Y)+" -X"+str(self.X)+" -PBestFitness:%.2e"%(self.PbestY)+" -PBestX:"+str(self.PBestX)+\

"\n -GBestFitness:%.2e"%(self.GBestY)+" -GBestX:"+str(self.GBestX)

And then the most basic implementation

# This is the most basic PSO Algorithm implementation , Used to test the rationality of the current algorithm architecture

# In addition, this gadget is also a tool class for optimization , The whole algorithm is to find the minimum value

from ONEPSO.Bird import Bird

from ONEPSO.Config import *

from ONEPSO.Target import Target

import random

import time

class BasePso(object):

Population = None

Random = random.random

target = Target()

W = W

def __init__(self):

# For convenience , Let's start with 1 Start

self.Population = [Bird(ID) for ID in range(1,PopulationSize+1)]

def ComputeV(self,bird:Bird):

# This method is used to calculate the velocity drop

NewV=[]

for i in range(DIM):

v = bird.V[i]*self.W + C1*self.Random()*(bird.PBestX[i]-bird.X[i])\

+C2*self.Random()*(bird.GBestX[i]-bird.X[i])

# Here, pay attention to judge whether it is beyond the scope

if(v>V_max):

v = V_max

elif(v<V_min):

v = V_min

NewV.append(v)

return NewV

def ComputeX(self,bird:Bird):

NewX = []

NewV = self.ComputeV(bird)

for i in range(DIM):

x = bird.X[i]+NewV[i]

if(x>X_up):

x = X_up

elif(x<X_down):

x = X_down

NewX.append(x)

return NewX

def InitPopulation(self):

# Initial population

GBestX = [0. for _ in range(DIM)]

Flag = float("inf")

for bird in self.Population:

bird.PBestX = bird.X

bird.Y = self.target.SquareSum(bird.X)

bird.PbestY = bird.Y

if(bird.Y<=Flag):

GBestX = bird.X

Flag = bird.Y

# After the convenience, we get the globally optimal population

for bird in self.Population:

bird.GBestX = GBestX

bird.GBestY = Flag

def Running(self):

# Here we begin to iterate

for iterate in range(1,IterationsNumber+1):

w = LinearW(iterate)

# This is a good one GBestX In fact, it is always the best thing in the next round

GBestX = [0. for _ in range(DIM)]

Flag = float("inf")

for bird in self.Population:

# Change to linear weight

self.W = w

x = self.ComputeX(bird)

y = self.target.SquareSum(x)

# Here we still need to be more detailed unconditionally , Otherwise, here C1 Is failure

# if(y<=bird.Y):

# bird.X = x

# bird.PBestX = x

bird.X = x

bird.Y = y

if(bird.Y<=bird.PbestY):

bird.PBestX=bird.X

bird.PbestY = bird.Y

# The best in an individual must contain the best that the whole world has experienced

if(bird.PbestY<=Flag):

GBestX = bird.PBestX

Flag = bird.PbestY

for bird in self.Population:

bird.GBestX = GBestX

bird.GBestY=Flag

if __name__ == '__main__':

start = time.time()

basePso = BasePso()

basePso.InitPopulation()

basePso.Running()

end = time.time()

for bird in basePso.Population:

print(bird)

print(" It takes a long time :",end-start)

The whole algorithm is very simple to write , It is also the easiest to understand in this way , The easiest to modify , Because I can change many things directly .

Then there are our many groups .

The first thing I have implemented here is the simplest one , Multi group ( In fact, I wrote this for testing , There are three more points to be achieved ), There are still a few papers to deal with tomorrow .

Article this thing , It's really fast to read and write code .

I suddenly found that it was really cool to mix in the school laboratory , Otherwise, it is still CURD, Still in the eight part essay .

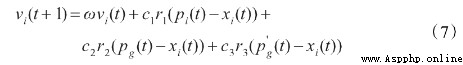

So this code is like this , Divided into three small groups , Now it's direct distribution , Three groups , Then update the equation to look like this

The last two points , One is how to divide the species group , One is about how this population interacts , In the first chapter, we mentioned the problems of different topology networks . The last one is how to optimize parameters , Because of this PSO, You will also find that this parameter is correct PSO The impact of , for example C1,2,3 Set up , This means which one you prefer later , Here I consider using reinforcement learning to optimize , Let's have a simple one first QL Try water .

# This algorithm is intended to BasePso On the basis of , Do a simple multi group optimization

from ONEPSO.BasePso import BasePso

from ONEPSO.Config import *

from ONEPSO.Bird import Bird

import time

class MPSOSimple(BasePso):

def __init__(self):

super(MPSOSimple,self).__init__()

self.Divide()

def Divide(self):

# Let's start with the simplest way , Hard classification

CID = 0

for bird in self.Population:

bird.CID=CID

if(bird.ID % ClusterSize==0):

if(CID<=ClusterNumber):

CID+=1

def ComputeV(self,bird:Bird):

# This method is used to calculate the velocity drop

NewV=[]

for i in range(DIM):

v = bird.V[i]*self.W + C1*self.Random()*(bird.PBestX[i]-bird.X[i])\

+C2*self.Random()*(bird.GBestX[i]-bird.X[i]) + C3*self.Random()*(bird.CBestX[i]-bird.X[i])

# Here, pay attention to judge whether it is beyond the scope

if(v>V_max):

v = V_max

elif(v<V_min):

v = V_min

NewV.append(v)

return NewV

def ComputeX(self,bird:Bird):

NewX = []

NewV = self.ComputeV(bird)

for i in range(DIM):

x = bird.X[i]+NewV[i]

if (x > X_up):

x = X_up

elif (x < X_down):

x = X_down

NewX.append(x)

return NewX

def InitPopulation(self):

# Initial population

# This is to record the global optimal solution

GBestX = [0. for _ in range(DIM)]

Flag = float("inf")

# There is also a record Cluster Optimal solution

CBest = {

}

CFlag = {

}

for i in range(ClusterNumber):

CFlag[i]=float("inf")

for bird in self.Population:

bird.PBestX = bird.X

bird.Y = self.target.SquareSum(bird.X)

bird.PbestY = bird.Y

bird.CBestX = bird.X

bird.CBestY = bird.Y

if(bird.Y<=Flag):

GBestX = bird.X

Flag = bird.Y

if(bird.Y<=CFlag.get(bird.CID)):

CBest[bird.CID]=bird.X

CFlag[bird.CID] = bird.Y

# After the convenience, we get the globally optimal population

for bird in self.Population:

bird.GBestX = GBestX

bird.GBestY = Flag

bird.CBestY=CFlag.get(bird.CID)

bird.CBestX=CBest.get(bird.CID)

def Running(self):

# Here we begin to iterate

for iterate in range(1,IterationsNumber+1):

w = LinearW(iterate)

# This is a good one GBestX In fact, it is always the best thing in the next round

GBestX = [0. for _ in range(DIM)]

Flag = float("inf")

CBest = {

}

CFlag = {

}

for i in range(ClusterNumber):

CFlag[i] = float("inf")

for bird in self.Population:

# Change to linear weight

self.W = w

x = self.ComputeX(bird)

y = self.target.SquareSum(x)

# Here we still need to be more detailed unconditionally , Otherwise, here C1 Is failure

# if(y<=bird.Y):

# bird.X = x

# bird.PBestX = x

bird.X = x

bird.Y = y

if(bird.Y<=bird.PbestY):

bird.PBestX=bird.X

bird.PbestY = bird.Y

# The best in an individual must contain the best that the whole world has experienced

if(bird.PbestY<=Flag):

GBestX = bird.PBestX

Flag = bird.PbestY

if (bird.Y <= CFlag.get(bird.CID)):

CBest[bird.CID] = bird.X

CFlag[bird.CID] = bird.Y

for bird in self.Population:

bird.GBestX = GBestX

bird.GBestY=Flag

bird.CBestY = CFlag.get(bird.CID)

bird.CBestX = CBest.get(bird.CID)

if __name__ == '__main__':

start = time.time()

mpso = MPSOSimple()

mpso.InitPopulation()

mpso.Running()

end = time.time()

for bird in mpso.Population:

print(bird)

print(" It takes a long time :",end-start)

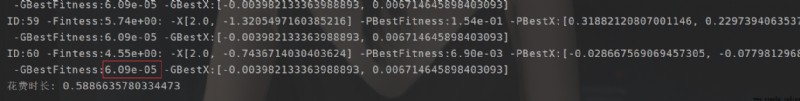

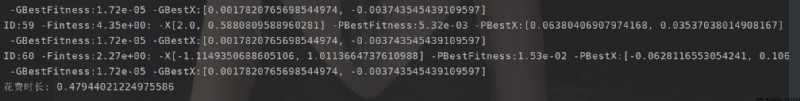

How to say this , The effect of the latter is relatively good .

The original BasePSo

My calculation is average