Author | Luo Zhao Cheng

Coordinating editor | Tang Xiaoyin

This article was first published on the CSDN WeChat account (ID: CSDNnews)

On the 10th day of the domestic release of Avengers 4 (Avengers: Endgame), a story began to circulate among programmers:

The Marvel Universe is really about just one thing. The whole universe is like a software project, and a team made up of Captain America, Iron Man, Captain Marvel, Hulk, Thor and others conscientiously maintains it.

One day a talented programmer named Thanos joined the company. He realized that the project was already huge: a build alone took several hours, and the system was running under heavy load. Server resources were very limited, and the boss had no budget for new machines. If development carried on like this, a P0 incident was only a matter of time. So he decided to optimize the project across the board: apply object-oriented design, extract duplicate code, split up the business logic, and optimize the algorithms, with the goal of cutting the amount of code by 50%.

When the project team led by Captain America, known as the Avengers, discovered what Thanos the programmer had in mind, they stepped in and warned him: Don't casually change old code! It's too easy to introduce bugs. As long as the code runs, leave it alone! (The above comes from Zhihu user @Guoqijun.)

So, as programmers writing about a movie, why not use data to analyze how Marvel fans rate Avengers 4?

In the industry, film analysis often relies on data from Maoyan. In this article, the author also uses Maoyan's interface to fetch data: it is easy to work with, and there is a large amount of data available.

You can look up the relevant interface on the Maoyan website yourself, or use the following address:

http://m.maoyan.com/mmdb/comments/movie/248172.json?_v_=yes&offset=20&startTime=2019-04-24%2002:56:46

In Python, the requests library makes it easy to send a request and get the JSON data that the interface returns. Here is the code:

    import requests

    def getMoveinfo(url):
        # Fetch one page of comments; returns the response body as text,
        # or None when the status code is not 200.
        session = requests.Session()
        headers = {
            "User-Agent": "Mozilla/5.0",
            "Accept": "text/html,application/xhtml+xml",
            "Cookie": "_lxsdk_cuid="
        }
        response = session.get(url, headers=headers)
        if response.status_code == 200:
            return response.text
        return None
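
The address above carries an `offset` and a `startTime` query parameter, so crawling many pages means building many such URLs. The sketch below shows one way to do that with the standard library. The function name `build_comment_url` is hypothetical, and what the server actually does with `offset` and `startTime` is an assumption; only the URL shape is taken from the article.

```python
from urllib.parse import quote, urlencode

# Base address taken from the article; the movie id is part of the path.
BASE = "http://m.maoyan.com/mmdb/comments/movie/{movie_id}.json"

def build_comment_url(movie_id, offset, start_time):
    """Build the comment-list URL for one page (hypothetical helper).

    How the server interprets offset and startTime is an assumption.
    """
    query = urlencode(
        {"_v_": "yes", "offset": offset, "startTime": start_time},
        quote_via=quote,  # encode the space as %20 rather than '+'
        safe=":",         # leave the colons in the timestamp unescaped
    )
    return BASE.format(movie_id=movie_id) + "?" + query

url = build_comment_url(248172, 20, "2019-04-24 02:56:46")
print(url)
```

With these arguments the result matches the address quoted above, so the string can be passed straight to `getMoveinfo`.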

The request returns JSON data containing the raw comment data we want, which we store in the database:

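    import sqlite3

    def saveItem(dbName, moveId, id, originalData):
        # Store one raw comment. INSERT OR REPLACE overwrites a row that
        # conflicts on a unique key instead of raising an error.
        conn = sqlite3.connect(dbName)
        conn.text_factory = str
        cursor = conn.cursor()
        ins = "INSERT OR REPLACE INTO comments values (?,?,?)"
        v = (id, originalData, moveId)
        cursor.execute(ins, v)
        cursor.close()
        conn.commit()
        conn.close()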
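
The article does not show how the `comments` table is created. The sketch below assumes a three-column table in the order `saveItem` inserts values, with the comment id as primary key; the column names and types are assumptions. It illustrates why `INSERT OR REPLACE` is convenient for a crawler: fetching the same comment twice leaves a single row.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # in-memory database, for illustration only
# Hypothetical schema: three columns in the order saveItem inserts them.
conn.execute(
    "CREATE TABLE comments (id INTEGER PRIMARY KEY, originalData TEXT, moveId INTEGER)"
)
ins = "INSERT OR REPLACE INTO comments values (?,?,?)"
conn.execute(ins, (1, '{"content": "first fetch"}', 248172))
conn.execute(ins, (1, '{"content": "second fetch"}', 248172))  # same id again
conn.commit()

rows = conn.execute("SELECT id, originalData FROM comments").fetchall()
print(rows)  # one row, holding the data from the second insert
conn.close()
```

Without a primary key or unique constraint, `INSERT OR REPLACE` has nothing to conflict on and behaves like a plain insert, so duplicates would accumulate.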

After about two hours, I had finally crawled about 90,000 records from Maoyan. The database file exceeded 100 MB.

The crawl above stores the raw data directly, without parsing it. The interface returns a lot of data, including user information and comment information. This analysis uses only part of it, so the relevant fields need to be extracted first:

    import json
    import sqlite3

    def convert(dbName):
        # Read every raw comment and write its parsed fields to convertData.
        conn = sqlite3.connect(dbName)
        conn.text_factory = str
        cursor = conn.cursor()
        cursor.execute("select * from comments")
        data = cursor.fetchall()
        for item in data:
            commentItem = json.loads(item[1])  # column 1 holds the raw JSON
            movieId = item[2]
            insertItem(dbName, movieId, commentItem)
        cursor.close()
        conn.commit()
        conn.close()

    def insertItem(dbName, movieId, item):
        # getValue is a helper that is not shown in this article.
        conn = sqlite3.connect(dbName)
        conn.text_factory = str
        cursor = conn.cursor()
        sql = ''' INSERT OR REPLACE INTO convertData values(?,?,?,?,?,?,?,?,?) '''
        values = (
            getValue(item, "id"),
            movieId,
            getValue(item, "userId"),
            getValue(item, "nickName"),
            getValue(item, "score"),
            getValue(item, "content"),
            getValue(item, "cityName"),
            getValue(item, "vipType"),
            getValue(item, "startTime"))
        cursor.execute(sql, values)
        cursor.close()
        conn.commit()
        conn.close()

The json library parses the raw data, and the fields we need are stored in a new table.
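
The `getValue` helper used by `insertItem` is not shown in the article. A minimal sketch of what it might look like, assuming it simply tolerates missing fields, is given below; the default of `None` and the sample record are assumptions made for illustration.

```python
import json

def getValue(item, key, default=None):
    """Return item[key], or default when the key is absent (hypothetical helper)."""
    return item.get(key, default)

# Made-up comment record; the field names are ones that insertItem reads.
raw = '{"id": 1, "nickName": "tester", "score": 5, "content": "great"}'
comment = json.loads(raw)
row = tuple(getValue(comment, k) for k in ("id", "nickName", "score", "cityName"))
print(row)  # cityName is missing from the record, so its slot is None
```

A `None` value is stored by sqlite3 as NULL, so a comment without a city still produces a complete row.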

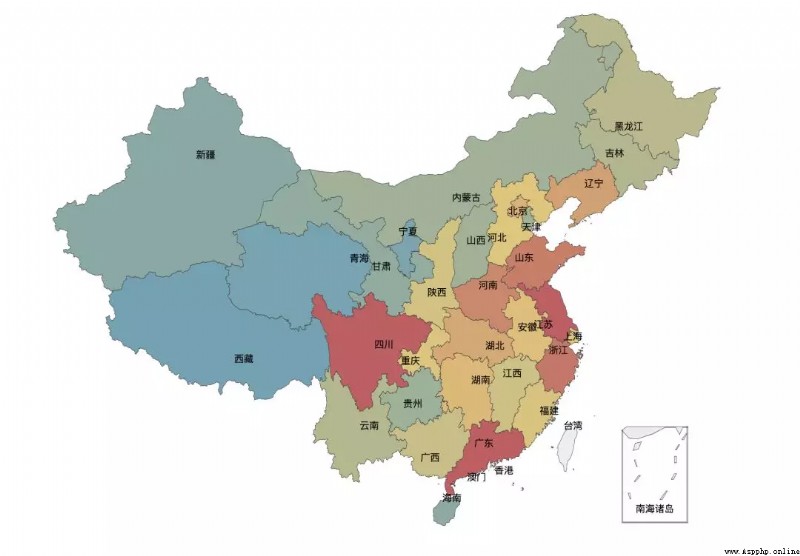

Because no platform exposes users' ticket purchase data, we can only look at the comment data and infer some trends from this small sample. The comment data includes the city of each commenter. By mapping each city to its province, we can see the number of comments per province, as shown in the figure below (the redder the color, the more comments):

As the figure shows, Shanghai, Guangzhou, and Sichuan have noticeably more commenting users than other regions. Here is the code:

    import json
    import pandas as pd

    # conn is an open sqlite3 connection to the database that holds convertData.
    data = pd.read_sql("select * from convertData", conn)
    # Average score and number of comments per city.
    city = data.groupby(['cityName'])
    city_com = city['score'].agg(['mean', 'count'])
    city_com.reset_index(inplace=True)
    # Only the first line of citys.json is parsed.
    with open("citys.json", 'r') as fo:
        citys_info = fo.readlines()
    citysJson = json.loads(str(citys_info[0]))
    print(city_com)
    # getRealName and realKeys are helpers that are not shown in this article.
    data_map_all = [(getRealName(city_com['cityName'][i], citysJson), city_com['count'][i]) for i in range(0, city_com.shape[0])]
    # Add up the counts of all cities that map to the same name.
    data_map_list = {}
    for item in data_map_all:
        if item[0] in data_map_list:
            data_map_list[item[0]] += item[1]
        else:
            data_map_list[item[0]] = item[1]
    data_map = [(realKeys(key), data_map_list[key]) for key in data_map_list.keys()]
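
The core of this step is folding per-city counts into per-province totals. The sketch below isolates that idea with the standard library. The lookup table, the counts, and the function name `sum_by_province` are all made up for illustration; the article's own lookup comes from `citys.json` and `getRealName`, whose contents are not shown.

```python
from collections import defaultdict

# Made-up lookup table and counts, for illustration only.
city_to_province = {"Chengdu": "Sichuan", "Mianyang": "Sichuan", "Shanghai": "Shanghai"}
city_counts = [("Chengdu", 120), ("Mianyang", 30), ("Shanghai", 200), ("Unknown", 5)]

def sum_by_province(counts, lookup):
    """Add up comment counts for all cities that belong to the same province."""
    totals = defaultdict(int)
    for city, count in counts:
        province = lookup.get(city)
        if province is None:
            continue  # skip cities the lookup table does not know
        totals[province] += count
    return dict(totals)

province_counts = sum_by_province(city_counts, city_to_province)
print(province_counts)
```

Skipping unknown cities is a design choice made here; whether the original code drops them or groups them under a placeholder name is not stated.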

Marvel films have always been highly rated and loved by Chinese audiences. Avengers 4 has a Douban score of 8.7. What trend do we see among our commenting users? See the figure below:

As the figure shows, 5-point ratings far outnumber every other score, so Chinese audiences clearly love Marvel's science fiction movies.
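
The article does not show the code behind the rating figure. One possible way to get the number of comments per score is a `GROUP BY` query on the `score` column, sketched below against an in-memory table. The reduced two-column schema and the sample scores are assumptions; the real `convertData` table has nine columns.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # in-memory database, for illustration only
# Reduced, hypothetical version of convertData with just the column we need.
conn.execute("CREATE TABLE convertData (id INTEGER PRIMARY KEY, score REAL)")
sample_scores = [5, 5, 4.5, 5, 3, 5, 4.5]  # made-up values
conn.executemany(
    "INSERT INTO convertData (score) VALUES (?)", [(s,) for s in sample_scores]
)

# Number of comments for each distinct score, lowest score first.
score_counts = conn.execute(
    "SELECT score, COUNT(*) FROM convertData GROUP BY score ORDER BY score"
).fetchall()
print(score_counts)
conn.close()
```

The resulting list of `(score, count)` pairs can be fed to any bar chart library.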

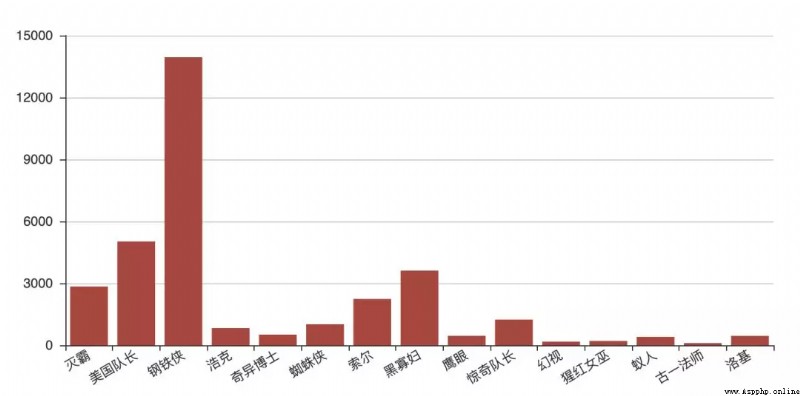

From the first film onward, the Avengers series has been the gathering of the Marvel Universe's superheroes, and the fourth film again brings all the heroes together. So which hero is most popular with the audience? First, the code:

    from pyecharts import Bar  # pyecharts 0.5.x API

    # Names are shown in English here. The comments being searched are in
    # Chinese, so the actual lists hold the Chinese names and nicknames.
    attr = ["Thanos", "Captain America",
            "Iron Man", "Hulk", "Doctor Strange", "Spider-Man", "Thor", "Black Widow",
            "Hawkeye", "Captain Marvel", "Vision",
            "Scarlet Witch", "Ant-Man", "Ancient One"]
    alias = {
        "Thanos": ["Thanos"],
        "Captain America": ["Captain America", "Cap"],
        "Hulk": ["Hulk", "The Incredible Hulk", "Banner", "HULK"],
        "Doctor Strange": ["Doctor Strange", "Doctor"],
        "Iron Man": ["Iron Man", "stark", "Stark", "Tony"],
        "Spider-Man": ["Spider-Man", "Spider", "Peter", "Holland"],
        "Thor": ["Thor", "God of Thunder"],
        "Black Widow": ["Black Widow", "Widow"],
        "Hawkeye": ["Hawkeye", "Clinton", "Barton", "Clint"],
        "Captain Marvel": ["Captain Marvel", "Carol", "Cap Marvel"],
        "Nebula": ["Nebula"],
        "Scarlet Witch": ["Scarlet Witch", "Wanda"],
        "Ant-Man": ["Ant-Man", "Ant", "AntMan"],
        "Ancient One": ["Ancient One", "Sorcerer"]
    }
    # getAlias and getCommentCount are helpers that are not shown in this article.
    v1 = [getCommentCount(getAlias(alias, attr[i])) for i in range(0, len(attr))]
    bar = Bar("Hiro")
    bar.add("count", attr, v1, is_stack=True, xaxis_rotate=30, yaxis_min=4.2,
            xaxis_interval=0, is_splitline_show=True)
    bar.render("html/hiro_count.html")
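
The helpers `getAlias` and `getCommentCount` are not shown in the article. The sketch below gives hypothetical stand-ins, named `get_alias` and `count_comments`, that work on an in-memory list rather than the database. The fallback to the bare name for heroes without an alias entry, and the sample comments, are assumptions made for illustration.

```python
def get_alias(alias, name):
    """Return the alias list for name, or just [name] when none is registered."""
    return alias.get(name, [name])

def count_comments(comments, names):
    """Count comments that mention at least one of the names (each comment once)."""
    return sum(1 for text in comments if any(n in text for n in names))

# Made-up comments, for illustration only.
comments = [
    "Tony Stark carried this film",
    "Thanos was right, Thanos forever",
    "Stark and Thanos both got great scenes",
]
alias = {"Iron Man": ["Iron Man", "Stark", "Tony"], "Thanos": ["Thanos"]}
counts = {
    name: count_comments(comments, get_alias(alias, name))
    for name in ["Iron Man", "Thanos", "Vision"]
}
print(counts)
```

Because `any` stops at the first matching alias, a comment that uses two nicknames for the same hero is still counted once.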

The results are shown below. Iron Man is the well-deserved center of attention, not only in the film but also in the comment section, far ahead of Captain America, Black Widow, and Thor:

The audience distribution and rating data above show that audiences really like this film. Earlier, we collected the audience's comment data from Maoyan. Now the author uses Jieba to segment the comments into words and then builds a word cloud with Wordcloud, to see the audience's overall impression of the Avengers film:

As you can see, Thanos and Iron Man appear far more often than the other heroes. Does this mean the two of them are the real protagonists of the film?

Careful readers will have noticed that the figures for Iron Man and Thanos differ between the word cloud and the comment counts. The reason is that the comment count tallies the number of comments that mention a hero, while the word cloud uses word frequency, so a name that appears several times in one comment is counted several times. That is why Thanos appears more often than Iron Man in the word cloud.
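
The difference between the two measures is easy to reproduce on a toy example. In the sketch below, the comments are made up and the function names `comment_count` and `word_frequency` are hypothetical; plain substring counting stands in for the Jieba segmentation used in the article.

```python
# Made-up comments, for illustration only.
comments = [
    "Thanos Thanos Thanos, what a villain",
    "Iron Man is the best",
    "Iron Man made me cry",
]

def comment_count(comments, name):
    """Number of comments that mention the name at least once."""
    return sum(1 for text in comments if name in text)

def word_frequency(comments, name):
    """Total number of times the name occurs across all comments."""
    return sum(text.count(name) for text in comments)

result = {
    name: (comment_count(comments, name), word_frequency(comments, name))
    for name in ("Iron Man", "Thanos")
}
print(result)  # {'Iron Man': (2, 2), 'Thanos': (1, 3)}
```

Here Iron Man leads by comment count while Thanos leads by word frequency, which is the same kind of reversal seen between the bar chart and the word cloud.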

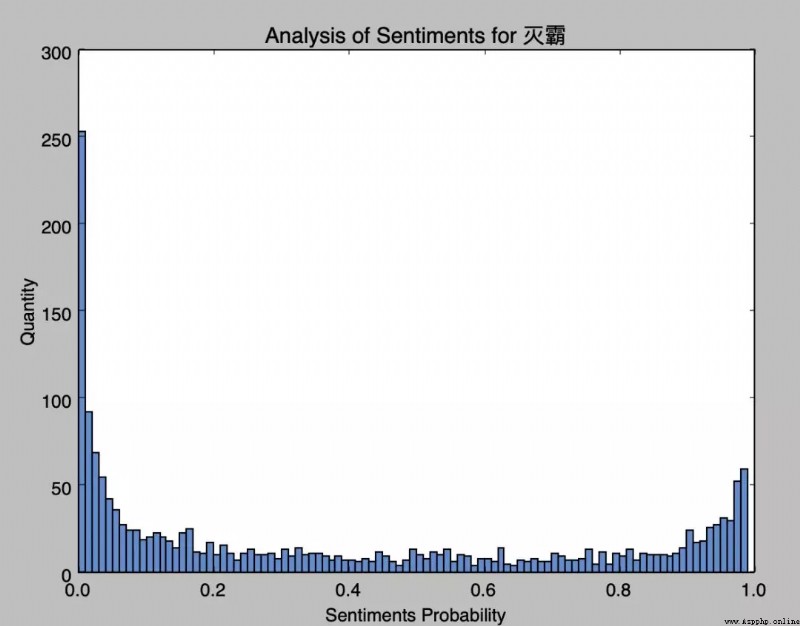

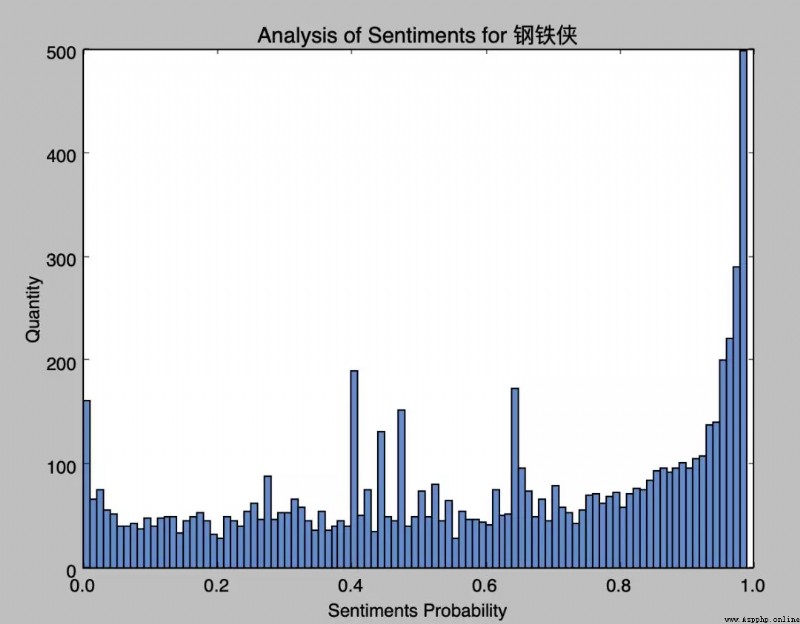

Finally, let's run sentiment analysis on the comments about Iron Man and Thanos. First, the code:

    import sqlite3
    import numpy as np
    import matplotlib.pyplot as plt
    from snownlp import SnowNLP

    def emotionParser(name):
        conn = sqlite3.connect("end.db")
        conn.text_factory = str
        cursor = conn.cursor()
        # Select the comments that mention the name, using a parameterized LIKE.
        cursor.execute("select content from convertData where content like ?",
                       ("%" + name + "%",))
        values = cursor.fetchall()
        sentimentslist = []
        for item in values:
            # sentiments is a value between 0 (negative) and 1 (positive).
            sentimentslist.append(SnowNLP(item[0]).sentiments)
        # 100 equal-width bins covering the full range from 0 to 1.
        plt.hist(sentimentslist, bins=np.linspace(0, 1, 101), facecolor="#4F8CD6")
        plt.xlabel("Sentiments Probability")
        plt.ylabel("Quantity")
        plt.title("Analysis of Sentiments for " + name)
        plt.show()
        cursor.close()
        conn.close()

Here, SnowNLP is used for the sentiment analysis.

Sentiment analysis, also known as opinion mining or tendency analysis, is, in short, the process of analyzing, processing, summarizing, and reasoning about subjective text that carries emotional coloring.

As the figures show, comments about Iron Man are more positive than comments about Thanos: villains tend to draw resistance.
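
Comparing two histograms by eye can be backed up with a couple of summary numbers. The sketch below is one possible way to do that, not the author's method: it reports the mean score and the share of scores above a threshold. The function name `summarize`, the 0.5 threshold, and both score lists are assumptions made for illustration; real input would be the `sentimentslist` values collected in `emotionParser`.

```python
from statistics import mean

def summarize(scores, threshold=0.5):
    """Mean sentiment and share of scores above the threshold (assumed to be 0.5)."""
    positive = sum(1 for s in scores if s > threshold)
    return {"mean": mean(scores), "positive_share": positive / len(scores)}

# Made-up sentiment scores in the range 0 to 1, for illustration only.
hero_scores = [0.9, 0.8, 0.7, 0.4]
villain_scores = [0.6, 0.3, 0.2, 0.1]

hero = summarize(hero_scores)
villain = summarize(villain_scores)
print(hero["positive_share"], villain["positive_share"])
```

A higher mean and a larger positive share for one character would support the reading taken from the histograms.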

Finally, Thanos, who came over from the Guardians of the Galaxy era, turned to dust and vanished in the last minutes. That is also a warning for us programmers:

Refactoring code, improving the design, and reducing system complexity are all good things to do. However, we must keep the system running stably and leave no hidden risks behind; otherwise, sooner or later, we will lose our jobs.