本文基於Keras框架和維基百科中文預訓練詞向量Word2vec模型,分別實現由GRU、LSTM、RNN神經網絡組成的詞性標注模型,並且將模型封裝,使用python Django web框架搭建網站,使用戶通過網頁界面實現詞性標注模型的使用與生成。

詞性標注(Part-Of-Speech tagging, POS tagging)也被稱為語法標注(grammatical tagging)或詞類消疑(word-category disambiguation),是語料庫語言學(corpus linguistics)中將語料庫內單詞的詞性按其含義和上下文內容進行標記的文本數據處理技術

Word2vec,是一群用來產生詞向量的相關模型。這些模型為淺而雙層的神經網絡,用來訓練以重新建構語言學的詞文本。

循環神經網絡(Recurrent Neural Network, RNN)是一類以序列(sequence)數據為輸入,在序列的演進方向進行遞歸(recursion)且所有節點(循環單元)按鏈式連接的遞歸神經網絡(recursive neural network)

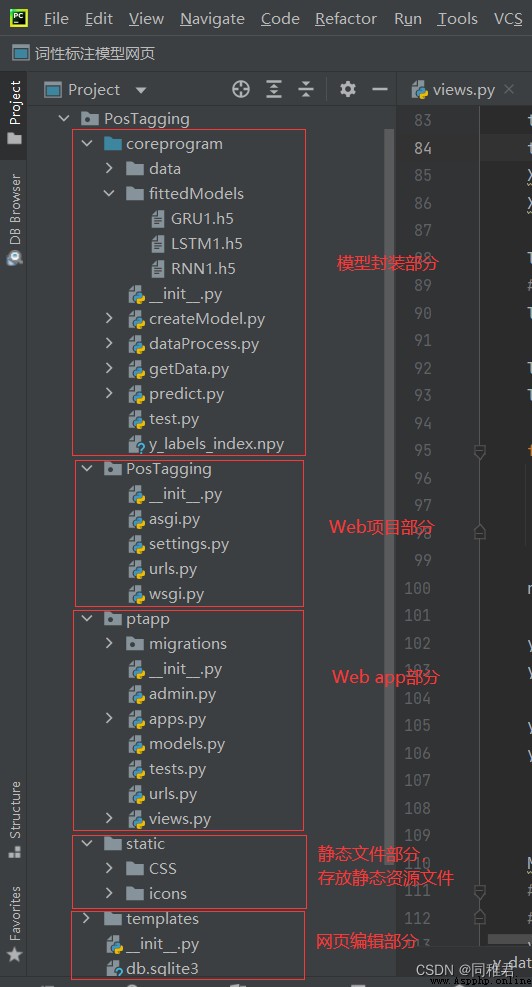

本文采用python面向對象編程思想,對詞性標注模型的構建、訓練、預測以及數據的處理分別進行封裝,采用python Django Web框架,時模型能夠在網頁端被創建與使用。

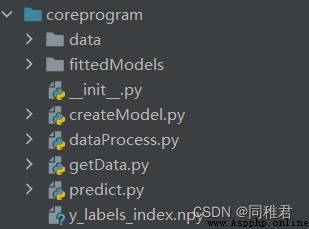

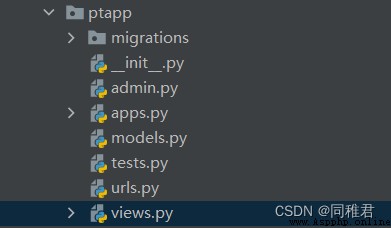

後端代碼目錄如下圖:

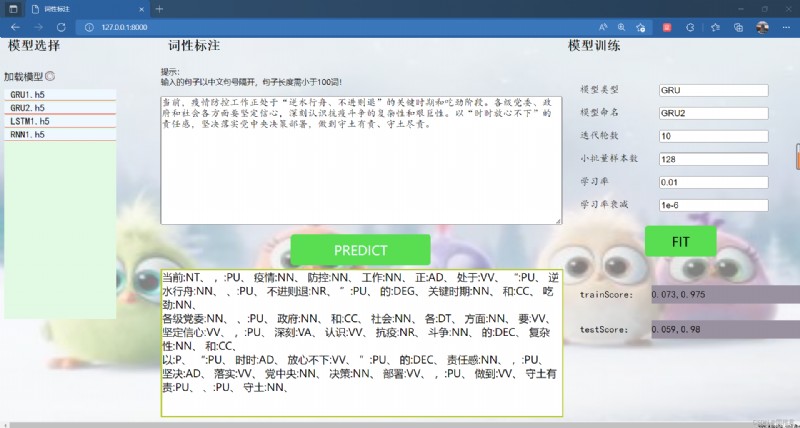

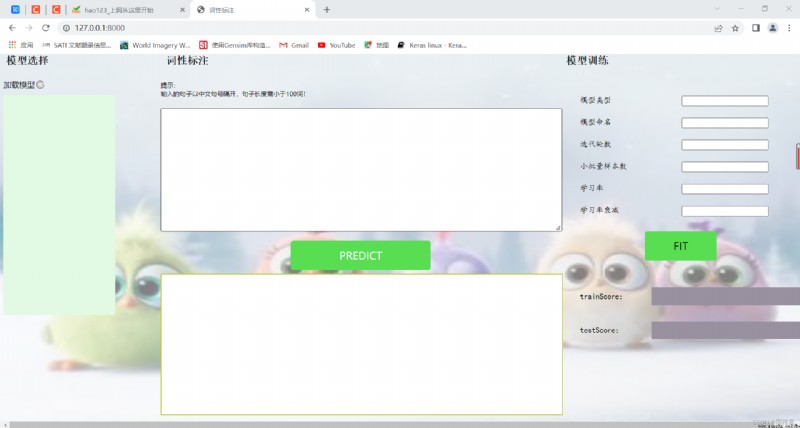

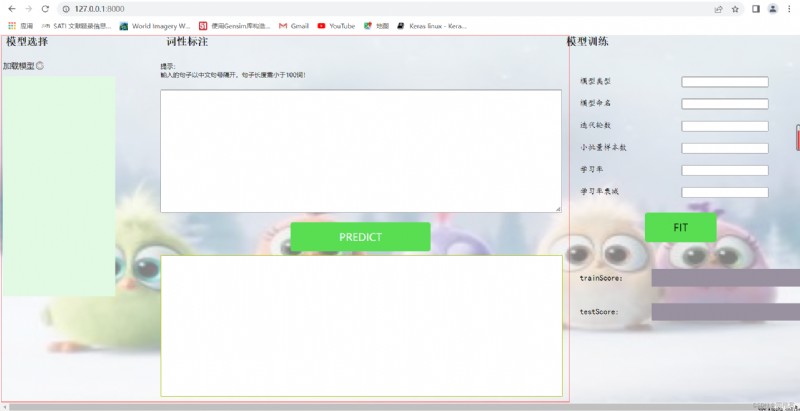

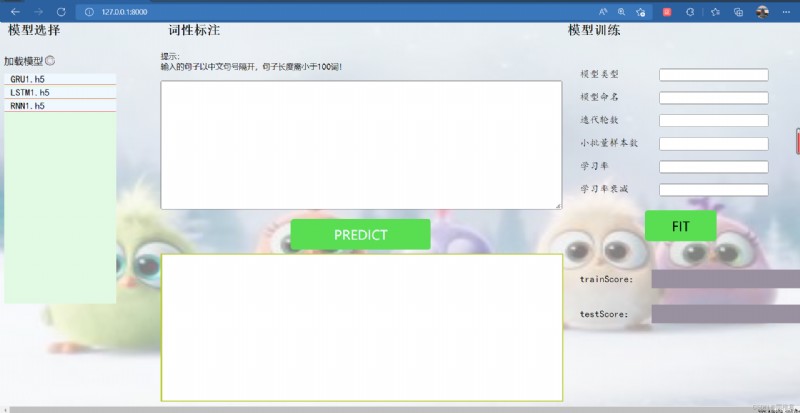

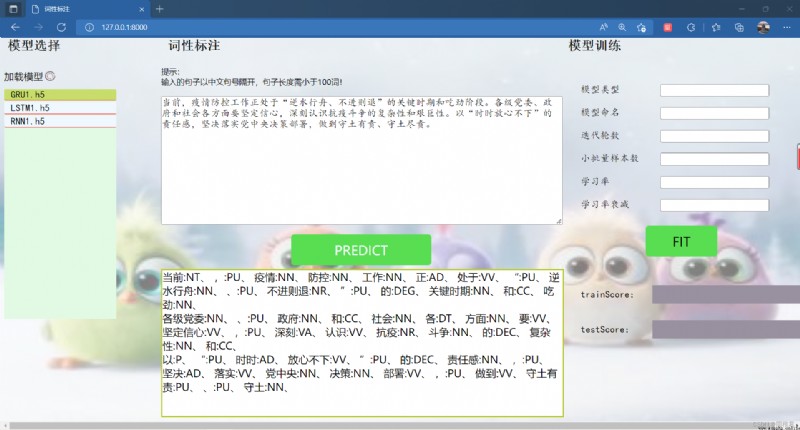

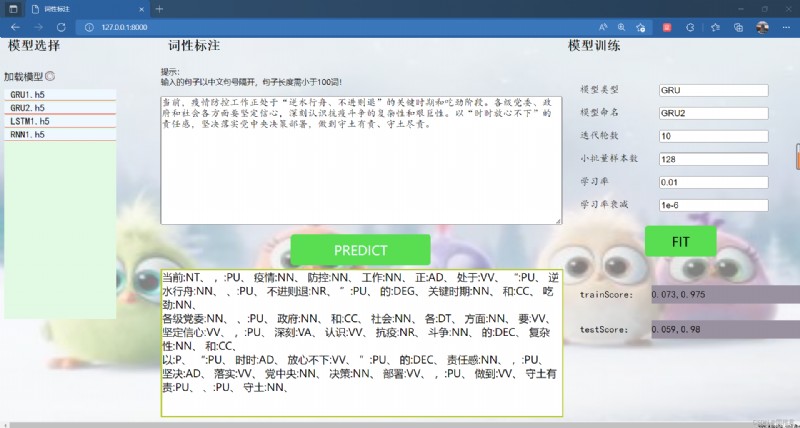

網頁效果圖如下:

模型代碼部分展示:

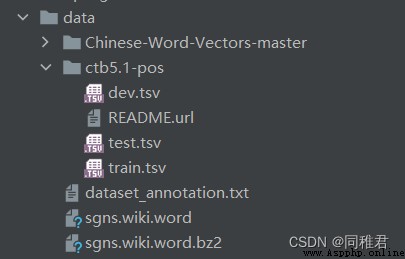

data文件夾下存放用於模型訓練的原始數據,以及預訓練好的維基百科中文詞向量模型sgns.wiki.word。

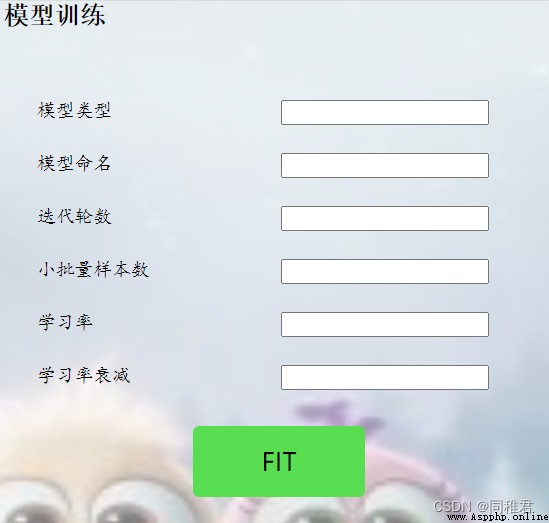

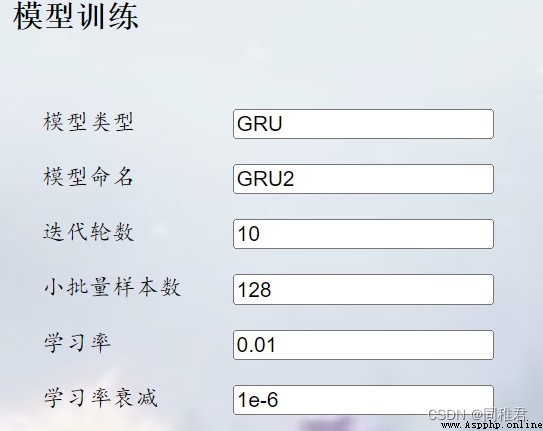

fittedModels文件夾下保存的是根據用戶在網頁端輸入的參數而創建的訓練好的詞性標注模型。用戶指定輸入的參數如下圖。

getData.py 用於讀取原始訓練數據和測試數據,並接收用戶輸入的句子,將用戶輸入的句子使用jieba進行分詞,以便用於預測。內部代碼如下:

# 獲取用於測試以及檢測的數據集

def get_dataSet(file_path):

data = pd.read_csv(file_path, sep='\t', skip_blank_lines=False, header=None)

# 取出文本部分

content = data[0]

# 取出標簽部分

label = data[1]

return content, label

# 對用戶的輸入進行分詞,輸出的格式與get_dataSet() 中的 content 相同

def get_userInputData(userInput):

seg_list = jieba.cut(userInput, cut_all=False)

seg_list = list(seg_list)

for i in range(len(seg_list)):

if seg_list[i] == "。":

seg_list[i] = nan

content = pd.DataFrame(data=seg_list)

content = content[0]

return contentdataProcess.py 用於將getData.py得到的數據進行數據變換,變換能夠用於訓練和預測的數據,即生成X_train_tokenized、X_tset_tokenized、X_data_tokenized(由用戶輸入的句子轉換而來)、y_train_index_paddad、y_test_index_paddad。內部代碼如下:

import gensim

from numpy import nan

from tensorflow.keras.preprocessing import sequence

from getData import get_dataSet, get_userInputData

import numpy as np

# 按句對X、y進行拆分

def split_corpus_by_sentence(content):

cleaned_sentence = []

split_label = content.isnull()

last_split_index = 0

index = 0

while index < len(content):

current_word = content[index]

if split_label[index] == True and len(cleaned_sentence) == 0:

cleaned_sentence.append(np.array(content[last_split_index:index]))

last_split_index = index + 1

index += 1

elif split_label[index] == True and len(cleaned_sentence) > 0:

cleaned_sentence.append(np.array(content[last_split_index:index]))

last_split_index = index + 1

index += 1

else:

index += 1

return cleaned_sentence

# --------------------------------------------------------------------------------------------------------

# 序號化 文本,tokenizer句子,並返回每個句子所對應的詞語索引

# 由於將詞語轉化為索引的word_index需要與詞向量模型對齊,故在導入詞向量模型後再將X進行處理

def tokenizer(texts, word_index):

MAX_SEQUENCE_LENGTH = 100

data = []

for sentence in texts:

new_sentence = []

for word in sentence:

try:

new_sentence.append(word_index[word]) # 把文本中的 詞語轉化為index

except:

new_sentence.append(0)

data.append(new_sentence)

# 使用kears的內置函數padding對齊句子,好處是輸出numpy數組,不用自己轉化了

data = sequence.pad_sequences(data, maxlen=MAX_SEQUENCE_LENGTH, padding='post', truncating='post')

return data

def getWord2vec():

myPath = 'coreprogram/data/sgns.wiki.word' # 本地詞向量的地址

Word2VecModel = gensim.models.KeyedVectors.load_word2vec_format(myPath) # 讀取詞向量,以二進制讀取

word_index = {" ": 0} # 初始化 `{word : token}` ,後期 tokenize 語料庫就是用該詞典。

word_vector = {} # 初始化`{word : vector}`字典

embeddings_matrix = np.zeros((len(Word2VecModel.index_to_key) + 1, Word2VecModel.vector_size))

for i in range(len(Word2VecModel.index_to_key)):

word = Word2VecModel.index_to_key[i] # 提取每個詞語

word_index[word] = i + 1 # {“詞語”:序號, ***} 序號從1開始,

word_vector[word] = Word2VecModel[word] # {"詞語":[詞向量], ***}

embeddings_matrix[i + 1] = Word2VecModel[word] # 詞向量矩陣, 從第1行到最後為各個詞的詞向量,首行為全0的向量

return word_index, word_vector, embeddings_matrix

def X_dataProcess(X_data):

X_data_sent_split = split_corpus_by_sentence(X_data)

word_index, word_vector, embeddings_matrix = getWord2vec()

X_data_tokenized = tokenizer(X_data_sent_split, word_index)

# print(X_data_tokenized[0])

return X_data_tokenized

# ---------------------------------------------------------------------------------------------------------------

def transfer_label_category_index(origin_labels, labels_types):

transfered_label = []

for sentence_labels in origin_labels:

labels_format_index = [labels_types.index(label) for label in sentence_labels] # 將標簽依據字典轉化為序號

transfered_label.append(labels_format_index)

return transfered_label

def y_dataProcess():

train_data_url = 'coreprogram/data/ctb5.1-pos/train.tsv'

test_data_url = 'coreprogram/data/ctb5.1-pos/test.tsv'

X_train, y_train = get_dataSet(train_data_url)

X_test, y_test = get_dataSet(test_data_url)

labels = y_train.tolist() + y_test.tolist()

# labels_types = list(set(labels)) # 使用set會導致每次訓練的元素排序不同,從而在預測時無法按照索引找到對應的詞性

labels_types = [nan, 'AD', 'CC', 'IJ', 'NP', 'ETC', 'DEG', 'VE', 'VV', 'CD', 'VA', 'SB', 'LC', 'NR', 'CS', 'DER', 'PU', 'BA', 'X', 'M', 'OD', 'MSP', 'LB', 'DEC', 'DEV', 'NN', 'P', 'FW', 'DT', 'PN', 'VC', 'SP', 'VP', 'NT', 'AS', 'JJ']

labels_dict = {}

labels_index = {"padded_label": 0}

for index in range(len(labels_types)):

label = labels_types[index]

labels_dict.update({label: labels.count(label)})

labels_index.update({label: index + 1})

np.save('y_labels_index.npy', labels_index)

y_train_sent_split = split_corpus_by_sentence(y_train)

y_test_sent_split = split_corpus_by_sentence(y_test)

y_train_index = transfer_label_category_index(y_train_sent_split, labels_types)

y_test_index = transfer_label_category_index(y_test_sent_split, labels_types)

MAX_SEQUENCE_LENGTH = 100

# 標簽格式轉化

# 構建對應(標簽樣本數,句子長度,標簽類別數)形狀的張量,值全為0

y_train_index_padded = np.zeros((len(y_train_index), MAX_SEQUENCE_LENGTH, len(labels_types) + 1), dtype='float',

order='C')

y_test_index_padded = np.zeros((len(y_test_index), MAX_SEQUENCE_LENGTH, len(labels_types) + 1), dtype='float',

order='C')

# 填充張量

for sentence_labels_index in range(len(y_train_index)):

for label_index in range(len(y_train_index[sentence_labels_index])):

if label_index < MAX_SEQUENCE_LENGTH:

y_train_index_padded[

sentence_labels_index, label_index, y_train_index[sentence_labels_index][label_index] + 1] = 1

if len(y_train_index[sentence_labels_index]) < MAX_SEQUENCE_LENGTH:

for label_index in range(len(y_train_index[sentence_labels_index]), MAX_SEQUENCE_LENGTH):

y_train_index_padded[sentence_labels_index, label_index, 0] = 1

# 優化:若為填充的標簽,則將其預測為第一位為1

for sentence_labels_index in range(len(y_test_index)):

for label_index in range(len(y_test_index[sentence_labels_index])):

if label_index < MAX_SEQUENCE_LENGTH:

y_test_index_padded[

sentence_labels_index, label_index, y_test_index[sentence_labels_index][label_index] + 1] = 1

if len(y_test_index[sentence_labels_index]) < MAX_SEQUENCE_LENGTH:

for label_index in range(len(y_test_index[sentence_labels_index]), MAX_SEQUENCE_LENGTH):

y_test_index_padded[sentence_labels_index, label_index, 0] = 1

return y_train_index_padded, y_test_index_padded, labels_types

createModel.py用於模型的創建於訓練,模型創建與訓練所需要的參數如模型類型、模型保存名、迭代輪數、學習率、學習率衰減等均可由用戶自定義。模型訓練會返回在訓練集以及測試集的評估結果(交叉熵損失值、准確率),並最終顯示在網頁上。createModel.py內部代碼如下:

此處代碼不完整,完整代碼請在文末連接處獲取

# 此處代碼不完整,完整代碼請在文末連接處獲取

import tensorflow as tf

from keras.models import Sequential

from keras.layers import Embedding, Dense, Dropout, GRU, LSTM, SimpleRNN

import keras

from keras import optimizers

from dataProcess import getWord2vec, y_dataProcess, X_dataProcess

from getData import get_dataSet

EMBEDDING_DIM = 300 #詞向量維度

MAX_SEQUENCE_LENGTH = 100

def createModel(modelType, saveName,epochs=10, batch_size=128,lr=0.01, decay=1e-6):

train_data_url = 'coreprogram/data/ctb5.1-pos/train.tsv'

test_data_url = 'coreprogram/data/ctb5.1-pos/test.tsv'

X_train, y_train = get_dataSet(train_data_url)

X_test, y_test = get_dataSet(test_data_url)

X_train_tokenized = X_dataProcess(X_train)

X_test_tokenized = X_dataProcess(X_test)

y_train_index_padded, y_test_index_padded, labels_types = y_dataProcess()

word_index, word_vector, embeddings_matrix = getWord2vec()

model = Sequential()

if modelType=="LSTM":

model.add(LSTM(128, input_shape=(MAX_SEQUENCE_LENGTH, EMBEDDING_DIM), activation='tanh', return_sequences=True))

elif modelType=="RNN":

model.add(SimpleRNN(128, input_shape=(MAX_SEQUENCE_LENGTH, EMBEDDING_DIM), activation='tanh', return_sequences=True))

elif modelType=="GRU":

model.add(GRU(128, input_shape=(MAX_SEQUENCE_LENGTH, EMBEDDING_DIM), activation='tanh', return_sequences=True))

print(testScore)

model.save("coreprogram/fittedModels/"+ saveName +".h5")

return trainScore, testScore

控制前後端數據交互的關鍵代碼在views.py中,前端通過Ajax發送GET請求向後端申請模型數據、詞性標注結果、模型訓練好後的評估數據等。views.py中的代碼如下:

from django.shortcuts import render

# Create your views here.

import json

import os

from django.http import HttpResponse

from django.shortcuts import render

import predict

import createModel

def Home(request):

global result

if request.method == 'GET':

name = request.GET.get('name')

if name == 'getModelsName':

filesList = None

for root, dirs, files in os.walk("coreprogram/fittedModels"):

filesList = files

result = {

"modelsNameList": filesList

}

if name == 'predict':

chosenModelName = request.GET.get('chosenModelName')

inputSentence = request.GET.get('inputSentence')

modelUrl = "coreprogram/fittedModels/" + chosenModelName

posTaggingresult = predict.finalPredict(modelUrl, inputSentence)

print(posTaggingresult)

result = {

"posTaggingresult": posTaggingresult

}

if name == "fit":

modetype = request.GET.get('modetype')

saveName = request.GET.get('saveName')

epochs = request.GET.get('epochs')

batch_size = request.GET.get('batch_size')

lr = request.GET.get('lr')

decay = request.GET.get('decay')

trainScore, testScore = createModel.createModel(modetype, saveName, epochs=int(epochs), batch_size=int(batch_size), lr=float(lr), decay=float(decay))

trainScore = [round(i,3) for i in trainScore]

testScore = [round(i,3) for i in testScore]

result = {

"trainScore": trainScore,

"testScore":testScore,

}

return render(request, "ptapp/HomePage.html")

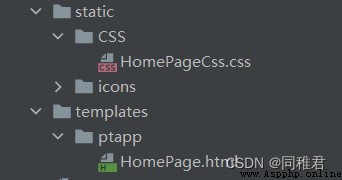

static文件夾中保存網頁中需要用到圖標、css頁面渲染文件等,templates中保存編寫的網頁html文件。HomePage.html中的代碼如下:

<!DOCTYPE html>

<html lang="zh-CN">

<head>

<meta charset="UTF-8">

<title>詞性標注</title>

<link rel="stylesheet" type="text/css" href="../../static/CSS/HomePageCss.css">

<script src="https://apps.bdimg.com/libs/jquery/2.1.4/jquery.min.js"></script>

</head>

<body>

<div class="background"></div>

<div class="part1">

<div class="title">模型選擇</div>

<div id="refresh">加載模型<img src="../../static/icons/刷新.png"></img></div>

<div class="modelsList">

<ul id="modelsList_ul"></ul>

</div>

</div>

<div class="part2">

<div class="title">詞性標注</div>

<div class="input">

<div>提示:<br/>輸入的句子以中文句號隔開,句子長度需小於100詞!</div>

<textarea name="inputSentenceTextarea" id="inputSentence" cols="30" rows="10"></textarea>

</div>

<div class="predict">

<div id="predictButton">PREDICT</div>

</div>

<div id="output" class="output"></div>

</div>

<div class="part3">

<div class="title">模型訓練</div>

<div class="empty"></div>

<div class="paramenter">模型類型

<input type="text" id="modetype">

</div>

<div class="paramenter">模型命名

<input type="text" id="saveName">

</div>

<div class="paramenter">迭代輪數

<input type="text" id="epochs">

</div>

<div class="paramenter">小批量樣本數

<input type="text" id="batch_size">

</div>

<div class="paramenter">學習率

<input type="text" id="lr">

</div>

<div class="paramenter">學習率衰減

<input type="text" id="decay">

</div>

<div class="fit">

<div id="fitbutton">FIT</div>

</div>

<div class="fitresult">

<div class="score">trainScore:<div id="trainScoreResult"></div></div>

<div class="score">testScore:<div id="testScoreResult"></div></div>

</div>

</div>

</body>

<script>

/* 初始化選擇的模型的名稱 */

var chosenModelName=""

document.getElementById("refresh").onclick = function() {

var data = {'name': 'getModelsName'}

$.ajax({

url: 'ptapp/Home',

type: "GET",

data: data,

success: function (arg) {

var modelsNameList = arg["modelsNameList"]

var ulInnerHtml =""

for(var i=0; i<modelsNameList.length; i++){

ulInnerHtml =ulInnerHtml + "<li class='li_unselected'>" + modelsNameList[i] + "</li>"

}

document.getElementById("modelsList_ul").innerHTML = ulInnerHtml

var modelliList = document.getElementById("modelsList_ul").getElementsByTagName("li")

var modelliList = document.getElementById("modelsList_ul").getElementsByTagName("li")

console.log("modelliList.length",modelliList.length)

for(var i = 0; i < modelliList.length; i++){

modelliList[i].onclick = function(){

var modelName = this.innerText

chosenModelName = modelName

console.log(chosenModelName)

var className = this.className

for(var j = 0; j < modelliList.length; j++){

modelliList[j].className = "li_unselected"

}

this.className = "li_selected"

}

}

}

})

}

document.getElementById("predictButton").onclick = function(){

var inputSentence = document.getElementById("inputSentence").value

if(inputSentence){

if(chosenModelName){

var data = {'name': 'predict', "chosenModelName": chosenModelName, "inputSentence": inputSentence}

$.ajax({

url: 'ptapp/Home',

type: "GET",

data: data,

success: function (arg){

var posTaggingresult = arg["posTaggingresult"]

document.getElementById("output").innerHTML = posTaggingresult

}

})

}else{

alert("請選擇訓練好的模型!")

}

}else{

alert("請輸入句子!")

}

}

document.getElementById("fitbutton").onclick = function(){

var modetype = document.getElementById("modetype").value

var saveName = document.getElementById("saveName").value

var epochs = document.getElementById("epochs").value

var batch_size = document.getElementById("batch_size").value

var lr = document.getElementById("lr").value

var decay = document.getElementById("decay").value

if(modetype&&saveName&&epochs&&batch_size&&lr&&decay){

var data = {'name': 'fit', "modetype": modetype, "saveName": saveName, "epochs": epochs,"batch_size":batch_size, "lr":lr, "decay":decay}

$.ajax({

url: 'ptapp/Home',

type: "GET",

data: data,

success: function (arg){

var trainScore = arg["trainScore"]

var testScore = arg["testScore"]

document.getElementById("trainScoreResult").innerText=trainScore

document.getElementById("testScoreResult").innerText=testScore

}

})

}else{

alert("請輸入完成訓練參數!")

}

}

</script>

</html>

在“模型選擇”區域需要先點擊“加載模型”按鈕,將fittedModels文件中保存的訓練好的模型展示出來,以供選擇用於詞性標注的模型。

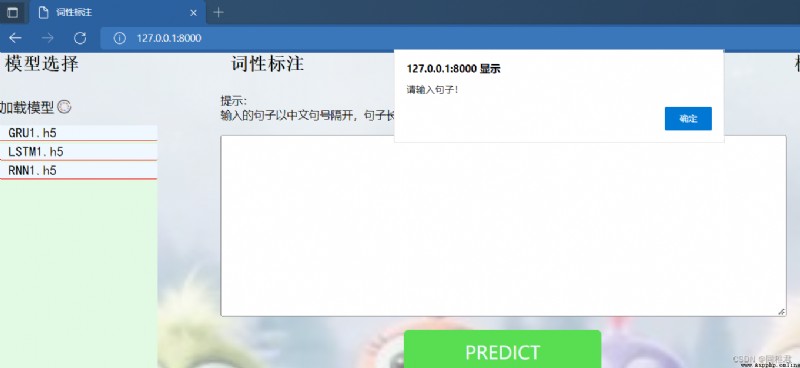

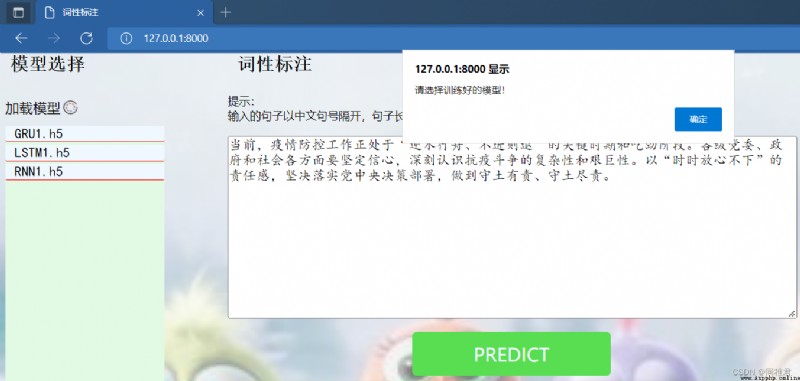

在中間“詞性標注”區域的輸入文本框中,輸入需要詞性標注的句子,在選擇好模型後,點擊“PREDICT”按鈕,即可將輸入句子以及模型名稱傳給後端,後端封裝好的詞性標注模型部分程序會調用用戶指定的模型來對用戶輸入的句子進行詞性標注,並將標注結果傳給前端,最終顯示在“PREDICT”按鈕下面的空白區域內。若用戶為輸入句子或未選中模型,當用戶點擊“PREDICT”按鈕時就會彈出對應提示。

選擇GRU1模型以及輸入完要標注的句子後,點擊“PREDICT”按鈕,標注結果如下:

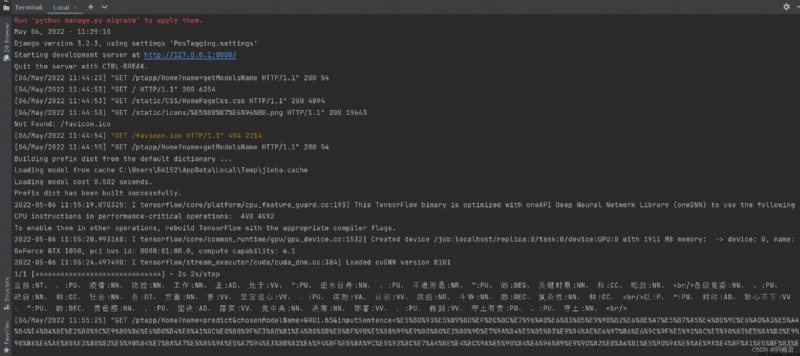

後端運行過程如下:

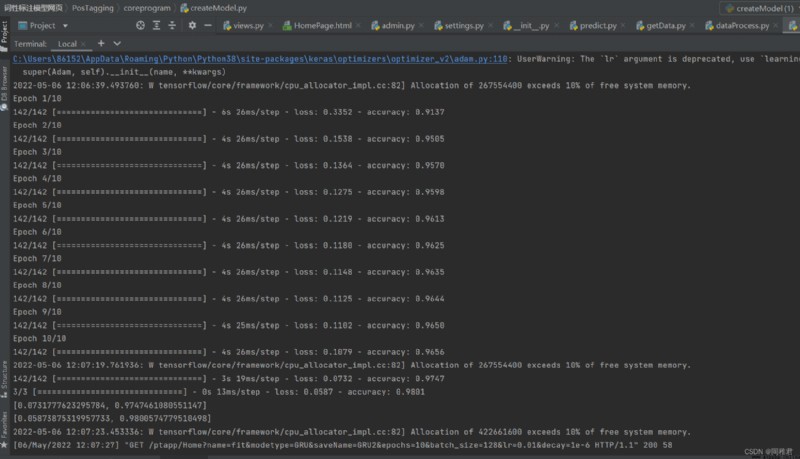

當前已有訓練好的LSTM1.h5、GRU1.h5、RNN1.h5模型,再訓練GRU2.h5模型的過程如下:

在“模型訓練”部分的文本框中對應地輸入各個參數,然後點擊“FIT”按鈕,即可開始訓練。

訓練完成後,點擊“加載模型”,即可將新訓練的模型顯示出來。

後端運行過程如下:

詞性標注模型網站項目——《PythonDjang搭建自動詞性標注網站》的實現-自然語言處理文檔類資源-CSDN下載

注意:需要在 “詞性標注模型網站項目\PosTagging\coreprogram\data”中將sgns.wiki.word.bz2解壓。

Django (II) exquisite blog construction (13) realize the message page and message function

Django (II) exquisite blog construction (13) realize the message page and message function

Preface This chapter focuses

[Python monitoring CPU] a super healing runcat monitoring application system has been launched ~ its very popular, and its appearance is full

[Python monitoring CPU] a super healing runcat monitoring application system has been launched ~ its very popular, and its appearance is full

Introduction hello ! Im kimik