This project uses the SIFT algorithm to stitch two images together.

In a Python terminal window, run the following command to generate the dependency file:

    pip freeze > requirement.txt

The resulting dependency file looks like this:

    numpy==1.22.4
    opencv-contrib-python==4.6.0.66
    opencv-python==4.6.0.66

For details on how to use it, see my article: "Using requirement.txt in Python projects to improve project migration efficiency".

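Note: why is the additional opencv-contrib-python library needed? SIFT was a patented algorithm, so in older OpenCV releases it is only available through this package, and calling SIFT without it raises an error. (Since OpenCV 4.4.0, SIFT has also been included in the main module.) In general, it is best to install this library if you use SIFT.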
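To see which SIFT entry point an installed build exposes, a small check like the one below may help. It is only a sketch: the helper name `sift_entry_point` is hypothetical, and it merely probes for attributes rather than running the detector.

```python
import importlib.util


def sift_entry_point():
    """Return the name of the SIFT factory exposed by the installed cv2, or None.

    Hypothetical helper for illustration; it only inspects attributes.
    """
    if importlib.util.find_spec("cv2") is None:
        return None  # OpenCV is not installed at all
    try:
        import cv2
    except ImportError:
        return None  # installed, but it cannot be loaded in this environment
    if hasattr(cv2, "SIFT_create"):
        return "cv2.SIFT_create"  # main module (OpenCV 4.4.0 and later)
    contrib = getattr(cv2, "xfeatures2d", None)
    if contrib is not None and hasattr(contrib, "SIFT_create"):
        return "cv2.xfeatures2d.SIFT_create"  # contrib module
    return None


print(sift_entry_point())
```

If this prints `None`, SIFT is not reachable in the current environment and the dependencies listed above should be checked.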

To make the algorithm logic easier to reuse and debug, I wrapped the common methods in a ConPic class. The main calling script first instantiates the class and then calls its methods.

    import cv2
    import numpy as np


    class ConPic:

        def cv_show(self, name, image):
            # Show the image in a window and wait for a key press
            cv2.imshow(name, image)
            cv2.waitKey(0)
            cv2.destroyAllWindows()

        # Detect feature points with SIFT
        def detect(self, image):
            # Create the SIFT detector (in the main module since OpenCV 4.4.0;
            # older contrib builds expose it as cv2.xfeatures2d.SIFT_create)
            descriptor = cv2.SIFT_create()
            # Detect the SIFT keypoints and compute their descriptors
            (kps, features) = descriptor.detectAndCompute(image, None)
            # Convert the keypoint coordinates to a NumPy array
            kps = np.float32([kp.pt for kp in kps])  # list comprehension
            # Return the keypoint coordinates and the corresponding descriptors
            return (kps, features)

        # Feature point matching
        def Keypoints(self, kpsA, kpsB, featuresA, featuresB, ratio=0.75, reprojThresh=4.0):
            # Build a brute-force matcher
            matcher = cv2.BFMatcher()
            # Use KNN to match the SIFT descriptors of images A and B, with K=2
            rawMatches = matcher.knnMatch(featuresA, featuresB, 2)
            matches = []
            for m in rawMatches:
                # Keep the match when the nearest distance is less than
                # ratio times the second-nearest distance
                if len(m) == 2 and m[0].distance < m[1].distance * ratio:
                    # Store the indices of the two points in featuresB and featuresA
                    matches.append((m[0].trainIdx, m[0].queryIdx))
            # With more than 4 filtered matches, compute the perspective transformation matrix
            if len(matches) > 4:
                # Get the point coordinates of the matched pairs
                ptsA = np.float32([kpsA[i] for (_, i) in matches])
                ptsB = np.float32([kpsB[i] for (i, _) in matches])
                # Compute the perspective transformation matrix with RANSAC
                (H, status) = cv2.findHomography(ptsA, ptsB, cv2.RANSAC, reprojThresh)
                # Return the results
                return (matches, H, status)
            # With 4 or fewer matches, return None
            return None

        # Draw the matches between the two images
        def Matches(self, imageA, imageB, kpsA, kpsB, matches, status):
            # Initialize the visualization image with A and B side by side
            (hA, wA) = imageA.shape[:2]
            (hB, wB) = imageB.shape[:2]
            vis = np.zeros((max(hA, hB), wA + wB, 3), dtype="uint8")
            vis[0:hA, 0:wA] = imageA
            vis[0:hB, wA:] = imageB
            # Iterate over the matches together with their status and draw them

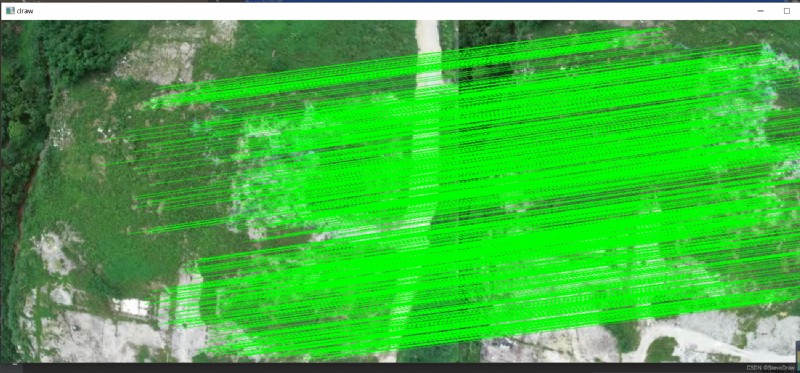

            for ((trainIdx, queryIdx), s) in zip(matches, status):
                # Draw only the point pairs that matched successfully
                if s == 1:
                    # Draw the match line
                    ptA = (int(kpsA[queryIdx][0]), int(kpsA[queryIdx][1]))
                    ptB = (int(kpsB[trainIdx][0]) + wA, int(kpsB[trainIdx][1]))
                    cv2.line(vis, ptA, ptB, (0, 255, 0), 1)
            self.cv_show("draw", vis)
            # Return the visualization
            return vis

        # Stitching
        def stitch(self, imageA, imageB, ratio=0.75, reprojThresh=4.0, showMatches=False):
            # Detect the SIFT keypoints of images A and B and compute their descriptors
            (kpsA, featuresA) = self.detect(imageA)
            (kpsB, featuresB) = self.detect(imageB)
            # Match all the feature points of the two images and return the result
            M = self.Keypoints(kpsA, kpsB, featuresA, featuresB, ratio, reprojThresh)
            # If the result is None, no feature points matched; stop here
            if M is None:
                return None
            # Otherwise, unpack the matching result
            # H is the 3x3 perspective transformation matrix
            (matches, H, status) = M

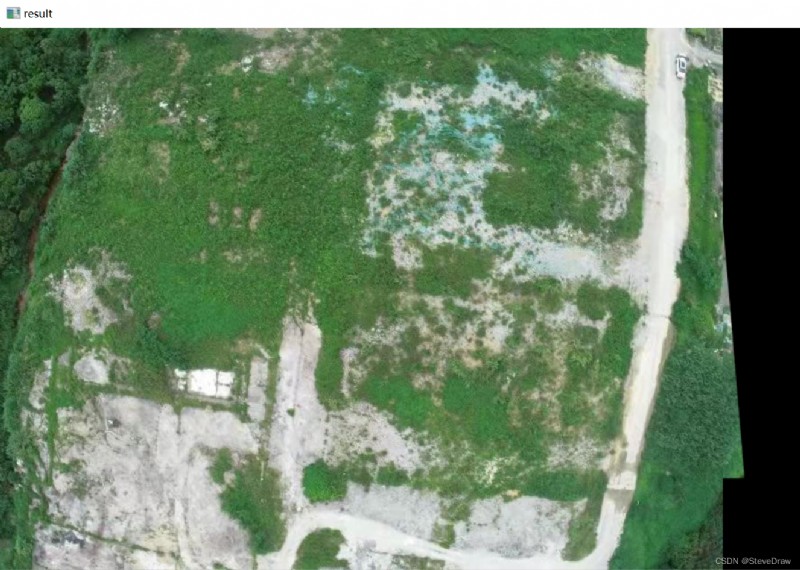

            # Warp image A into the view of image B; result is the transformed image
            result = cv2.warpPerspective(imageA, H, (imageA.shape[1] + imageB.shape[1], imageA.shape[0]))
            self.cv_show('result', result)
            # Paste image B into the leftmost part of result
            result[0:imageB.shape[0], 0:imageB.shape[1]] = imageB
            self.cv_show('result', result)
            # Check whether the matches should be visualized
            if showMatches:
                # Generate the match visualization (the method is named Matches, not drawMatches)
                vis = self.Matches(imageA, imageB, kpsA, kpsB, matches, status)
                # Return both results
                return (result, vis)
            # Return the stitched result
            return result
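The ratio filter inside `Keypoints` (commonly known as Lowe's ratio test) can be tried in isolation, without OpenCV. The sketch below is only an illustration: the function name `ratio_test` and the distance values are made up, and each tuple stands in for the two distances that `knnMatch` returns for one descriptor.

```python
def ratio_test(knn_distances, ratio=0.75):
    """Return the indices of the entries that pass the ratio test.

    knn_distances: list of (nearest, second_nearest) distance tuples.
    This is a hypothetical stand-in for the DMatch pairs returned by knnMatch.
    """
    kept = []
    for idx, pair in enumerate(knn_distances):
        # Keep the match only if the best candidate is clearly better than the runner-up
        if len(pair) == 2 and pair[0] < pair[1] * ratio:
            kept.append(idx)
    return kept


# Made-up distances: entries 0 and 3 are distinctive, entries 1 and 2 are ambiguous
distances = [(10.0, 40.0), (30.0, 35.0), (5.0, 6.0), (12.0, 20.0)]
print(ratio_test(distances))  # [0, 3]
```

A smaller `ratio` keeps fewer but more reliable matches; 0.75 is the default used by the class.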

The main calling script is as follows:

    import cv2
    from ConPic import ConPic

    pic = ConPic()
    # Read the images
    imageA = cv2.imread('D:/W-File/1.jpg')
    pic.cv_show("imageA", imageA)
    imageB = cv2.imread('D:/W-File/2.jpg')
    pic.cv_show("imageB", imageB)
    # Compute the SIFT feature points and feature vectors
    (kpsA, featuresA) = pic.detect(imageA)
    (kpsB, featuresB) = pic.detect(imageB)
    # Estimate the homography matrix from nearest-neighbor matching and RANSAC
    (matches, H, status) = pic.Keypoints(kpsA, kpsB, featuresA, featuresB)
    print("Homography matrix:", H)
    # Draw the matching results
    pic.Matches(imageA, imageB, kpsA, kpsB, matches, status)
    # Stitch the images
    pic.stitch(imageA, imageB)

Running the script prints the homography matrix:

    Homography matrix: [[ 9.30067818e-01 -7.68408000e-02  3.15693821e+01]
     [ 7.03517944e-03  8.84141290e-01 -9.47559365e+01]
     [ 2.27565291e-06 -1.94163643e-04  1.00000000e+00]]
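To make the printed matrix more concrete, the sketch below applies it to a single pixel coordinate by hand. The helper name `apply_homography` is hypothetical. Because `findHomography` is called with the points of image A first, the matrix maps coordinates in image A to coordinates in image B.

```python
# Homography matrix copied from the output above
H = [
    [9.30067818e-01, -7.68408000e-02, 3.15693821e+01],
    [7.03517944e-03, 8.84141290e-01, -9.47559365e+01],
    [2.27565291e-06, -1.94163643e-04, 1.00000000e+00],
]


def apply_homography(H, x, y):
    """Map the point (x, y) through the 3x3 matrix H using homogeneous coordinates."""
    xh = H[0][0] * x + H[0][1] * y + H[0][2]
    yh = H[1][0] * x + H[1][1] * y + H[1][2]
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    # Divide by w to return from homogeneous to pixel coordinates
    return (xh / w, yh / w)


# The top-left corner of image A lands at roughly (31.57, -94.76) in image B's frame
print(apply_homography(H, 0, 0))
```

The negative y value means that this corner falls above the top edge of the output canvas, so that part of image A is cropped by `warpPerspective`.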

Finally, if there are any shortcomings in this article, criticism and corrections are welcome!