Once Celery is integrated, we can use its asynchronous tasks and scheduled tasks to handle logic in a Django project, for example sending mail asynchronously or clearing the cache on a schedule. On my blog, once the configuration is done, I use Celery to crawl the Douban movie lists, with a controllable schedule type (interval, timed, or clocked).

Integrating Celery into Django makes it easy to handle any site logic that needs to run asynchronously. Note that django-celery can no longer be used with the latest Celery 4.x.

Create a new Django project named celeryproject in PyCharm.

Create a celery.py file in the celeryproject/celeryproject directory with the following contents:

from __future__ import absolute_import, unicode_literals

import os

from celery import Celery

# Point Celery at the project's default Django settings module
os.environ.setdefault('DJANGO_SETTINGS_MODULE', 'celeryproject.settings')

app = Celery('celeryproject')

# namespace='CELERY' means every Celery-related configuration key must carry the 'CELERY_' prefix
app.config_from_object('django.conf:settings', namespace='CELERY')

# If all tasks are defined in separate tasks.py modules, this line lets Celery discover them automatically
app.autodiscover_tasks()

Add the following to __init__.py in the celeryproject/celeryproject directory:

from __future__ import absolute_import, unicode_literals

from .celery import app as celery_app

__all__ = ['celery_app']

Add the following to settings.py in the celeryproject/celeryproject directory:

CELERY_BROKER_URL = 'redis://127.0.0.1:6379/0'

CELERY_RESULT_BACKEND = 'redis://127.0.0.1:6379/1'
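To make the effect of namespace='CELERY' more concrete, here is a small sketch of the naming convention only. It is not Celery's actual implementation, and the helper name celery_settings is made up for illustration. It shows how prefixed keys in settings.py line up with Celery's lowercase setting names such as broker_url and result_backend, while unprefixed Django settings are ignored.

```python
def celery_settings(settings, namespace="CELERY"):
    """Pick out the namespaced keys and map them to lowercase names.

    Hypothetical helper that only illustrates the naming convention.
    """
    prefix = namespace + "_"
    return {
        key[len(prefix):].lower(): value
        for key, value in settings.items()
        if key.startswith(prefix)
    }


# A stand-in for the values defined in settings.py
django_settings = {
    "DEBUG": True,
    "CELERY_BROKER_URL": "redis://127.0.0.1:6379/0",
    "CELERY_RESULT_BACKEND": "redis://127.0.0.1:6379/1",
}

resolved = celery_settings(django_settings)
print(resolved)
```

In this sketch DEBUG is dropped because it lacks the prefix, which is the same reason a Celery option written without CELERY_ in settings.py would not be picked up.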

Run python manage.py startapp myapp to create a new app.

Create a tasks.py file in the celeryproject/myapp directory with the following contents:

import time

from celery import shared_task

@shared_task
def send_email():
    print("Simulate sending mail")
    # The delay simulates the time it takes to send mail
    time.sleep(10)
    return "Mail sent successfully"

Add the following to views.py in the myapp directory:

from django.http import HttpResponse

from myapp.tasks import send_email

def index(request):
    result = send_email.delay()
    return HttpResponse("ok!")

Configure the routes in urls.py in the celeryproject/celeryproject directory as follows:

from django.contrib import admin
from django.urls import path

from myapp import views

urlpatterns = [
    path('admin/', admin.site.urls),
    path('index/', views.index),
]

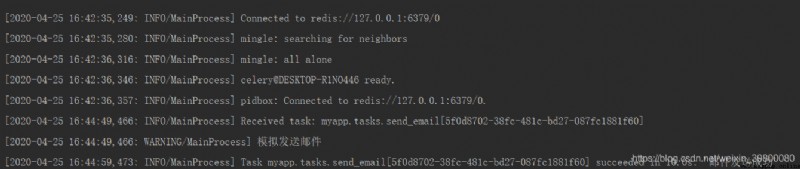

Open a terminal window and run celery worker -A celeryproject -l info -P eventlet in the project root directory. Output like the following means Celery has started normally:

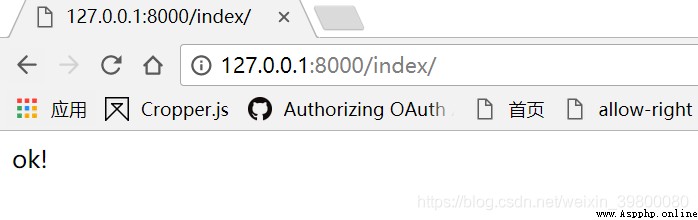

Run the Django project and visit http://127.0.0.1:8000/index/ in a browser.

Because the code that simulates sending mail waits for a while, the difference is easy to notice: the browser shows ok! right away, but back in the terminal window the "Mail sent successfully" message only appears some time later. This shows that the task really is processed asynchronously.
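The same behavior can be imitated with nothing but the standard library. The sketch below is an analogy, not Celery: a thread pool plays the part of the worker, and executor.submit() plays the part of send_email.delay(). The 0.2 second delay is an arbitrary value chosen to keep the demo short.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def send_email(delay=0.2):
    """Simulate a slow mail send."""
    time.sleep(delay)
    return "Mail sent successfully"


executor = ThreadPoolExecutor(max_workers=1)

start = time.monotonic()
future = executor.submit(send_email)      # returns at once, like delay()
submit_elapsed = time.monotonic() - start

result = future.result()                  # blocks until the work is done
total_elapsed = time.monotonic() - start
executor.shutdown()

print(f"submitted after {submit_elapsed:.3f}s, finished after {total_elapsed:.3f}s")
print(result)
```

The view in this post never waits for the result, which is why the browser gets its response immediately while the worker is still sleeping.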

pip install celery django-celery-results

INSTALLED_APPS = [
    ...
    'django_celery_results.apps.CeleryResultConfig',
]

CELERY_RESULT_BACKEND = 'django-db' # Store task results

python manage.py makemigrations

python manage.py migrate

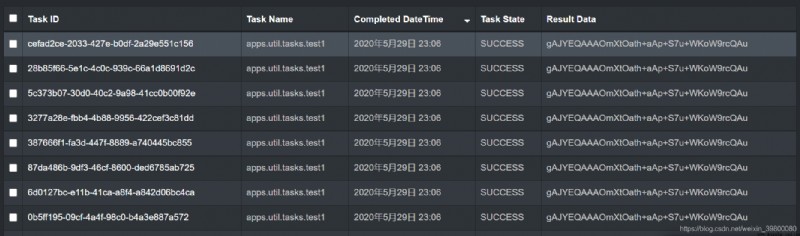

When the migration is complete, django_celery_results creates the django_celery_results_taskresult table. Whenever a task finishes, django_celery_results stores its result in this table. The table looks roughly as follows:

pip install django-celery-beat

INSTALLED_APPS = [
    ...
    'django_celery_results.apps.CeleryResultConfig',
    'django_celery_beat.apps.BeatConfig',
]

CELERY_TIMEZONE = TIME_ZONE # Keep the time zone consistent with the current project time zone

# CELERY_ENABLE_UTC=False

DJANGO_CELERY_BEAT_TZ_AWARE = False # Solve the time zone problem

CELERY_BROKER_URL = 'redis://127.0.0.1:6379/0'

# CELERY_RESULT_BACKEND = 'redis://127.0.0.1:6379/0'  # Store task results in Redis

CELERY_RESULT_BACKEND = 'django-db'  # Store task results in the Django database

CELERY_TASK_SERIALIZER = 'pickle'

CELERY_RESULT_SERIALIZER = 'pickle'

CELERY_ACCEPT_CONTENT = ['pickle', 'json']

CELERY_BEAT_SCHEDULER = 'django_celery_beat.schedulers:DatabaseScheduler'
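The settings above switch the serializers to pickle without saying why. One likely reason (my assumption, it is not stated here) is that pickle can carry Python objects that plain JSON cannot. The sketch below uses only the standard library json and pickle modules, not Celery's own serializers, and the payload is made up for illustration.

```python
import json
import pickle

# A made-up task payload containing a set and bytes,
# neither of which the standard json module can encode.
payload = {"tags": {"douban", "movies"}, "raw": b"\x00\x01"}

# pickle round-trips the payload unchanged
restored = pickle.loads(pickle.dumps(payload))

# json refuses it with a TypeError
try:
    json.dumps(payload)
    json_ok = True
except TypeError:
    json_ok = False

print(restored == payload, json_ok)
```

Keep in mind that unpickling data from an untrusted source can execute arbitrary code, so pickle only makes sense when the broker is not exposed to anyone else.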

python manage.py makemigrations

python manage.py migrate

When the migration is complete, django_celery_beat creates five tables (the first three are the more important ones).

Because we have also integrated django_celery_results, the results of tasks configured through the django_celery_beat admin pages are stored in the results table as well once the tasks finish.

Add the following to tasks.py in the Django app directory:

from __future__ import absolute_import

from celery import shared_task

@shared_task
def test1():
    print("Test interval task")
    return "Interval task ok"

@shared_task
def test2():
    print("Test timed task")
    return "Timed task ok"

@shared_task
def test3():
    print("Test clocked task")
    return "Clocked task ok"

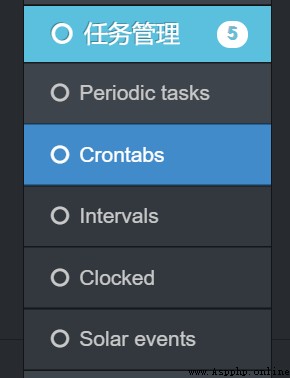

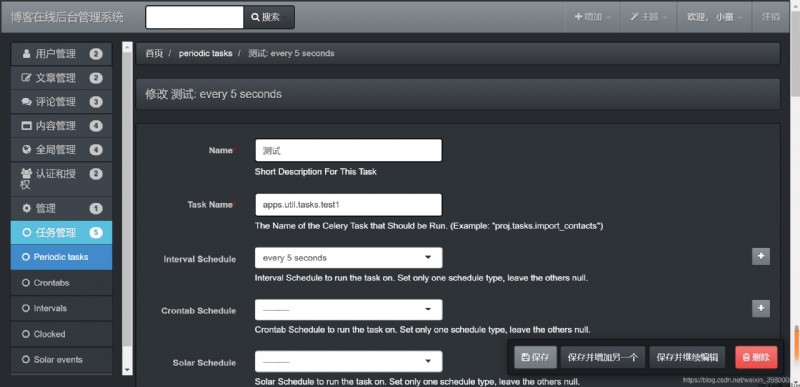

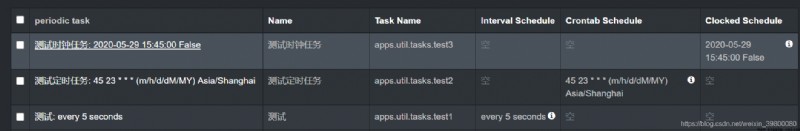

Start Django and open the admin backend.

I use xadmin here, and I have registered the django-celery-beat models with xadmin.

Start the Redis service locally (it must be running).

Run the command celery worker -A tasks -l info -P eventlet

You can see that the tasks have been recognized by Celery.
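If you would rather not click through the admin pages, Celery also accepts a schedule defined in settings.py. The fragment below is a minimal sketch. The entry name and the task path myapp.tasks.test1 are assumptions, so substitute the dotted path of your own task. As far as I know, the DatabaseScheduler from django-celery-beat copies entries from this setting into its tables when beat starts, but I have not verified that here.

```python
# settings.py fragment (sketch). With namespace='CELERY' this maps to
# Celery's beat_schedule setting.
CELERY_BEAT_SCHEDULE = {
    # Hypothetical entry name
    "test1-every-30-seconds": {
        # Assumed dotted path of the interval task shown in tasks.py
        "task": "myapp.tasks.test1",
        # A plain number is interpreted as an interval in seconds
        "schedule": 30.0,
    },
}
```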

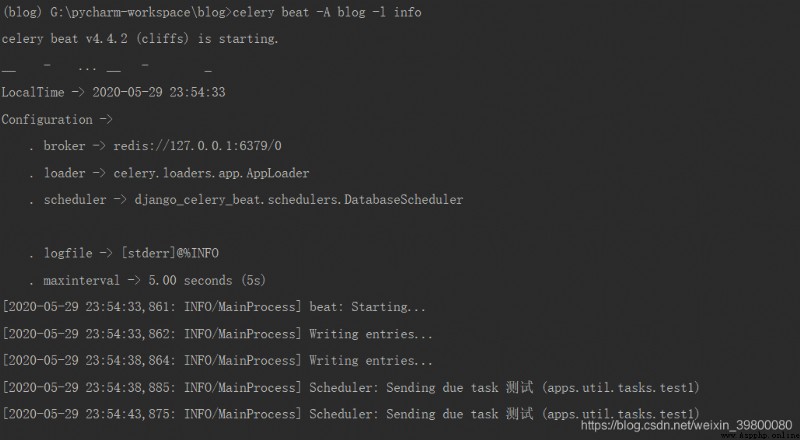

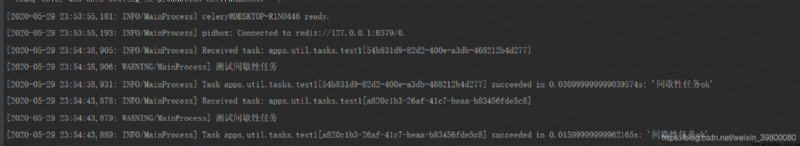

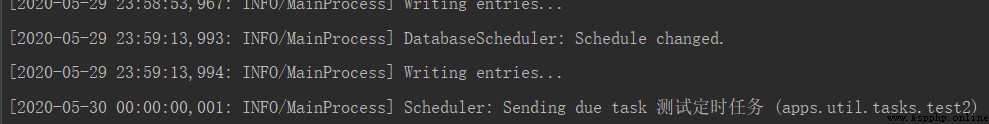

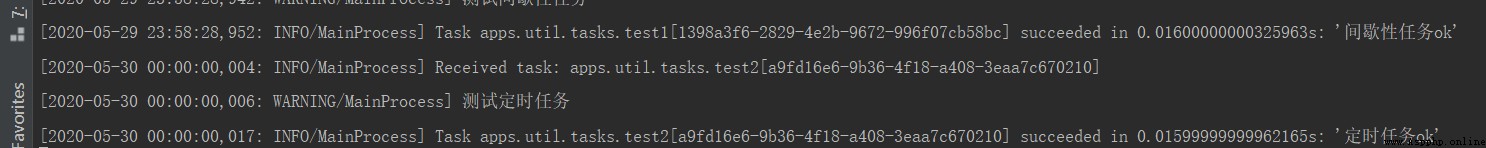

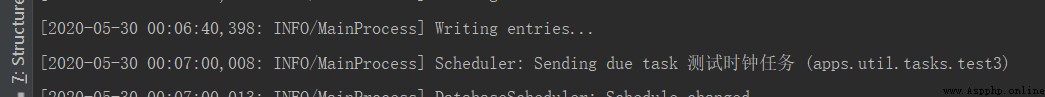

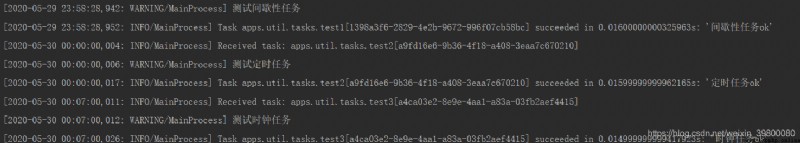

Run celery beat -A blog -l info. You can see that the interval task has started to be sent. Switch back to the worker's terminal and you will see the following:


Let's test this. I create a tasks.py file in one of the Django project's app directories with the following contents:

from celery import shared_task

@shared_task
def test3():
    print("Test whether tasks in tasks.py under a Django app are recognized")

Then I create another task in tests.py in the same app directory:

from celery import shared_task

@shared_task
def test2():
    print("Test whether tasks in tests.py under a Django app are recognized")

Run the celery command to see the task list:

celery worker -A blog -l info -P eventlet

The result is as follows:

[tasks]

. apps.util.tasks.test3

As shown above, Celery automatically discovers the tasks in tasks.py under a Django app directory, while tasks outside tasks.py are not recognized.

But I ran into something strange. Suppose the views.py of any app contains an import like this:

from apps.util.tests import test2

Run the celery command again to see the task list:

celery worker -A blog -l info -P eventlet

The result is as follows:

[tasks]

. apps.util.tasks.test3

. apps.util.tests.test2

Now Celery recognizes that task too. My guess is that the import effectively does the same job as Celery's CELERY_IMPORTS setting. To check, remove the import above and add the following to the project's settings.py:

CELERY_IMPORTS = ["apps.util.tests"]

Run the celery command again to see the task list:

celery worker -A blog -l info -P eventlet

The result is as follows:

[tasks]

. apps.util.tasks.test3

. apps.util.tests.test2

In other words, tasks do not have to live in tasks.py, and the task module does not have to be listed in the configuration file either. Importing the module somewhere in the project is enough.
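My reading of this behavior is that a task gets registered as a side effect of its module being imported, and autodiscovery simply imports each app's tasks module. The sketch below reproduces that idea with a toy registry. It is not Celery's real code, and the names demo_registry and demo_app are invented for the demo. The related_name default mirrors the parameter of the same name on autodiscover_tasks().

```python
import importlib
import sys
import tempfile
from pathlib import Path

# Hypothetical stand-in for a task registry; this is not Celery's real code.
REGISTRY_SOURCE = '''
TASKS = {}

def shared_task(func):
    # Registration happens as a side effect of importing the module.
    TASKS[func.__module__ + "." + func.__name__] = func
    return func
'''

TASK_MODULE = '''
from demo_registry import shared_task

@shared_task
def {name}():
    return "{name} ran"
'''


def autodiscover(packages, related_name="tasks"):
    """Import <package>.<related_name> for each package, skipping misses."""
    for package in packages:
        try:
            importlib.import_module(package + "." + related_name)
        except ModuleNotFoundError:
            pass


with tempfile.TemporaryDirectory() as tmp:
    # Build a throwaway app containing both tasks.py and tests.py
    root = Path(tmp)
    (root / "demo_registry.py").write_text(REGISTRY_SOURCE)
    app_dir = root / "demo_app"
    app_dir.mkdir()
    (app_dir / "__init__.py").write_text("")
    (app_dir / "tasks.py").write_text(TASK_MODULE.format(name="test3"))
    (app_dir / "tests.py").write_text(TASK_MODULE.format(name="test2"))

    sys.path.insert(0, tmp)
    importlib.invalidate_caches()
    try:
        registry = importlib.import_module("demo_registry")

        # Only demo_app.tasks is imported here
        autodiscover(["demo_app"])
        after_autodiscover = sorted(registry.TASKS)

        # Importing tests.py anywhere registers its task as well
        importlib.import_module("demo_app.tests")
        after_import = sorted(registry.TASKS)
    finally:
        sys.path.remove(tmp)

print(after_autodiscover)
print(after_import)
```

In this toy version the task in tests.py only shows up after something imports that module, which matches what the import in views.py and the CELERY_IMPORTS setting each achieved above.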