About the author: high-quality Python content creator, Huawei Cloud sharing expert, Alibaba Cloud expert blogger, and Top 6 CSDN Blog Star of 2021.

- This article is part of the Python full-stack series column "Master Python in 100 Days: From Beginner to Employment".

- The column is a complete course prepared for absolute beginners. It progresses step by step from 0 to 100, and every topic links to the next.

- Subscribe to the column to read all 100 articles of "Python: From Beginner to Employment". You can also message me privately to join the 200-member Python full-stack discussion group (hands-on teaching and problem solving). Group members receive 80 GB of Python full-stack tutorial videos plus 300 computer books covering basics, Web, crawlers, data analysis, visualization, machine learning, deep learning, artificial intelligence, algorithms, interview questions, and more.

- Join me to learn and improve together. One person can walk fast, but a group can go further!

For individuals:

When you come across stunning pictures, you want to save them to use as desktop wallpaper later.

When you browse important data (from any industry), you want to keep it to strengthen your future sales work. When you come across odd but impressive videos, you want to save them to disk to enjoy later.

When you hear great songs, you want to keep them to brighten an otherwise dull life.

For crawler engineers:

Company data requirements

Data analysis

Training data for smart products

Do crawlers have to be written in Python? No. Java works, and so does C. Remember that a programming language is just a tool. Capturing data is the goal, and any tool that gets you there is fine. It is like eating: you can use a fork or chopsticks, and either way you get fed. So why do most people prefer Python? Because writing crawlers in Python is simple. Still not convinced? Then ask yourself why you eat rice with chopsticks rather than a knife and fork: because chopsticks are easier!

Among the many programming languages, Python is the quickest for beginners to pick up and has the simplest syntax. More importantly, it has a large number of third-party libraries that crawlers can use. Put plainly, not only do you get to eat with chopsticks, you also get a servant to help feed you. Isn't eating that way even easier?

First, crawlers are not prohibited by law. In other words, the law allows crawlers to exist. However, crawlers can also be used to break the law. It is like a kitchen knife: the law allows kitchen knives to exist, but if you use one to hurt someone, nobody will let you off. As Wang Xin said, technology is innocent; what matters is what you do with it. For example, combining a crawler with hacking techniques to hit a site 18,000 times every second is definitely not allowed.

Crawlers are divided into benign crawlers and malicious crawlers.

In summary, to stay out of jail we must play by the rules: keep optimizing our crawlers so they do not interfere with the normal operation of the website, and when we find that crawled data involves sensitive content such as user privacy or trade secrets, stop crawling and stop spreading it immediately.

Anti-crawling mechanism: a portal website can adopt strategies or technical measures to prevent crawlers from crawling its data.

Anti-anti-crawling strategy: a crawler can adopt strategies or technical measures to defeat the anti-crawling mechanism of a portal website and thereby obtain its data. Personal advice: do not force your way past anti-crawling measures, because doing so may already count as malicious crawling.

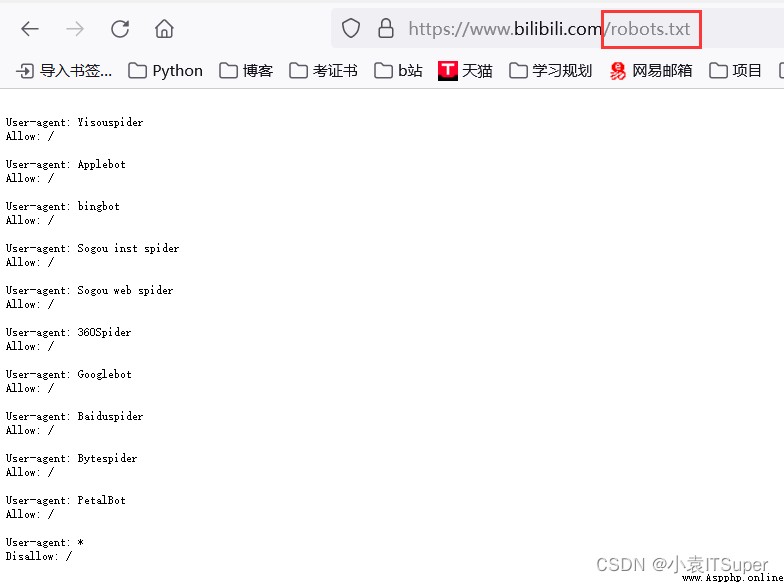

robots.txt protocol: a gentleman's agreement that specifies which data on a website may be crawled and which may not.

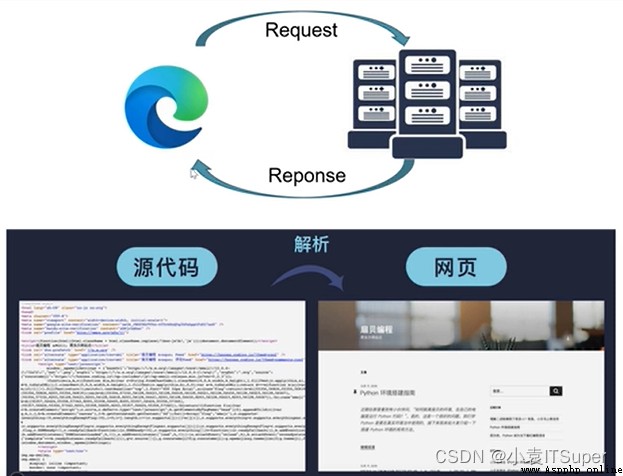

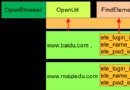
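To make the robots.txt agreement concrete, here is a minimal sketch using Python's standard `urllib.robotparser`. The robots.txt content, the crawler name `my-crawler`, and the `example.com` URLs are all made up for illustration; a real crawler would load the site's actual robots.txt instead.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, invented for this example.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Allow: /
"""


def build_parser(robots_text: str) -> RobotFileParser:
    """Parse robots.txt text without touching the network."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return parser


parser = build_parser(ROBOTS_TXT)

# Ask whether our (hypothetical) crawler may fetch each URL.
can_public = parser.can_fetch("my-crawler", "https://example.com/index.html")
can_private = parser.can_fetch("my-crawler", "https://example.com/private/data.html")

print("public page allowed:", can_public)
print("private page allowed:", can_private)
```

A polite crawler would run a check like this before every request and skip any URL for which `can_fetch` returns `False`.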

Schematic diagram of a crawler:

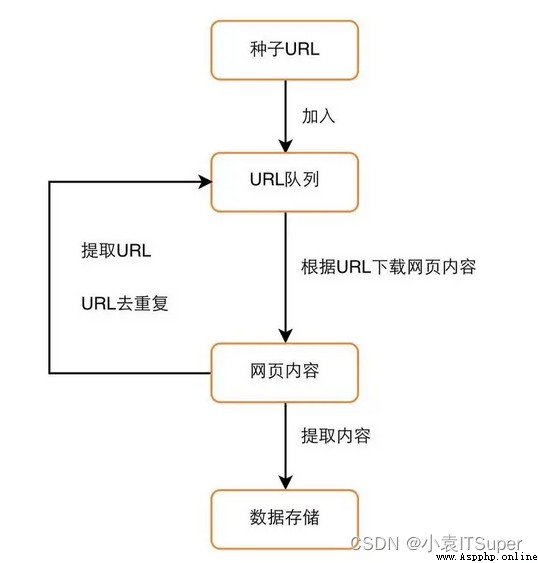

Crawler flow chart:

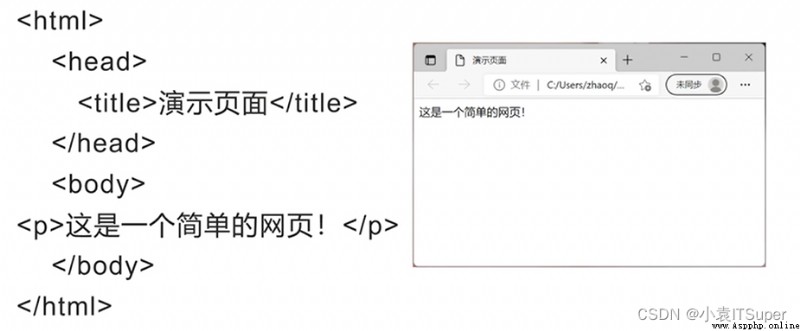

(1) HTML stands for "HyperText Markup Language". Unlike a programming language, it has no logic constructs. It builds web pages out of markup, and each tag is enclosed in angle brackets `<>`.

Common HTML tags are as follows:

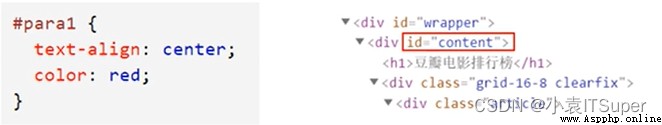

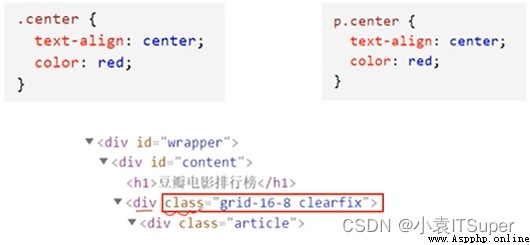

- `<p>`: paragraph
- `<a>`: hyperlink
- `href`: hyperlink address
- `<img>`: picture
- `src`: picture storage path
- `<span>`: inline tag
- `<li>`: list item
- `<div>`: divides the HTML into blocks
- `<table>`: table
- `<tr>`: table row
- `<td>`: table cell (column)
- `h1`~`h6`: headings

(2) CSS Basics

Style definitions are enclosed in curly braces `{}`. CSS selectors:

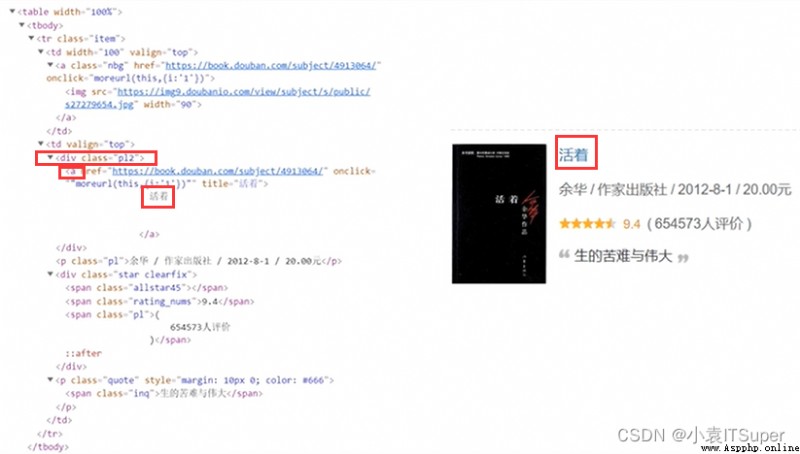

Example: for a div with class='p12', take the text of the a tag beneath it.
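The selection described above can be sketched with the standard library's `html.parser`. This is only an illustration under assumptions: the HTML snippet and the `LinkTextParser` class are made up, and a real project would more likely use a CSS selector library than a hand-written parser.

```python
from html.parser import HTMLParser

# Hypothetical page fragment, invented for this example.
SAMPLE_HTML = """
<div class="p12">
  <a href="https://example.com/tiger">Tiger</a>
  <span>ignored</span>
</div>
<div class="other"><a href="https://example.com/cat">Cat</a></div>
"""


class LinkTextParser(HTMLParser):
    """Collect (href, text) for every <a> inside <div class="p12">."""

    def __init__(self):
        super().__init__()
        self.div_depth = 0       # > 0 while inside the target div
        self.in_link = False     # True while inside a matching <a>
        self.current_href = None
        self.text_parts = []
        self.links = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "div":
            if self.div_depth > 0:
                self.div_depth += 1          # nested div inside the target
            elif "p12" in (attrs.get("class") or "").split():
                self.div_depth = 1           # entered the target div
        elif tag == "a" and self.div_depth > 0:
            self.in_link = True
            self.current_href = attrs.get("href")
            self.text_parts = []

    def handle_data(self, data):
        if self.in_link:
            self.text_parts.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self.in_link:
            text = "".join(self.text_parts).strip()
            self.links.append((self.current_href, text))
            self.in_link = False
        elif tag == "div" and self.div_depth > 0:
            self.div_depth -= 1


link_parser = LinkTextParser()
link_parser.feed(SAMPLE_HTML)
links = link_parser.links
print(links)
```

Only the link inside the `p12` div is collected; the link in the other div and the `<span>` text are skipped.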

Example website: https://baike.baidu.com/item/%E8%99%8E/865?fromtitle=%E8%80%81%E8%99%8E&fromid=65781

URL (web address) is short for Uniform Resource Locator. A URL consists of the following parts:

- Protocol: `https`
- Host (domain name): `baike.baidu.com`
- Path: `/item/%E8%99%8E/865`
- Query string: everything after the `?`, for example `fromtitle=%E8%80%81%E8%99%8E&fromid=65781`. It takes the form of key-value pairs, with multiple pairs separated by `&`.
- Anchor: whatever follows the `#`. The page requests data according to the anchor, for example https://music.163.com/#/friend

HTTP protocol: the full name is HyperText Transfer Protocol. It is a method for publishing and receiving HTML (HyperText Markup Language) pages. The server port number is 80.

HTTPS protocol: the full name is Hyper Text Transfer Protocol over Secure Socket Layer. It is the encrypted version of HTTP, adding an SSL layer beneath HTTP. The server port number is 443.
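The URL breakdown above can be checked against the example address with the standard `urllib.parse` module. This is a small sketch; the variable names are chosen here for illustration.

```python
from urllib.parse import parse_qs, urlsplit

url = (
    "https://baike.baidu.com/item/%E8%99%8E/865"
    "?fromtitle=%E8%80%81%E8%99%8E&fromid=65781"
)

parts = urlsplit(url)
print("protocol:", parts.scheme)
print("host:    ", parts.netloc)
print("path:    ", parts.path)
print("query:   ", parts.query)

# parse_qs splits the query string on "&" and percent-decodes each value.
query = parse_qs(parts.query)
print("pairs:   ", query)

# The anchor is whatever follows "#".
anchor = urlsplit("https://music.163.com/#/friend").fragment
print("anchor:  ", anchor)
```

Note that `parse_qs` returns a list for every key, because the same key may appear more than once in a query string.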

Further reading: Graphic network protocol

The HTTP protocol requires the browser to choose a request method when it exchanges data with the server. HTTP defines eight request methods; the most common are GET and POST.

GET request: generally used when you only fetch data from the server and do not change any server resources.

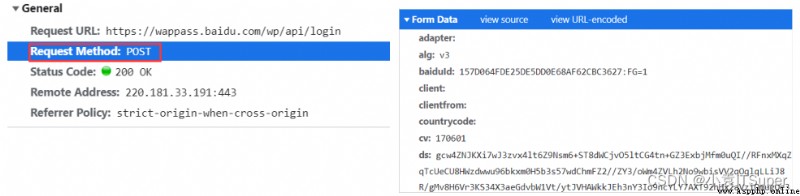

POST request: used when sending data to the server (logging in), uploading files, and other operations that affect server resources. The request parameters are carried in Form Data.
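As a rough illustration of the difference, the sketch below builds one GET and one POST request with the standard `urllib.request` module without actually sending them. The `example.com` URLs and the form fields are placeholders, not part of the original article.

```python
from urllib.parse import urlencode
from urllib.request import Request

# GET: parameters travel in the URL's query string.
get_request = Request("https://example.com/search?" + urlencode({"q": "tiger"}))

# POST: parameters travel in the request body as form data.
form_data = urlencode({"user": "alice", "password": "secret"}).encode("utf-8")
post_request = Request("https://example.com/login", data=form_data)

# urllib picks the method from whether a body is present.
print(get_request.get_method(), get_request.full_url)
print(post_request.get_method(), post_request.full_url, post_request.data)
```

Passing either object to `urllib.request.urlopen` would send it over the network; that step is left out here so the example runs offline.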

In the HTTP protocol, the data in a request sent to the server is divided into three parts.

Common request header parameters:

- user-agent: the browser name.
- referer: indicates which URL the current request came from.
- cookie: the HTTP protocol is stateless, so when the same person sends two requests, the server cannot tell whether they come from the same person. Sending a cookie lets the server identify a logged-in user or recognize that two requests come from the same client.

To view these in Google Chrome: right-click the page, then choose Inspect.

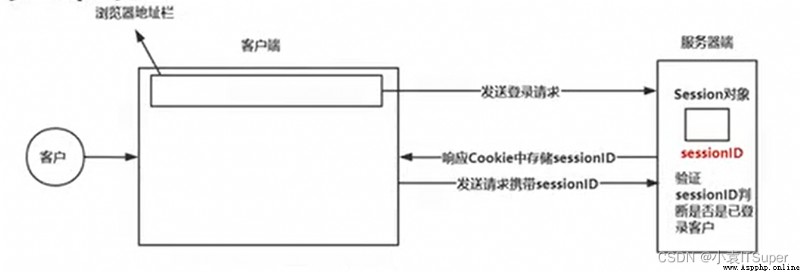
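A crawler usually sets these headers itself so that its requests resemble those of a browser. The following is a minimal sketch using `urllib.request.Request`; the header values and the `example.com` URL are invented for illustration, and nothing is sent over the network.

```python
from urllib.request import Request

# Hypothetical header values, invented for this example.
headers = {
    "User-Agent": "Mozilla/5.0 (example crawler)",
    "Referer": "https://example.com/",
    "Cookie": "session_id=abc123",
}

request = Request("https://example.com/profile", headers=headers)

# urllib stores header names capitalized, e.g. "User-agent".
for name, value in request.header_items():
    print(f"{name}: {value}")
```

Because of that capitalization, look the header up as `request.get_header("User-agent")` rather than `"User-Agent"`.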

Session and Cookie are techniques for maintaining state across HTTP requests over the long term.

Session:

Cookie: generated by the server and sent to the client (usually a browser). A cookie is always stored on the client.

Cookie The basic principle of :

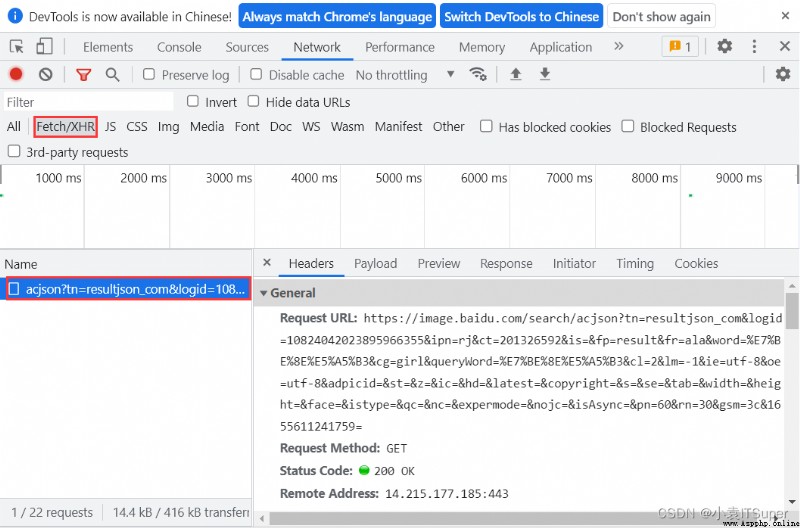
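The general cookie round trip (the server issues a cookie, the client stores it and sends it back) can be sketched with the standard `http.cookies` module. The cookie name `session_id` and its value are placeholders, and this is a simplified model rather than a full browser or server implementation.

```python
from http.cookies import SimpleCookie

# 1. Server side: generate a cookie for the Set-Cookie response header.
server_cookie = SimpleCookie()
server_cookie["session_id"] = "abc123"   # hypothetical session identifier
server_cookie["session_id"]["path"] = "/"
set_cookie_header = server_cookie["session_id"].OutputString()
print("Set-Cookie:", set_cookie_header)

# 2. Client side: store the cookie, then build the Cookie request header.
client_jar = SimpleCookie()
client_jar.load(set_cookie_header)
cookie_header = "; ".join(
    f"{name}={morsel.value}" for name, morsel in client_jar.items()
)
print("Cookie:", cookie_header)

# 3. Server side again: read the cookie back from the next request.
received = SimpleCookie()
received.load(cookie_header)
session_id = received["session_id"].value
print("server recognizes session:", session_id)
```

Since HTTP itself is stateless, it is this returned value that lets the server link the second request to the first.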

Ajax transfers data asynchronously between the browser and the web server. This lets a web page request a small amount of information from the server rather than the whole page.

Ajax is independent of browser and platform.

Ajax usually returns JSON. Send a POST or GET request directly to the Ajax address and you get JSON data back.

To judge whether data is generated by Ajax, watch whether the page refreshes when you scroll. If new data appears without a page refresh, it was loaded by Ajax and rendered into the page.
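Once a crawler has the JSON body from an Ajax address, extracting fields is straightforward with the standard `json` module. In the sketch below the response body is a made-up payload; real Ajax endpoints each define their own structure, so the key names here are assumptions.

```python
import json

# Hypothetical Ajax response body, invented for this example.
ajax_response_body = """
{
  "code": 0,
  "data": {
    "items": [
      {"id": 1, "title": "first"},
      {"id": 2, "title": "second"}
    ],
    "has_more": true
  }
}
"""

payload = json.loads(ajax_response_body)

# Pull out just the fields the crawler cares about.
titles = [item["title"] for item in payload["data"]["items"]]
has_more = payload["data"]["has_more"]

print(titles)
print("more pages available:", has_more)
```

A flag such as `has_more` is a common way for scrolling pages to tell the client whether to request the next batch, though each site names it differently.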

If you find the blogger's updates too slow, you can practice problems on Niuke by yourself.

1. Programming beginners

Many novice programmers have learned the basic syntax but do not know what it is for, how to reinforce it, or how to improve. At this stage it is very important to solve one problem on your own every day. You can start with the programming beginner training on Niuke. This set is at the entry level and suits beginners who have just learned the syntax. The problems cover basic syntax, basic program structure, and so on. Each problem has a practice mode and an exam mode: exam mode simulates a real test, and practice mode lets you drill freely.

Link: Niuke | Programming beginner training

2. Advanced programmers

Once basic practice has given you a grip on the key points, move on to the special exercises to study data structures, algorithm fundamentals, and computer fundamentals. Start with the easy problems, then move to medium and harder ones as you gain confidence. These three areas are always tested in interviews, so the only way forward is to keep practicing every day, refuse to slack off, and keep improving until you land a job at a company you are happy with.

Link: Niuke | Special exercises

Let's speed up and aim for the big tech companies together. If you have questions, leave a comment and I will answer!
