// Let the program learn on its own when a carry is needed, and thereby learn binary addition

#include <iostream>

#include <cmath>

#include <cstdlib>

#include <ctime>

#include <vector>

#include <cassert>

using namespace std;

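#define innode 2 // number of input nodes: one bit from each of the two addends

#define hidenode 16 // number of hidden nodes, which hold the carry information

#define outnode 1 // number of output nodes: one predicted bit

#define alpha 0.1 // learning rate

#define binary_dim 8 // maximum length of the binary numbers, in bits

#define randval(high) ( (double)rand() / RAND_MAX * (high) )

#define uniform_plus_minus_one ( (double)( 2.0 * rand() ) / ((double)RAND_MAX + 1.0) - 1.0 ) // uniform random value in [-1, 1)

int largest_number = ( pow(2, binary_dim) ); // 2^binary_dim: exclusive upper bound on the values that fit in binary_dim bits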

// activation function

double sigmoid(double x)

{

return 1.0 / (1.0 + exp(-x));

}

// derivative of the activation function; y is the activation function's output value

double dsigmoid(double y)

{

return y * (1 - y);

}

// convert a decimal integer to binary, least-significant bit first (arr[0] is the 1s place)

void int2binary(int n, int *arr)

{

int i = 0;

while(n)

{

arr[i++] = n % 2;

n /= 2;

}

while(i < binary_dim)

arr[i++] = 0;

}

class RNN

{

public:

RNN();

virtual ~RNN();

void train();

public:

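double w[innode][hidenode]; // weight matrix connecting the input layer to the hidden layer

double w1[hidenode][outnode]; // weight matrix connecting the hidden layer to the output layer

double wh[hidenode][hidenode]; // weight matrix connecting the previous time step's hidden layer to the current one

double *layer_0; // layer 0 outputs, set directly from the input vector

//double *layer_1; // layer 1 outputs

double *layer_2; // layer 2 outputs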

};

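void winit(double w[], int n) // initialize weights
{
    for(int i=0; i<n; i++)
        w[i] = uniform_plus_minus_one; // uniform random value in [-1, 1)
}

RNN::RNN()
{
    layer_0 = new double[innode];
    layer_2 = new double[outnode];
    winit((double*)w, innode * hidenode);
    winit((double*)w1, hidenode * outnode);
    winit((double*)wh, hidenode * hidenode);
}

RNN::~RNN()
{
    delete[] layer_0;
    delete[] layer_2;
}

void RNN::train()
{
    int epoch, i, j, k, m, p;
    vector<double*> layer_1_vector; // hidden-layer activations saved for each time step
    vector<double> layer_2_delta; // partial derivative of the error with respect to the layer 2 output, per time step

    for(epoch=0; epoch<11000; epoch++) // number of training iterations
    {
        double e = 0.0; // accumulated error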

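        // release the hidden states saved during the previous iteration
        for(i=0; i<(int)layer_1_vector.size(); i++)
            delete[] layer_1_vector[i];
        layer_1_vector.clear();
        layer_2_delta.clear();

        int d[binary_dim] = {0}; // predicted bits for this sample

        int a_int = rand() % (largest_number / 2); // first random addend
        int a[binary_dim];
        int2binary(a_int, a);

        int b_int = rand() % (largest_number / 2); // second random addend
        int b[binary_dim];
        int2binary(b_int, b);

        int c_int = a_int + b_int; // true sum, always below largest_number
        int c[binary_dim];
        int2binary(c_int, c);

        // there is no earlier hidden layer at time 0, so start from all zeros
        double *layer_1 = new double[hidenode];
        for(i=0; i<hidenode; i++)
            layer_1[i] = 0;
        layer_1_vector.push_back(layer_1);

        // forward pass, starting from the least-significant bit
        for(p=0; p<binary_dim; p++)
        {
            layer_0[0] = a[p];
            layer_0[1] = b[p];
            double y = (double)c[p]; // target bit
            double *layer_1_pre = layer_1_vector.back(); // previous hidden layer
            layer_1 = new double[hidenode]; // current hidden layer

            for(j=0; j<hidenode; j++)
            {
                double o1 = 0.0;
                for(m=0; m<innode; m++) // input layer to hidden layer
                    o1 += layer_0[m] * w[m][j];
                for(m=0; m<hidenode; m++) // previous hidden layer to current hidden layer
                    o1 += layer_1_pre[m] * wh[m][j];
                layer_1[j] = sigmoid(o1);
            }

            for(k=0; k<outnode; k++) // hidden layer to output layer
            {
                double o2 = 0.0;
                for(j=0; j<hidenode; j++)
                    o2 += layer_1[j] * w1[j][k];
                layer_2[k] = sigmoid(o2);
            }

            d[p] = (int)floor(layer_2[0] + 0.5); // round the output to a predicted bit
            layer_1_vector.push_back(layer_1); // keep the hidden layer for the next step and for backpropagation
            layer_2_delta.push_back( (y - layer_2[0]) * dsigmoid(layer_2[0]) );
            e += fabs(y - layer_2[0]);
        }

        // backpropagation through time, starting from the most-significant bit
        double layer_1_delta[hidenode]; // hidden-layer error at the current time step
        double layer_1_future_delta[hidenode] = {0}; // hidden-layer error at the following time step

        for(p=binary_dim-1; p>=0 ; p--)
        {
            layer_0[0] = a[p];
            layer_0[1] = b[p];
            layer_1 = layer_1_vector[p+1]; // current hidden layer
            double *layer_1_pre = layer_1_vector[p]; // previous hidden layer

            // hidden-layer error: output error plus the error flowing back from the following time step
            for(j=0; j<hidenode; j++)
            {
                layer_1_delta[j] = 0.0;
                for(k=0; k<outnode; k++)
                    layer_1_delta[j] += layer_2_delta[p] * w1[j][k];
                for(k=0; k<hidenode; k++)
                    layer_1_delta[j] += layer_1_future_delta[k] * wh[j][k];
                layer_1_delta[j] *= dsigmoid(layer_1[j]);
            }

            // update the hidden-to-output weights
            for(k=0; k<outnode; k++)
                for(j=0; j<hidenode; j++)
                    w1[j][k] += alpha * layer_2_delta[p] * layer_1[j];

            for(j=0; j<hidenode; j++)
            {
                for(k=0; k<innode; k++) // update the input-to-hidden weights
                    w[k][j] += alpha * layer_1_delta[j] * layer_0[k];
                for(k=0; k<hidenode; k++) // update the hidden-to-hidden weights
                    wh[k][j] += alpha * layer_1_delta[j] * layer_1_pre[k];
                layer_1_future_delta[j] = layer_1_delta[j]; // becomes the "future" error for step p-1
            }
        }

        if(epoch % 1000 == 0)
        {
            cout << "error:" << e << endl;
            cout << "pred:" ;
            for(k=binary_dim-1; k>=0; k--)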

cout << d[k];

cout << endl;

cout << "true:" ;

for(k=binary_dim-1; k>=0; k--)

cout << c[k];

cout << endl;

int out = 0;

for(k=binary_dim-1; k>=0; k--)

out += d[k] * pow(2, k);

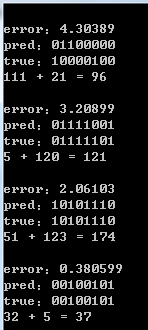

cout << a_int << " + " << b_int << " = " << out << endl << endl;

}

}

}

int main()

{

srand(time(NULL));

RNN rnn;

rnn.train();

return 0;

}
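
The listing stores every operand least-significant bit first, so the training loop walks the bit arrays in the same direction the carry travels. As a rough illustration of that encoding, and of the schoolbook carry rule the hidden layer has to approximate, here is a minimal standalone sketch. The names `kBits`, `to_bits`, `from_bits`, and `add_bits` are hypothetical helpers introduced only for this example; they are not part of the listing above.

```cpp
#include <array>

constexpr int kBits = 8; // assumed to match binary_dim in the listing

// Encode n least-significant bit first (index 0 holds the 1s place).
// Bits above kBits are silently dropped.
std::array<int, kBits> to_bits(int n) {
    std::array<int, kBits> bits{};
    for (int i = 0; i < kBits; ++i) {
        bits[i] = n % 2;
        n /= 2;
    }
    return bits;
}

// Decode an LSB-first bit array back into an integer.
int from_bits(const std::array<int, kBits>& bits) {
    int value = 0;
    for (int i = kBits - 1; i >= 0; --i)
        value = value * 2 + bits[i];
    return value;
}

// Schoolbook binary addition: the carry is the only state handed from
// one bit position to the next, which is the role the recurrent hidden
// layer plays in the network. A carry out of the top bit is discarded.
std::array<int, kBits> add_bits(const std::array<int, kBits>& a,
                                const std::array<int, kBits>& b) {
    std::array<int, kBits> sum{};
    int carry = 0;
    for (int i = 0; i < kBits; ++i) {
        int total = a[i] + b[i] + carry;
        sum[i] = total % 2;
        carry = total / 2;
    }
    return sum;
}
```

For instance, `from_bits(add_bits(to_bits(57), to_bits(70)))` evaluates to 127. Keeping both addends below 128 guarantees that the sum fits in the 8 bits used here.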

References:

http://blog.csdn.net/zzukun/article/details/49968129

http://www.cnblogs.com/wb-DarkHorse/archive/2012/12/12/2815393.html