Dimensionality reduction is a preprocessing technique for high-dimensional feature data. Common dimensionality reduction algorithms include singular value decomposition (SVD), principal component analysis (PCA), factor analysis (FA), and independent component analysis (ICA). This section focuses on PCA.

Principal component analysis (PCA) is the most commonly used linear dimensionality reduction method. It uses a linear projection to map high-dimensional data into a lower-dimensional space, choosing the projection directions along which the data carries the most information, that is, has the largest variance. The result uses fewer dimensions while retaining as much of the character of the original data points as possible. PCA is an unsupervised learning algorithm: fitting it does not involve the class labels.
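To make the "largest variance" idea concrete, here is a minimal NumPy sketch of what a PCA projection computes. It is an illustration under stated assumptions, not scikit-learn's actual implementation: the helper name `pca_project` and the toy data are made up for this example, and the directions are found by an eigendecomposition of the covariance matrix.

```python
import numpy as np

def pca_project(X, n_components):
    """Project X (n_samples, n_features) onto its top principal components.

    Hypothetical helper for illustration only.
    """
    # Center each feature so that variance is measured around the mean.
    X_centered = X - X.mean(axis=0)
    # Covariance matrix of the features, shape (n_features, n_features).
    cov = np.cov(X_centered, rowvar=False)
    # eigh handles symmetric matrices and returns eigenvalues in ascending order.
    eigvals, eigvecs = np.linalg.eigh(cov)
    # Keep the directions with the largest eigenvalues (largest variance).
    order = np.argsort(eigvals)[::-1][:n_components]
    components = eigvecs[:, order].T        # shape (n_components, n_features)
    explained_variance = eigvals[order]
    return X_centered @ components.T, components, explained_variance

# Toy data (made up): 200 points stretched unevenly along three axes.
rng = np.random.default_rng(0)
X_toy = rng.normal(size=(200, 3)) * np.array([5.0, 1.0, 0.2])

X_proj, components, explained_variance = pca_project(X_toy, n_components=2)
print(X_proj.shape)          # (200, 2)
print(explained_variance)    # variance captured by each kept direction
```

The variance of each projected column equals the corresponding eigenvalue, and the projected columns are uncorrelated with each other, which is why the first few components can summarize most of the spread in the data.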

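import matplotlib.pyplot as plt

from sklearn.datasets import load_wine

from sklearn.decomposition import PCA

from sklearn.preprocessing import StandardScaler

# Assumption: wine and X_scaled are the wine dataset and its standardized features; they are rebuilt here so that the code runs on its own

wine = load_wine()

X_scaled = StandardScaler().fit_transform(wine.data)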

# Set the number of principal components to 2 so that the result can be visualized

pca = PCA(n_components=2)

pca.fit(X_scaled)

X_pca = pca.transform(X_scaled)

print(X_pca.shape)

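# Split the projected samples by class label

X0 = X_pca[wine.target==0]

X1 = X_pca[wine.target==1]

X2 = X_pca[wine.target==2]

# Draw one scatter series per class so that the legend entries line up with wine.target_names

plt.scatter(X0[:,0],X0[:,1],c='b',s=60,edgecolor='k')

plt.scatter(X1[:,0],X1[:,1],c='g',s=60,edgecolor='k')

plt.scatter(X2[:,0],X2[:,1],c='r',s=60,edgecolor='k')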

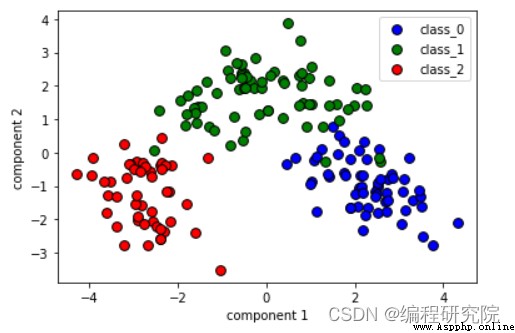

plt.legend(wine.target_names, loc='best')

plt.xlabel('component 1')

plt.ylabel('component 2')

plt.show()

The output is shown in the figure below:

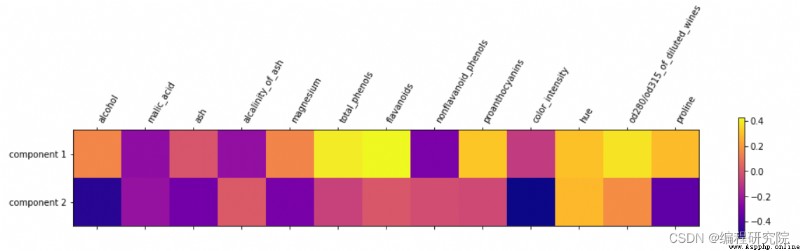
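One way to check how much information the two components actually retain is the `explained_variance_ratio_` attribute of a fitted `PCA` object. The sketch below assumes the same setup as above (the wine dataset standardized with `StandardScaler`); the 90% threshold and the variable name `pca_90` are arbitrary choices for illustration.

```python
from sklearn.datasets import load_wine
from sklearn.decomposition import PCA
from sklearn.preprocessing import StandardScaler

# Assumed setup: the wine data, standardized feature by feature.
wine = load_wine()
X_scaled = StandardScaler().fit_transform(wine.data)

pca = PCA(n_components=2).fit(X_scaled)

# Fraction of the total variance captured by each principal component.
ratio = pca.explained_variance_ratio_
print(ratio)
print(ratio.sum())

# Passing a float between 0 and 1 asks PCA to keep just enough components
# to reach that share of the variance (0.9 is an arbitrary example value).
pca_90 = PCA(n_components=0.9).fit(X_scaled)
print(pca_90.n_components_)
```

If the summed ratio for two components is low, the 2D scatter plot is still useful for visualization, but a model trained on the reduced data may need more components.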

Relationship between the original features and the PCA principal components

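# Draw the component weights as a heat map: one row per principal component, one column per original feature

plt.matshow(pca.components_, cmap='plasma')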

plt.yticks([0,1],['component 1','component 2'])

plt.colorbar()

plt.xticks(range(len(wine.feature_names)),wine.feature_names,rotation=60,ha='left')

plt.show()

Result analysis: each of the two principal components involves all 13 features. A positive value for a feature means that it is positively correlated with that principal component; a negative value means that it is negatively correlated.
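The same relationship can be read as numbers instead of colors. The sketch below rebuilds the assumed setup (standardized wine data, two components) and prints each feature's weight in `pca.components_`, sorted by absolute size. Note that the overall sign of a principal component is a convention: flipping every weight in a row describes the same direction, so the relative signs within a component are what matter.

```python
import numpy as np
from sklearn.datasets import load_wine
from sklearn.decomposition import PCA
from sklearn.preprocessing import StandardScaler

# Assumed setup: the wine data, standardized feature by feature.
wine = load_wine()
X_scaled = StandardScaler().fit_transform(wine.data)
pca = PCA(n_components=2).fit(X_scaled)

# components_ has one row per principal component and one column per feature.
for i, component in enumerate(pca.components_, start=1):
    print(f"component {i}")
    # List the features from the largest to the smallest absolute weight.
    for j in np.argsort(np.abs(component))[::-1]:
        print(f"  {wine.feature_names[j]:<30s} {component[j]:+.3f}")
```

Each row of `components_` is a unit-length vector, so the weights within a component can be compared directly with one another.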
