1. Bidirectional RNN

2. Stacked bidirectional RNN

3. Classifying the MNIST dataset with a bidirectional LSTM

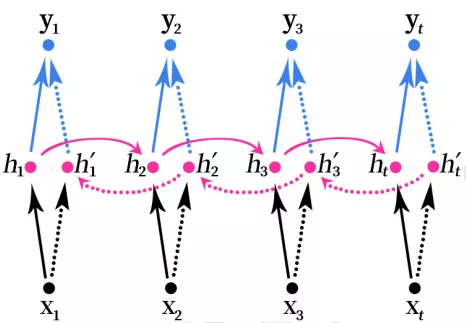

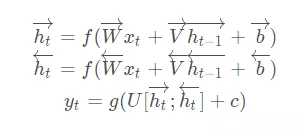

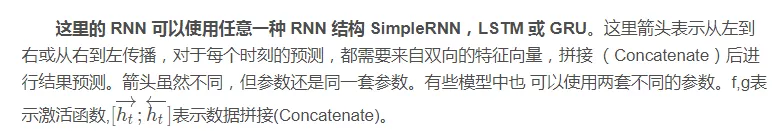

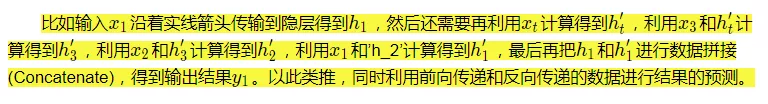

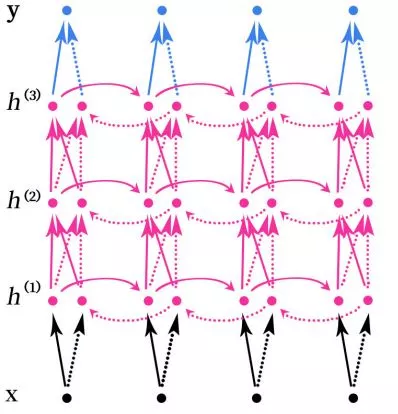

1. Bidirectional RNN

The structure of a bidirectional RNN is shown in the figure.

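A bidirectional RNN uses information from both the "past" and the "future" at the same time: one RNN processes the sequence from start to end, a second RNN processes it from end to start, and at each time step the outputs of the two directions are combined. The figure shows a bidirectional RNN unrolled over a sequence of length 4.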

A bidirectional RNN works a bit like doing a reading-comprehension exercise: we read the article from beginning to end, then read it again from the end back to the beginning, and only then answer the questions. Reading it backward may give us a new and different understanding, so in the end the model may achieve better results.
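To make the idea concrete, here is a minimal NumPy sketch of a bidirectional simple (tanh) RNN, assuming small randomly initialized weights and the hypothetical helper names `rnn_pass`, `bidirectional_rnn` and `make_params`. One RNN reads the sequence forward, a second RNN with its own weights reads it backward, and the backward states are flipped back so that the two hidden states for the same time step can be concatenated. Concatenation is also the default `merge_mode` of the Keras `Bidirectional` wrapper used later in this article.

```python
import numpy as np

def rnn_pass(x, W_x, W_h, b):
    """Run a simple tanh RNN over x (time_steps x input_size) and return
    the hidden state at every time step (time_steps x hidden_size)."""
    h = np.zeros(W_h.shape[0])
    states = []
    for x_t in x:
        h = np.tanh(x_t @ W_x + h @ W_h + b)
        states.append(h)
    return np.stack(states)

def bidirectional_rnn(x, fwd_params, bwd_params):
    """Forward pass over x, backward pass over reversed x; the backward
    states are reversed again so index t lines up, then concatenated."""
    h_fwd = rnn_pass(x, *fwd_params)
    h_bwd = rnn_pass(x[::-1], *bwd_params)[::-1]
    return np.concatenate([h_fwd, h_bwd], axis=-1)

rng = np.random.default_rng(0)
time_steps, input_size, hidden_size = 4, 3, 5  # sequence of length 4, as in the figure

def make_params():
    # Hypothetical small random weights; each direction gets its own set
    return (rng.standard_normal((input_size, hidden_size)) * 0.1,
            rng.standard_normal((hidden_size, hidden_size)) * 0.1,
            np.zeros(hidden_size))

fwd_params, bwd_params = make_params(), make_params()
x = rng.standard_normal((time_steps, input_size))
out = bidirectional_rnn(x, fwd_params, bwd_params)
print(out.shape)  # (4, 10): 5 forward + 5 backward features per step
```

At step t, the first 5 features summarize `x[0..t]` (the past) and the last 5 summarize `x[t..3]` (the future).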

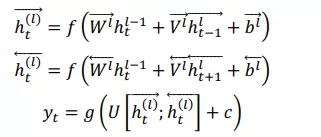

2. Stacked bidirectional RNN

The structure of a stacked bidirectional RNN is shown in the figure above. It is an RNN with 3 stacked hidden layers.

Note that stacking is not limited to bidirectional RNNs: any RNN can be stacked, and recurrent networks such as SimpleRNN, LSTM and GRU can all be stacked as well.

Stacking means placing multiple RNN layers on top of one another, just as multiple layers can be stacked in a BP neural network (a multilayer feedforward network trained with backpropagation). This increases the nonlinearity of the network.
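A minimal NumPy sketch of stacking, assuming random weights and the hypothetical helpers `rnn_layer` and `stacked_rnn`: each layer returns its hidden state at every time step, and that whole sequence becomes the input of the next layer. The first layer maps `input_size` features to `hidden_size`; every later layer maps `hidden_size` to `hidden_size`.

```python
import numpy as np

def rnn_layer(x, W_x, W_h, b):
    """Simple tanh RNN layer that returns the hidden state at every step."""
    h = np.zeros(W_h.shape[0])
    outputs = []
    for x_t in x:
        h = np.tanh(x_t @ W_x + h @ W_h + b)
        outputs.append(h)
    return np.stack(outputs)

def stacked_rnn(x, layers):
    """Each layer consumes the full output sequence of the layer below."""
    for params in layers:
        x = rnn_layer(x, *params)
    return x

rng = np.random.default_rng(1)
time_steps, input_size, hidden_size, num_layers = 4, 3, 5, 3

# Hypothetical random weights for 3 stacked layers
layers = []
in_dim = input_size
for _ in range(num_layers):
    layers.append((rng.standard_normal((in_dim, hidden_size)) * 0.1,
                   rng.standard_normal((hidden_size, hidden_size)) * 0.1,
                   np.zeros(hidden_size)))
    in_dim = hidden_size  # later layers read the previous layer's hidden states

x = rng.standard_normal((time_steps, input_size))
out = stacked_rnn(x, layers)
print(out.shape)  # (4, 5)
```

This is why, in Keras, every recurrent layer in a stack except the last is typically created with `return_sequences=True`: the next recurrent layer needs a 3-D sequence rather than only the final state.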

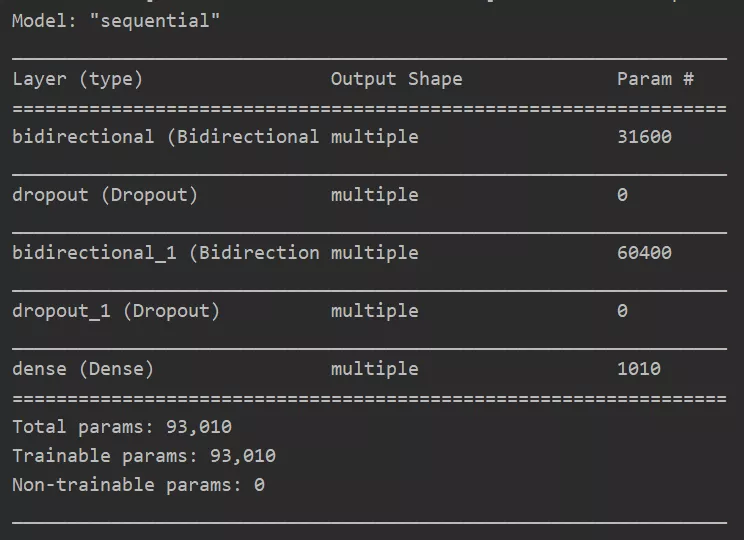

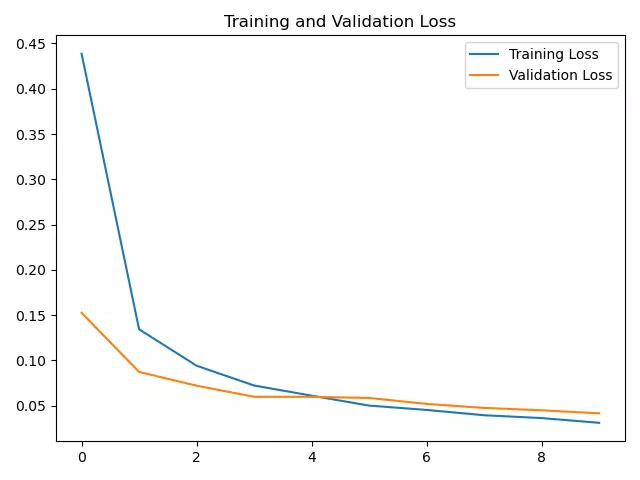

3. Classifying the MNIST dataset with a bidirectional LSTM

```python
import tensorflow as tf
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense, LSTM, Dropout, Bidirectional
from tensorflow.keras.optimizers import Adam
import matplotlib.pyplot as plt

# Load the MNIST dataset; it is already split into training and test sets
mnist = tf.keras.datasets.mnist
# x_train: (60000, 28, 28), y_train: (60000,)
# x_test:  (10000, 28, 28), y_test:  (10000,)
(x_train, y_train), (x_test, y_test) = mnist.load_data()

# Normalize the training and test data, which helps the model train faster
x_train, x_test = x_train / 255.0, x_test / 255.0

# Convert the training and test labels to one-hot encoding
y_train = tf.keras.utils.to_categorical(y_train, num_classes=10)
y_test = tf.keras.utils.to_categorical(y_test, num_classes=10)

# Input size per time step: each image row has 28 pixels
input_size = 28
# Sequence length: each image has 28 rows
time_steps = 28
# Number of memory blocks (units) in each LSTM direction
cell_size = 50

# Build the model
# The input to a recurrent network must be 3-D data in the format
# (number of samples, sequence length, input size).
# The loaded MNIST data already has this format.
# The input shape does not include the number of samples.
model = Sequential([
    tf.keras.Input(shape=(time_steps, input_size)),
    # return_sequences=True passes the whole sequence to the next recurrent layer
    Bidirectional(LSTM(units=cell_size, return_sequences=True)),
    Dropout(0.2),
    Bidirectional(LSTM(cell_size)),
    Dropout(0.2),
    # The bidirectional LSTM outputs 2 * 50 = 100 values (forward and backward
    # concatenated), fully connected to the 10 neurons of the output layer
    Dense(10, activation='softmax'),
])

# Define the optimizer (`learning_rate` replaces the deprecated `lr` argument)
adam = Adam(learning_rate=1e-3)
# Set the optimizer and the cross-entropy loss, and track accuracy during training
model.compile(optimizer=adam, loss='categorical_crossentropy', metrics=['accuracy'])

# Train the model
history = model.fit(x_train, y_train, batch_size=64, epochs=10,
                    validation_data=(x_test, y_test))

# Print the model summary
model.summary()

loss = history.history['loss']
val_loss = history.history['val_loss']
accuracy = history.history['accuracy']
val_accuracy = history.history['val_accuracy']

# Plot the loss curves
plt.plot(loss, label='Training Loss')
plt.plot(val_loss, label='Validation Loss')
plt.title('Training and Validation Loss')
plt.legend()
plt.show()

# Plot the accuracy curves
plt.plot(accuracy, label='Training accuracy')
plt.plot(val_accuracy, label='Validation accuracy')
plt.title('Training and Validation Accuracy')
plt.legend()
plt.show()
```

Bidirectional RNNs are probably better suited to text data; applying this model to MNIST is a bit of a stretch, so treat it as a simple test.

Model summary:

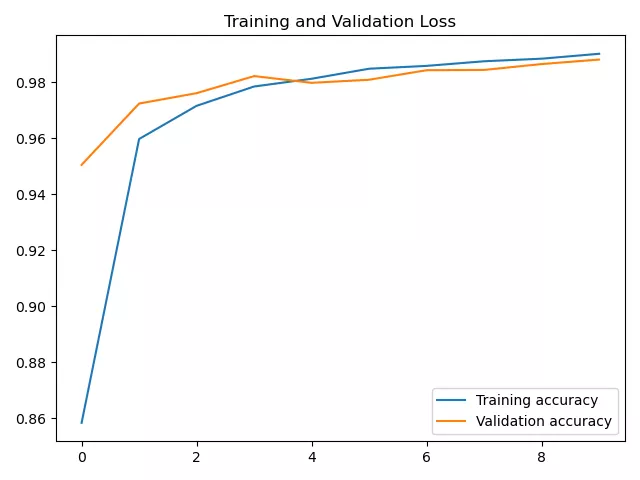
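The parameter counts in the model summary can be checked by hand. The sketch below assumes the Keras defaults this model uses (`use_bias=True` for `LSTM` and `Dense`, `merge_mode='concat'` for `Bidirectional`), under which an LSTM layer has 4 gates, each with an input kernel, a recurrent kernel and a bias vector. The helper names are hypothetical.

```python
def lstm_params(input_dim, units):
    # 4 gates, each with an input kernel, a recurrent kernel and a bias
    return 4 * (units * (input_dim + units) + units)

def bidirectional_lstm_params(input_dim, units):
    # Forward and backward LSTMs have separate weights
    return 2 * lstm_params(input_dim, units)

def dense_params(input_dim, units):
    return input_dim * units + units

input_size, cell_size, num_classes = 28, 50, 10
layer1 = bidirectional_lstm_params(input_size, cell_size)     # outputs 2 * 50 = 100 features per step
layer2 = bidirectional_lstm_params(2 * cell_size, cell_size)  # reads 100 features, outputs 100
dense = dense_params(2 * cell_size, num_classes)              # Dropout layers add no parameters
print(layer1, layer2, dense, layer1 + layer2 + dense)
# 31600 60400 1010 93010
```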

Accuracy curve:

Loss curve:

This concludes the sample code for implementing a bidirectional RNN and a stacked bidirectional RNN in Python.