Staying at home over the holidays was boring. While browsing the web, I came across a picture site featuring a pretty young woman, and the pictures were high-definition, so I decided to write a simple crawler to grab them.

The crawler mainly uses the requests library (plus re for matching). Here is a brief walkthrough of the process.

First, I use the most common method, `requests.get()`, to request the site and check whether the HTML comes back. The code is as follows:

```python
import requests

header1 = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) '
                  'Chrome/97.0.4692.99 Safari/537.36'}

res = requests.get('http://www.xxxxxxxx.com/forum-43-2.html', headers=header1)  # the site address is masked here
html = res.text
print(html)
```

A quick run prints the HTML, which shows that requesting with this header gets a response from the site, so I use the same header for all later requests.

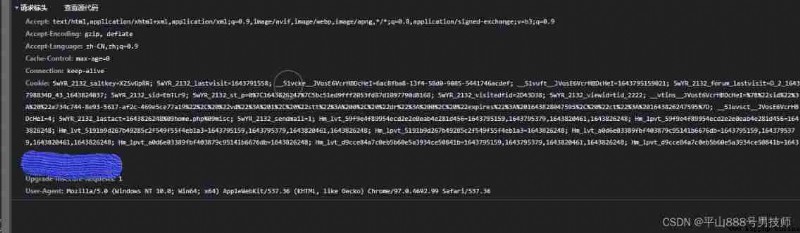

The header can be found in the browser's developer tools. I use Chrome: open the developer tools and look up the User-Agent in the request headers. With it, the script requests the web server the way a browser would; otherwise requests sends its own default User-Agent, which identifies the request as coming from the requests library. If the site has anti-crawling measures, you can crawl through a proxy IP.
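To illustrate the header point, here is a minimal sketch, assuming the requests library the post already uses. It compares requests' built-in User-Agent with the browser one, and puts the header on a `requests.Session` so it is sent with every request. `session.prepare_request` only builds the request, so nothing touches the network; the commented proxy address is a hypothetical placeholder.

```python
import requests

BROWSER_UA = ('Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) '
              'Chrome/97.0.4692.99 Safari/537.36')

# What requests sends when you pass no header, e.g. "python-requests/2.31.0"
default_ua = requests.utils.default_user_agent()

# A Session attaches its headers to every request it makes
session = requests.Session()
session.headers.update({'User-Agent': BROWSER_UA})
# session.proxies.update({'http': 'http://127.0.0.1:8080'})  # hypothetical proxy address

# Build (but do not send) a request to inspect the headers that would go out
prepared = session.prepare_request(
    requests.Request('GET', 'http://www.xxxxxxxx.com/forum-43-2.html'))

print(default_ua)
print(prepared.headers['User-Agent'])
```

With a session like this, `session.get(url)` replaces `requests.get(url, headers=header1)` and the header never has to be passed again.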

After getting the page back, we need to find where the pictures are. In Chrome, right-click a picture and choose Inspect to see its tag in the HTML source. Copy that tag, then use the `findall` method of the `re` library with a simple regular expression to match the addresses we need. The code is as follows:

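```python
import re

# (.*?) captures the address; the bare .*? parts just skip over the other attribute values
URL_list = re.findall('<a href="(.*?)" onclick=".*?" title=".*?" class="z"', html)
print(URL_list)
```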

Wrap the part we want to extract (the address) in parentheses `()`; the rest of the pattern needs no parentheses. `findall` then returns only what the parenthesized group captured.
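To see what the parentheses change, here is a small check on made-up HTML shaped like the list-page links (the `thread-…` paths and `atarget(this)` values are hypothetical). `.*?` is the non-greedy form, so each one matches as little as the rest of the pattern allows instead of swallowing several tags at once.

```python
import re

# Hypothetical snippet shaped like the links the pattern targets
html = ('<a href="thread-1-1-1.html" onclick="atarget(this)" title="first" class="z">'
        '<a href="thread-2-1-1.html" onclick="atarget(this)" title="second" class="z">')

# One group: findall returns only the captured part of each match
with_group = re.findall(r'<a href="(.*?)" onclick=".*?" title=".*?" class="z"', html)
# No group: findall returns the whole matched text
without_group = re.findall(r'<a href=".*?" onclick=".*?" title=".*?" class="z"', html)

print(with_group)
print(without_group[0])
```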

Printing the result shows that the matched elements are relative paths: the site address has to be prepended to form a complete picture source address, so we build a new list.

This gives us the source address of each picture:

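```python
URL_Header = 'http://www.xxxxxxxx.com/'  # the matched paths are relative to the site root

# pic_url_list holds the relative addresses matched by re.findall
LIST_PIC_URL = []
for pic_url in pic_url_list:
    LIST_PIC_URL.append(URL_Header + pic_url)  # prepend the site address
print(LIST_PIC_URL)
```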
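Plain string concatenation works as long as every matched path is relative and has no leading slash. As a sketch of a more forgiving alternative, the standard library's `urllib.parse.urljoin` resolves each path against the site address the way a browser does, and leaves absolute URLs untouched. The sample paths below are made up.

```python
from urllib.parse import urljoin

URL_Header = 'http://www.xxxxxxxx.com/'
# Hypothetical matches: relative, root-relative, and already absolute
pic_url_list = ['data/attachment/forum/a.jpg',
                '/data/attachment/forum/b.jpg',
                'http://img.example.com/c.jpg']

# Concatenation doubles the slash or mangles absolute URLs
concatenated = [URL_Header + p for p in pic_url_list]
# urljoin resolves each path against the base URL
joined = [urljoin(URL_Header, p) for p in pic_url_list]

print(joined)
```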

Once you have the source addresses, you can save the pictures directly. Here I use `with open(...)`, a common way to save pictures. Each picture also has to be requested from the server, and the returned content is then written to a file. `path` is the folder where the pictures are stored, and the file name is the part of the URL after the last `/`. This saves all the pictures on the current page.

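```python
import os

for PIC_URL in LIST_PIC_URL:
    file_name = PIC_URL.split('/')[-1]  # the part after the last "/"
    RES = requests.get(PIC_URL, headers=header1)  # request the picture itself
    # os.path.join adds the separator between the folder and the file name
    with open(os.path.join(path, file_name), 'wb') as f:
        f.write(RES.content)  # save the raw bytes of the response
```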
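Joining a folder and a file name with plain `+` drops the separator (`r'J:\pic\mj' + 'a.jpg'` gives `J:\pic\mja.jpg`), and `split('/')[-1]` keeps any query string in the name. Below is a small stdlib-only sketch of both steps; the helper names are hypothetical, and a temporary folder stands in for the real picture folder.

```python
import os
import tempfile
from urllib.parse import urlsplit


def file_name_from_url(pic_url):
    # Last segment of the URL path, ignoring any ?query or #fragment
    return os.path.basename(urlsplit(pic_url).path)


def save_bytes(folder, pic_url, content):
    # os.path.join inserts the right separator for the current OS
    target = os.path.join(folder, file_name_from_url(pic_url))
    with open(target, 'wb') as f:  # 'wb' because image data is binary
        f.write(content)
    return target


folder = tempfile.mkdtemp()  # stands in for a real folder such as J:\pic\mj
saved = save_bytes(folder, 'http://www.xxxxxxxx.com/data/a.jpg?x=1', b'\xff\xd8fake jpeg bytes')
print(saved)
```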

Next, I wrap the steps above in a function: pass in a page URL, and it saves all the pictures on that page. The code is as follows:

```python
import os
import re

import requests


def save_img(URL):
    """Download every full-size picture on one post page into `path`."""
    path = r'J:\pic\mj'  # local folder where the pictures are stored
    header1 = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) '
                      'Chrome/97.0.4692.99 Safari/537.36'}
    URL_Header = 'http://www.xxxxxxxx.com/'  # the matched list elements need this prefix to form a URL

    res = requests.get(URL, headers=header1)
    html_list = res.text

    # The full-size picture address is in the file="..." attribute of each <img> tag
    pic_url_list = re.findall('<img id=".*?" aid=".*?" src=".*?" zoomfile=".*?" file="(.*?)" class=".*?" onclick=".*?" width=".*?" alt=".*?" title=".*?" w=".*?" />', html_list)

    # Prepend the site address to each relative path
    LIST_PIC_URL = [URL_Header + pic_url for pic_url in pic_url_list]
    print(LIST_PIC_URL)

    for PIC_URL in LIST_PIC_URL:
        file_name = PIC_URL.split('/')[-1]  # the part after the last "/"
        RES = requests.get(PIC_URL, headers=header1)
        # os.path.join adds the separator between the folder and the file name
        with open(os.path.join(path, file_name), 'wb') as f:
            f.write(RES.content)
```
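The pattern in `save_img` only matches when every attribute appears in exactly that order. A looser alternative (my assumption, not the author's pattern) looks only for a `file="..."` attribute inside an `<img>` tag; the `\s` before `file` keeps it from matching `zoomfile`. The sketch below, with hypothetical names and made-up HTML, also takes the downloader as a parameter so the parsing and saving can be tried offline.

```python
import os
import re
import tempfile
from urllib.parse import urljoin

# file="..." inside any <img ...> tag, whatever the attribute order
IMG_FILE_RE = re.compile(r'<img\b[^>]*?\sfile="([^"]+)"')


def save_page_images(page_html, base_url, folder, fetch):
    """Save every picture referenced by file="..." in page_html.

    fetch is any callable that takes a URL and returns the image bytes.
    """
    saved = []
    for rel in IMG_FILE_RE.findall(page_html):
        pic_url = urljoin(base_url, rel)
        target = os.path.join(folder, pic_url.split('/')[-1])
        with open(target, 'wb') as f:
            f.write(fetch(pic_url))
        saved.append(target)
    return saved


# Offline demo: made-up HTML and a fake fetch that returns the URL as bytes
sample = ('<img id="a1" zoomfile="data/big1.jpg" file="data/p1.jpg" class="zoom" />'
          '<img class="zoom" file="data/p2.jpg" id="a2" />')
folder = tempfile.mkdtemp()
saved = save_page_images(sample, 'http://www.xxxxxxxx.com/', folder, lambda url: url.encode())
print(saved)
```

Against the real site, `fetch` could be `lambda u: requests.get(u, headers=header1).content`.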

The site sorts its pictures into several categories; here I use the youth category as the example.

The youth category has 15 pages. Clicking through the page tabs shows that each page's URL changes in a regular pattern, and each page contains 20 preview images whose link URLs can be crawled with the steps above. So we only need to construct the URL of each page, crawl the preview links from it, and pass each one to the function above to batch-crawl all 15 pages. The method is the same as before, so here is the code directly:

```python
import re

import requests

import getpic  # save_img from above is stored in getpic.py; import it to call it

header1 = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) '
                  'Chrome/97.0.4692.99 Safari/537.36'}

URL_H = 'http://www.xxxxxxxx.com/'

# The list pages follow the pattern forum-43-<page>.html, pages 1 to 15
LIST_URL_O = ['http://www.xxxxxxxx.com/forum-43-' + str(k) + '.html' for k in range(1, 16)]

LIST = []
for page_url in LIST_URL_O:
    res = requests.get(page_url, headers=header1)
    html = res.text
    # Each preview picture links to its post page
    URL_list = re.findall('<a href="(.*?)" onclick=".*?" title=".*?" class="z"', html)
    print(URL_list)
    print(len(URL_list))
    for post_url in URL_list:
        LIST.append(URL_H + post_url)  # prepend the site address

print(LIST)

# Save every picture on every post page
for URL in LIST:
    getpic.save_img(URL)
```
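The final loop fires roughly 300 post requests (15 pages of 20 previews) back to back. As a minimal sketch of a gentler variant, assuming a fixed one-second pause is acceptable (the `crawl_all` name and the delay value are hypothetical choices), the loop below skips repeated post URLs and waits between calls. The demo uses made-up URLs and a stand-in for `getpic.save_img`.

```python
import time


def crawl_all(post_urls, save, delay=1.0):
    """Call save(url) once per distinct post URL, pausing between calls."""
    # dict.fromkeys drops repeated URLs but keeps their first-seen order
    unique_urls = list(dict.fromkeys(post_urls))
    for url in unique_urls:
        save(url)
        time.sleep(delay)  # a short pause is gentler on the server
    return len(unique_urls)


# Offline demo: list.append stands in for getpic.save_img
seen = []
count = crawl_all(['http://www.xxxxxxxx.com/thread-1-1-1.html',
                   'http://www.xxxxxxxx.com/thread-2-1-1.html',
                   'http://www.xxxxxxxx.com/thread-1-1-1.html'],
                  seen.append, delay=0)
print(count)
```

In the script above, this would be called as `crawl_all(LIST, getpic.save_img)`.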

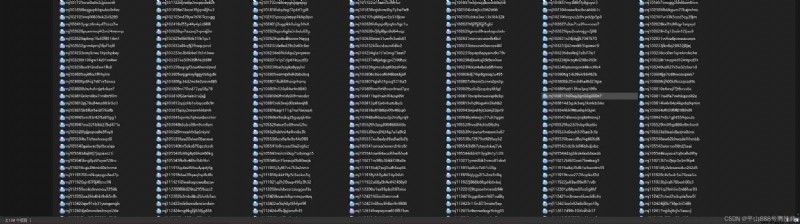

After running the script, you get the pictures: more than 2,000 in total, all of them full-size.

Corrections and suggestions are welcome. Thanks!