While going through a lot of Python code, I found that GPU memory often cannot be released, or is only partially released. To address this, I implemented a custom DLL that Python can call to release GPU memory. It works with PyTorch, TensorFlow, onnxruntime and other CUDA-based runtimes: its effect does not depend on the AI framework, only on the CUDA C++ API it calls.

For how to export a custom DLL for use from Python, see my earlier post "vs2019: exporting a dynamic link library (dll) for other VS projects and Python code" (https://hpg123.blog.csdn.net/article/details/125396626). It walks through exporting a VS project as a DLL for C++ and Python projects, including the export of global variables, functions and custom classes. After creating the project, write the core code in dllmain.cpp (its original contents can be ignored) and put the header declarations in pch.h. Because this DLL uses CUDA, the project must also be configured for CUDA; for the configuration, see my post "libtorch example of video memory management".
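What `reset_cuda` does inside the DLL is not shown here. As a hedged illustration of releasing GPU memory at the CUDA API level, the sketch below calls the CUDA runtime's `cudaDeviceReset()` directly through ctypes. It assumes the CUDA runtime library can be found on the library search path (on Windows with Python 3.8+, after `os.add_dll_directory` on the CUDA `bin` folder); the candidate library names in `DEFAULT_CUDART_NAMES` and the helper name `reset_cuda_via_runtime` are assumptions to adapt to your CUDA version, not part of the author's DLL.

```python
import ctypes
import sys
from typing import Optional, Sequence

# Assumed candidate names for the CUDA runtime library; adjust to your CUDA version.
DEFAULT_CUDART_NAMES = (
    ("cudart64_110.dll", "cudart64_12.dll") if sys.platform == "win32"
    else ("libcudart.so", "libcudart.so.11.0", "libcudart.so.12")
)


def reset_cuda_via_runtime(names: Sequence[str] = DEFAULT_CUDART_NAMES) -> Optional[int]:
    """Call cudaDeviceReset() from the first CUDA runtime library that loads.

    Returns the cudaError_t code (0 means cudaSuccess),
    or None if none of the candidate libraries could be loaded.
    """
    for name in names:
        try:
            cudart = ctypes.CDLL(name)
        except OSError:
            continue  # library not found on this system; try the next name
        # C signature: cudaError_t cudaDeviceReset(void); the enum is int-sized
        cudart.cudaDeviceReset.restype = ctypes.c_int
        cudart.cudaDeviceReset.argtypes = []
        return cudart.cudaDeviceReset()
    return None
```

`cudaDeviceReset()` destroys all resources associated with the current device in the current process, so any tensors, models or sessions a framework still holds become invalid afterwards; call it only once the process is done using the GPU.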


The DLL used in the code below can be downloaded from the following link; alternatively, build it yourself by following the post above.

python release CUDA cache library (CSDN download): a DLL I implemented that releases GPU memory once it is loaded from Python, independent of the AI framework; it supports PyTorch, among others. https://download.csdn.net/download/a486259/85725926


All of this relies on CUDA, so CUDA must be installed and configured on the machine. Adjust the path to the DLL file to match your own setup. The code also uses pynvml to query GPU memory; if it is not installed, install it with pip install pynvml.
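Since the DLL path has to be adjusted by hand, a small lookup helper can turn a wrong path into a clear error message instead of a bare `OSError` from `LoadLibrary`. This is a minimal sketch; `locate_dll` and the directories you pass to it are hypothetical and not part of the downloadable library.

```python
from pathlib import Path
from typing import Iterable


def locate_dll(filename: str, search_dirs: Iterable[str]) -> str:
    """Return the absolute path of the first existing `filename` in `search_dirs`.

    Raises FileNotFoundError listing every location that was tried.
    """
    tried = []
    for directory in search_dirs:
        candidate = Path(directory) / filename
        tried.append(str(candidate))
        if candidate.is_file():
            return str(candidate.resolve())
    raise FileNotFoundError(f"{filename} not found; tried: {tried}")
```

For example, `ctypes.cdll.LoadLibrary(locate_dll("dll_export.dll", [os.getcwd(), r"D:\my_dlls"]))` tries the working directory first and then an assumed fallback folder.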

```python
import ctypes
import os

import pynvml

pynvml.nvmlInit()


def get_cuda_msg(tag=""):
    # Query memory usage of GPU 0 through NVML and print it in MiB
    handle = pynvml.nvmlDeviceGetHandleByIndex(0)
    meminfo = pynvml.nvmlDeviceGetMemoryInfo(handle)
    print(tag, ", used:", meminfo.used / 1024**2, "MiB", "free:", meminfo.free / 1024**2, "MiB")


# Python < 3.8: append the CUDA bin directory to PATH instead
# os.environ['PATH'] += os.pathsep + r'C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v11.1\bin'

# Python 3.8+: register the directory of the CUDA DLLs that dll_export.dll depends on
os.add_dll_directory(r'C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v11.1\bin')

# Adjust this path to wherever dll_export.dll is stored
lib = ctypes.cdll.LoadLibrary(os.path.join(os.getcwd(), "dll_export.dll"))

# The program may not exit normally at the end unless the DLL is released explicitly (requires pywin32):
# import win32api; win32api.FreeLibrary(lib._handle)

# lib.reset_cuda()
```

The model built here is only a demo with no practical significance; you can substitute your own model and framework.

import torch

import torch.nn as nn

import torch.nn.functional as F

class MyModel(nn.Module):

def __init__(self):

super(MyModel, self).__init__()

self.conv1 = nn.Conv2d(6, 256, kernel_size=3, stride=1, padding=0)

self.relu = nn.ReLU()

self.conv2 = nn.Conv2d(256, 256, kernel_size=3, stride=1, padding=0)

self.conv3 = nn.Conv2d(256, 256, kernel_size=3, stride=1, padding=0)

self.conv4 = nn.Conv2d(256, 512, kernel_size=3, stride=1, padding=0)

self.conv5 = nn.Conv2d(512, 512, kernel_size=3, stride=1, padding=0)

self.conv61 = nn.Conv2d(512, 3, kernel_size=3, stride=1, padding=0)

self.conv62 = nn.Conv2d(512, 3, kernel_size=3, stride=1, padding=0)

self.global_pooling = nn.AdaptiveAvgPool2d(output_size=(1, 1))

# hypothesis x1 And x2 The number of channels of is 3

def forward(self, x1, x2):

x = torch.cat([x1, x2], dim=1)

x = self.conv1(x)

x = self.conv2(x)

x = self.conv3(x)

x = self.conv4(x)

x = self.conv5(x)

x1= self.conv61(x)

x2= self.conv62(x)

x1 = self.global_pooling(x1).view(-1)

x2 = self.global_pooling(x2).view(-1)

x1 = F.softmax(x1,dim=0)

x2 = F.softmax(x2,dim=0)

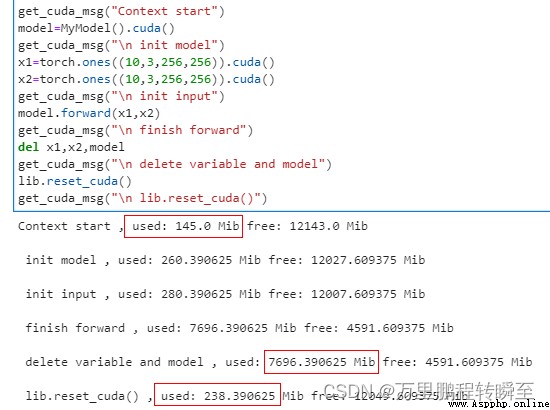

return x1,x2The code and running results are as follows , Contains model initialization 、 Variable initialization 、forword after 、del After change 、 After the video memory is released (reset_cuda)5 Changes of video memory before and after the States . You can see the call lib.reset_cuda The rear video memory has basically been restored .

```python
get_cuda_msg("Context start")

model = MyModel().cuda()
get_cuda_msg("\n init model")

x1 = torch.ones((10, 3, 256, 256)).cuda()
x2 = torch.ones((10, 3, 256, 256)).cuda()
get_cuda_msg("\n init input")

model(x1, x2)  # forward pass
get_cuda_msg("\n finish forward")

del x1, x2, model
get_cuda_msg("\n delete variable and model")

lib.reset_cuda()
get_cuda_msg("\n lib.reset_cuda()")
```
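The comment in the first listing notes that the program may not exit normally unless the DLL is released explicitly, and uses pywin32's `win32api.FreeLibrary`. As a hedged alternative that needs no extra package, the sketch below releases the handle through CPython's private `_ctypes` module (`FreeLibrary` on Windows, `dlclose` elsewhere). These are undocumented internals that may change between Python versions, and `free_library` is a hypothetical helper name.

```python
import ctypes
import sys


def free_library(lib: ctypes.CDLL) -> None:
    """Release the OS handle behind a library loaded with ctypes.

    The `lib` object must not be used for any further calls afterwards.
    """
    import _ctypes  # CPython's private ctypes backend (undocumented)

    if sys.platform == "win32":
        _ctypes.FreeLibrary(lib._handle)  # wraps the Win32 FreeLibrary call
    else:
        _ctypes.dlclose(lib._handle)  # wraps POSIX dlclose; raises OSError on failure
```

For example, call `lib.reset_cuda()`, then `free_library(lib)` and `del lib`, and make no further calls through `lib`.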