I've been reading some Python source code recently and came across the `async` keyword. I looked it up and found that it is how Python writes coroutines.

I have used coroutines in Go. In short, a coroutine is a lightweight thread whose scheduling is handled by the language itself rather than by the operating system. I assumed Python's coroutines would be the same thing. But when I searched on Google, the older write-ups all gave an example like this:

    def consumer():
        r = ''
        while True:
            n = yield r
            if not n:
                return
            print('[CONSUMER] Consuming %s...' % n)
            r = '200 OK'


    def produce(c):
        c.send(None)  # prime the generator: run it up to the first yield
        n = 0
        while n < 5:
            n = n + 1
            print('[PRODUCER] Producing %s...' % n)
            r = c.send(n)
            print('[PRODUCER] Consumer return: %s' % r)
        c.close()


    c = consumer()
    produce(c)

That's it? Is this a coroutine? Isn't this just a generator?

But think about what a generator can do:

It can save its running state and restore it the next time it executes, so calling it a coroutine seems fair enough. Python coroutines also run in a single thread, that is, serially, so there is generally no need for locks.
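To make the "saves its state and restores it" point concrete, here is a minimal sketch. The `running_total` generator is a made-up name for illustration, not something from the examples above; it only shows that local variables survive between suspensions.

```python
def running_total():
    """Generator that keeps a running sum across suspensions."""
    total = 0
    while True:
        # Execution pauses here; `total` is kept until the next send().
        value = yield total
        total += value


gen = running_total()
next(gen)              # run up to the first yield (priming)
first = gen.send(10)   # resume with 10, then pause again
second = gen.send(5)   # resume with 5; the earlier 10 is still remembered
print(first, second)   # 10 15
```

Nothing here is asynchronous yet; it is just the suspend and resume mechanism that coroutines build on.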

So, looking at the generator above, you may wonder: is this thing really just a generator? Why call it a coroutine? You're right, it is a generator.

As said above, the defining feature of a coroutine is that it can suspend the current function, save the current state, and resume on the next execution. In a single-threaded program, when is that needed? When waiting: for a file to open, for a lock, for a network response, and so on. While one piece of the program has nothing to do, it can let other work run first and come back once it is ready. Below is a simple sleep example.

How would we implement a task queue with plain `yield` generators? Here is a quick version I wrote:

    import time


    def yield_sleep(delay):
        start_time = time.time()
        while True:
            if time.time() - start_time < delay:
                yield
            else:
                break


    def hello(name):
        print(f"{name}-1-{time.strftime('%X')}")
        yield from yield_sleep(1)
        print(f"{name}-2-{time.strftime('%X')}")


    # Create tasks
    tasks = [hello('one'), hello('two')]
    while True:
        if len(tasks) <= 0:
            break
        # Copy the list so that removing items below does not disturb iteration
        copy_tasks = tasks[:]
        for task in copy_tasks:
            try:
                next(task)
            except StopIteration:
                # Iteration complete, remove the task
                tasks.remove(task)

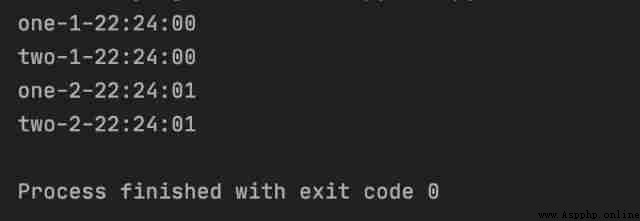

A brief explanation: each task hands control back at its `yield`, and we rotate through the tasks. This way, work that would take 2s when run one after the other takes only about 1s. (This is just a simple implementation to illustrate the effect.)

So by this logic, every language that supports `yield` generators effectively supports coroutines too, including my old friend PHP, heh.

Python 3.4 introduced the `asyncio` package, which wraps asynchronous I/O operations.

In short, `asyncio` maintains a task queue internally. When a function reaches a `yield` and gives up control, `asyncio` switches to the next task and continues from there. That's about it.

    import asyncio
    import time


    # Note: @asyncio.coroutine was deprecated in Python 3.8 and removed in 3.11,
    # so this generator-based style only runs on older versions.
    @asyncio.coroutine
    def hello(name):
        print(f"{name}-1-{time.strftime('%X')}")
        yield from asyncio.sleep(1)
        print(f"{name}-2-{time.strftime('%X')}")


    # Get the event loop
    loop = asyncio.get_event_loop()
    # Run the tasks concurrently
    tasks = [hello('one'), hello('two')]
    loop.run_until_complete(asyncio.wait(tasks))
    loop.close()

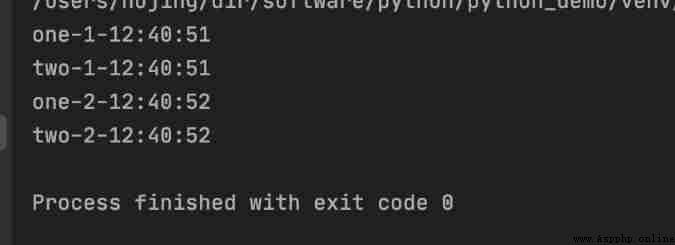

As you can see, when a function first reaches its `yield`, it is suspended and gives up control.

`asyncio.get_event_loop()` returns the event loop (the task queue). `loop.run_until_complete` runs the given tasks on that loop. `asyncio.sleep` has the same effect as the `yield_sleep` we implemented above: when a task is suspended at `yield`, another task is picked from the queue and executed.

One thing to watch out for: in Python, a coroutine has to hand over control itself. This is different from Go. In other words, a coroutine switch happens only when the running coroutine gives up control at a `yield from`, or when it finishes.

For example (a small modification of the example above):

    import asyncio
    import time


    # Note: @asyncio.coroutine was removed in Python 3.11 (older versions only).
    @asyncio.coroutine
    def hello(name, delay, not_yield):
        print(f"{name}-1-{time.strftime('%X')}")
        if not_yield:
            # Blocking wait: holds on to the thread and never yields control
            time.sleep(delay)
        else:
            yield from asyncio.sleep(1)
        print(f"{name}-2-{time.strftime('%X')}")


    # Get the event loop
    loop = asyncio.get_event_loop()
    # Run the tasks concurrently
    tasks = [hello('one', 2, False), hello('two', 5, True)]
    loop.run_until_complete(asyncio.wait(tasks))
    loop.close()

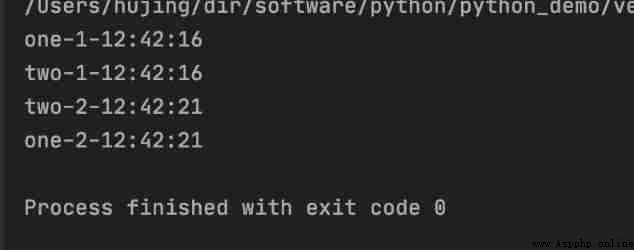

As you can see, although coroutine 1 only wanted to wait 1s, coroutine 2 held on to control without releasing it, so coroutine 1 could only get control back after coroutine 2 had finished, that is, after 5s.
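One common way around a blocking call like `time.sleep` is to push it onto a worker thread so the event loop stays free. The sketch below is my own illustration, not part of the original example: `blocking_wait` and `tracked` are hypothetical helpers, the delays are shortened, it uses the `async`/`await` spelling of the same idea, and `asyncio.to_thread` requires Python 3.9 or later.

```python
import asyncio
import time


def blocking_wait(delay):
    """Stand-in for any blocking call (hypothetical helper)."""
    time.sleep(delay)
    return delay


async def hello(name, delay, blocking):
    if blocking:
        # Run the blocking call in a worker thread; the event loop stays free.
        await asyncio.to_thread(blocking_wait, delay)
    else:
        await asyncio.sleep(delay)
    return name


async def main():
    start = time.monotonic()
    finished = []

    async def tracked(*args):
        # Record the order in which the coroutines complete.
        finished.append(await hello(*args))

    await asyncio.gather(tracked('one', 0.1, False), tracked('two', 0.5, True))
    return finished, time.monotonic() - start


order, elapsed = asyncio.run(main())
print(order, round(elapsed, 1))
```

Here `one` finishes first even though `two` is busy in a blocking call, because the blocking work no longer runs on the event loop's thread.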

Python 3.5 added the `async`/`await` syntactic sugar.

Simply put, replace `@asyncio.coroutine` with `async` and replace `yield from` with `await`. Everything else stays the same. After the replacement, the code above looks like this and means the same thing.

    import asyncio
    import time


    async def hello(name):
        print(f"{name}-1-{time.strftime('%X')}")
        await asyncio.sleep(1)
        print(f"{name}-2-{time.strftime('%X')}")


    # Get the event loop
    loop = asyncio.get_event_loop()
    # Run the tasks concurrently.
    # asyncio.wait() stopped accepting bare coroutines in Python 3.11,
    # so they are wrapped in tasks here.
    tasks = [loop.create_task(hello('one')), loop.create_task(hello('two'))]
    loop.run_until_complete(asyncio.wait(tasks))
    loop.close()

The approach above was only meant to show how things work underneath. These are the more common ways to use coroutines in Python:

    import asyncio
    import time


    async def hello(name):
        print(f"{name}-1-{time.strftime('%X')}")
        await asyncio.sleep(1)
        print(f"{name}-2-{time.strftime('%X')}")


    async def main():
        """ Create tasks and run them """
        # Option 1: create tasks, then wait for them
        task1 = asyncio.create_task(hello('one'))
        task2 = asyncio.create_task(hello('two'))
        # Once `asyncio.create_task` has been called, the task is already in the scheduling queue.
        # Even without an explicit `await`, it will run at the next coroutine switch.
        # We add `await` only to wait until the tasks have finished.
        await task1
        await task2
        # Wait for all the tasks to finish; same effect as awaiting them one by one
        await asyncio.wait({task1, task2})
        # Wait for a task with a timeout. If it has not finished in time, a timeout exception is raised
        await asyncio.wait_for(task1, 1)

        # Option 2: run coroutines in a batch. A simplified version of option 1.
        # The return value is a list of the coroutines' results, in argument order
        await asyncio.gather(
            hello('one'),
            hello('two')
        )

        """ Get task information """
        # Get the currently running task
        current_task = asyncio.current_task()
        # Get all unfinished tasks in the event loop
        all_task = asyncio.all_tasks()
        # Cancel a task so that it stops running (no effect here: task1 has already finished)
        task1.cancel()
        # Get the result of the task. If the task was cancelled, this raises an exception
        ret = task1.result()


    asyncio.run(main())

`asyncio.run(main())` creates and runs a coroutine task, so you no longer have to care about the event queue. All we need to do inside `main` is create tasks and run them.
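In the listing above every task has already finished by the time `wait_for` and `cancel` are called, so neither exception ever shows up. The following sketch, with a made-up `slow` coroutine and shortened delays, shows what happens when the task is still pending. It is only an illustration of the documented behaviour, not part of the original example.

```python
import asyncio


async def slow():
    await asyncio.sleep(10)
    return 'done'


async def main():
    outcomes = []

    # wait_for cancels the task and raises once the timeout expires.
    task = asyncio.create_task(slow())
    try:
        await asyncio.wait_for(task, timeout=0.1)
    except asyncio.TimeoutError:
        outcomes.append('timeout')
    outcomes.append(task.cancelled())

    # cancel() on a pending task: awaiting it then raises CancelledError.
    task2 = asyncio.create_task(slow())
    await asyncio.sleep(0)   # give task2 a chance to start
    task2.cancel()
    try:
        await task2
    except asyncio.CancelledError:
        outcomes.append('cancelled')
    return outcomes


outcomes = asyncio.run(main())
print(outcomes)   # ['timeout', True, 'cancelled']
```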

Note that a function defined with the `async` keyword is a coroutine function. You cannot run it by simply calling `hello('1234')`: the call does not execute the body, it only returns a coroutine object, which then has to be awaited or scheduled as a task.
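A small check of that behaviour, as a sketch: the `calls` list is just a hypothetical probe to show when the body actually runs.

```python
import asyncio
import inspect

calls = []  # records each time the body of hello() really executes


async def hello(name):
    calls.append(name)
    return f'hello {name}'


coro = hello('1234')                 # nothing runs yet
is_coro = inspect.iscoroutine(coro)  # the call produced a coroutine object
ran_before = list(calls)             # still empty at this point
result = asyncio.run(coro)           # now the body executes
print(is_coro, ran_before, result, calls)
# True [] hello 1234 ['1234']
```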

That's about it for coroutines in Python. In short: while performing time-consuming I/O operations, a coroutine temporarily gives up control so that the program as a whole runs more efficiently.

But here is the problem: we can't implement every such time-consuming operation ourselves each time. Don't panic. Many asynchronous operations have already been implemented. See https://github.com/aio-libs, which lists most of the libraries that already implement asynchronous operations. Of course, the standard `asyncio` library also contains implementations of some simple asynchronous operations.

From now on, when writing time-consuming operations, you can use coroutines. Crawlers are a good example: when a crawler sends a request it has to wait for the response, so issuing n requests at the same time can greatly improve the crawler's efficiency.
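As a rough sketch of the crawler idea, the code below issues n "requests" concurrently. To keep it self-contained, `fetch` only pretends to download: `asyncio.sleep` stands in for the network wait, and the URLs, `fetch`, `crawl` and `max_concurrent` are all hypothetical names. A real crawler would use an asynchronous HTTP client in their place.

```python
import asyncio
import time


async def fetch(url, limit):
    """Pretend to download `url`; asyncio.sleep stands in for network wait."""
    async with limit:              # cap the number of in-flight requests
        await asyncio.sleep(0.1)
        return f'body of {url}'


async def crawl(urls, max_concurrent=5):
    limit = asyncio.Semaphore(max_concurrent)
    # gather() returns the results in the same order as `urls`.
    return await asyncio.gather(*(fetch(u, limit) for u in urls))


urls = [f'https://example.com/page/{i}' for i in range(10)]
start = time.monotonic()
pages = asyncio.run(crawl(urls))
elapsed = time.monotonic() - start
print(len(pages), f'{elapsed:.2f}s')
```

With ten simulated requests of 0.1s each and at most five in flight, the whole batch takes roughly two rounds of waiting rather than ten. The semaphore is a design choice to avoid flooding a server, not something asyncio requires.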