Let's look at how to customize the request object in urllib.

UA (User-Agent) is a special string header that lets the server identify the client's operating system and version, CPU type, browser and version, browser kernel, rendering engine, browser language, browser plug-ins, and so on.

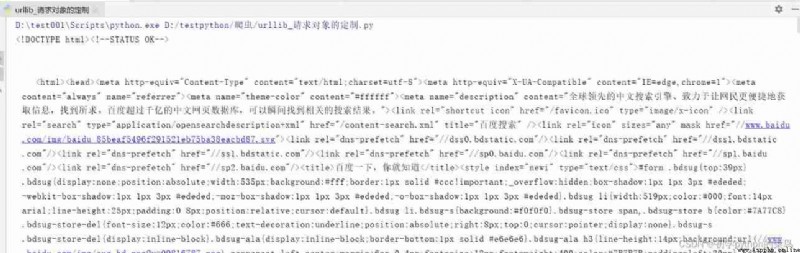

The figure shows where to find the UA in your browser.
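For comparison, here is a minimal sketch of the UA that urllib itself announces when none is set. It inspects the default headers of an opener built with `urllib.request.build_opener()` and makes no network request; the variable names are only illustrative.

```python
import urllib.request

# An opener created with default settings carries urllib's own User-Agent.
opener = urllib.request.build_opener()

# addheaders is a list of (name, value) tuples; turn it into a dict for lookup.
default_headers = dict(opener.addheaders)

# The value has the form "Python-urllib/<major>.<minor>".
print(default_headers["User-agent"])
```

A server can easily tell this value apart from a real browser's UA, which is why a crawler usually replaces it.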

The urlopen() method can issue the most basic request, but if you need to add headers or other information, use the Request class to construct the request.

The syntax is as follows:

urllib.request.Request(url, data=None, headers={})

Note: mind the parameter order. Do not pass url and headers positionally, because data sits between them in the signature; if you do, the headers value is bound to data. Pass headers as a keyword argument instead.
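The pitfall can be seen without sending anything over the network. The sketch below builds two Request objects and inspects them; the shortened UA string and the names `wrong` and `right` are assumptions made for illustration.

```python
import urllib.request

url = "https://www.baidu.com"
headers = {"User-Agent": "Mozilla/5.0"}  # shortened UA, for illustration only

# Positional call: the second positional parameter is data, not headers.
wrong = urllib.request.Request(url, headers)
print(wrong.data)          # the headers dict ended up in data
print(wrong.headers)       # no headers were set
print(wrong.get_method())  # data is not None, so the request would be a POST

# Keyword call: headers goes where it belongs and data stays None.
right = urllib.request.Request(url=url, headers=headers)
print(right.data)
print(right.get_header("User-agent"))  # Request stores the key as "User-agent"
print(right.get_method())
```

Note that the positional call does not raise an error when the object is built, so the mistake is easy to miss until the request is actually sent.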

Let's take an example (reading the source code of the Baidu home page):

import urllib.request

url = 'https://www.baidu.com'

# Components of a URL, e.g. https://www.baidu.com/s?wd=Jackson Yi

# 1. Protocol (http/https) 2. Host (www.baidu.com) 3. Port (80/443) 4. Path (s) 5. Query parameters (wd=Jackson Yi) 6. Anchor

# Common port numbers

# http(80) https(443) mysql(3306) oracle(1521) redis(6379) mongodb(27017)

headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/98.0.4758.102 Safari/537.36'
}

# Pass url and headers as keyword arguments: data sits between them in the signature, so a positional headers value would be bound to data.

request = urllib.request.Request(url=url, headers=headers)

response = urllib.request.urlopen(request)

content = response.read().decode('utf-8')

print(content)  # Without a UA header, only part of the data is returned, because of the site's anti-crawling strategy

Running results:
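As a side note, the URL components listed in the comments of the example can be extracted with the standard library. This is a minimal sketch using `urllib.parse.urlsplit`; the sample URL (with an ASCII query value and a `#result` anchor) and the `DEFAULT_PORTS` table are assumptions made for illustration.

```python
from urllib.parse import urlsplit, parse_qs

# Illustrative URL: protocol, host, path, query parameters and anchor.
sample_url = "https://www.baidu.com/s?wd=python#result"

# Default ports for the two protocols used in this article.
DEFAULT_PORTS = {"http": 80, "https": 443}

parts = urlsplit(sample_url)

# parts.port is None when the URL has no explicit port, so fall back to the default.
port = parts.port or DEFAULT_PORTS[parts.scheme]

print(parts.scheme)           # protocol
print(parts.hostname)         # host
print(port)                   # port number
print(parts.path)             # path
print(parse_qs(parts.query))  # query parameters as a dict of lists
print(parts.fragment)         # anchor
```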

That's all for customizing the request object in a Python crawler, which is how you get past this basic anti-crawling strategy. Follow me: in the next post we will cover some questions about encoding and decoding.
