Remember the all-nighters we used to pull together in Internet cafes? League of Legends was one of the most popular games in college. Even when friends who graduated years ago get together, it's hard not to miss the days we spent playing League of Legends together.

Today I'll share a crawler that downloads the pictures of the heroes and their skins.

First, I went to the official League of Legends website and found the page that lists the heroes and their skin pictures:

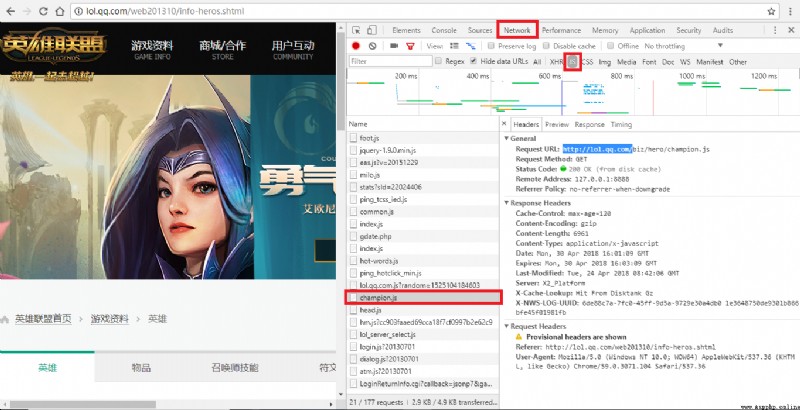

URL: https://lol.qq.com/data/info-heros.shtml

This page lists all the heroes. Pressing F12 to inspect the page shows that the hero and skin pictures are not included directly but are loaded from a JS file. To find it, open the Network tab, switch to the JS filter, and refresh the page. An entry named champion.js appears. Clicking it shows a dictionary containing the (English) names of all heroes and their corresponding numbers (see the picture below).

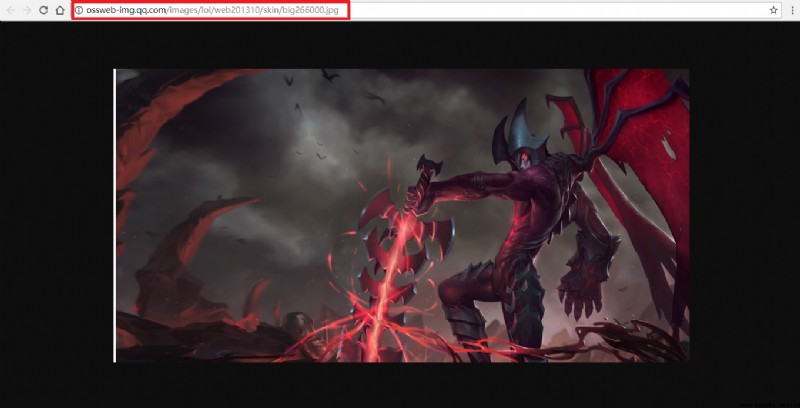
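The dictionary can be pulled out of the JS text with a regular expression and then parsed as JSON. Below is a minimal sketch of that idea. `SAMPLE_JS` is a hypothetical, heavily shortened stand-in for champion.js (the real file is much longer and its layout may have changed). `extract_keys` is an illustrative helper name. The only thing taken from this article is that the hero dictionary sits between `"keys":` and `,"data"`.

```python
import json
import re

# Hypothetical, shortened stand-in for champion.js; only the "keys"/"data"
# layout comes from the article, the rest is made up for illustration.
SAMPLE_JS = (
    'var champion={"keys":{"266":"Aatrox","103":"Ahri","1":"Annie"},'
    '"data":{"Aatrox":{}}};'
)


def extract_keys(js_text):
    """Return the {hero_number: english_name} dictionary stored under "keys"."""
    match = re.search(r'"keys":(\{.*?\}),"data"', js_text)
    if match is None:
        raise ValueError('no "keys" object found')
    # json.loads only parses the text; unlike eval() it never executes it
    return json.loads(match.group(1))


keys = extract_keys(SAMPLE_JS)
print(keys["266"])  # Aatrox
```

Parsing with `json.loads` rather than `eval` means a changed or tampered download fails with an error instead of being run as Python code.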

However, the heroes' English names and numbers alone do not give us the picture addresses. So I went back to the page and clicked on a hero. The hero's page shows the hero and skin pictures, but to download them we need each picture's original address. Right-click a picture and choose "Open image in new tab": the URL of the new tab is the picture's original address (see the picture).

The red box in the picture marks the address we need. Comparing addresses shows that the pictures of every hero and skin differ only in the number at the end, for example http://ossweb-img.qq.com/images/lol/web201310/skin/big266000.jpg. In this 6-digit number, the last 3 digits identify the skin and the digits in front of them identify the hero (266 in this example). Conveniently, the js file already contains every hero's number, and we can generate the skin numbers ourselves: each hero had no more than 20 skins, so combining each hero number with skin numbers 000 to 019 covers every picture.
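The numbering rule can be checked against the example address. The small sketch below builds an address from a hero number and a skin number, and splits a picture code back apart. It treats the last three digits as the skin number and the remaining leading digits as the hero number. The helper names `skin_url` and `split_code` are hypothetical. The base URL is the one shown above, and it is not guaranteed that the server still serves it.

```python
# Base URL taken from the example address in the article.
BASE = "http://ossweb-img.qq.com/images/lol/web201310/skin/big"


def skin_url(hero_id, skin_no):
    """Hero number followed by the skin number zero-padded to 3 digits."""
    return f"{BASE}{hero_id}{skin_no:03d}.jpg"


def split_code(code):
    """Split a picture code such as '266012' into (hero_id, skin_no)."""
    return code[:-3], int(code[-3:])


print(skin_url("266", 0))    # ...skin/big266000.jpg
print(split_code("266012"))  # ('266', 12)
```

Padding with `:03d` is what turns skin 0 into `000` and skin 12 into `012`, which is exactly the suffix format in the example address.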

Once the picture address format is clear, we can start writing the program:

Step 1: get the js dictionary

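```python
import json
import re

import requests


def path_js(url_js):
    # Download champion.js and decode it as GBK, as the original code does
    res_js = requests.get(url_js, verify=False).content
    html_js = res_js.decode("gbk")
    # The hero dictionary sits between "keys": and ,"data"
    pat_js = r'"keys":(.*?),"data"'
    list_js = re.findall(pat_js, html_js)
    # Parse the captured object as JSON instead of running eval() on downloaded text
    dict_js = json.loads(list_js[0])
    return dict_js
```

Step 2: generate the URL list from the dictionary keys (hero numbers)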

```python
def path_url(dict_js):
    pic_list = []
    for key in dict_js:  # key is the hero number, e.g. "266"
        for i in range(20):  # at most 20 skins per hero
            # Skin number zero-padded to 3 digits: 0 -> "000", 12 -> "012"
            num_houxu = f"{i:03d}"
            numStr = key + num_houxu
            url = r'http://ossweb-img.qq.com/images/lol/web201310/skin/big' + numStr + '.jpg'
            pic_list.append(url)
    print(pic_list)
    return pic_list
```

Step 3: generate the file-name list from the dictionary values (hero names)

```python
def name_pic(dict_js, path):
    list_filePath = []
    for name in dict_js.values():  # English hero name, e.g. "Aatrox"
        for i in range(20):  # same 20 skin slots, in the same order as path_url
            file_path = path + name + str(i) + '.jpg'
            list_filePath.append(file_path)
    return list_filePath
```

Step 4: download and save the pictures

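```python
def writing(url_list, list_filePath):
    ok = True
    for url, file_path in zip(url_list, list_filePath):
        try:
            res = requests.get(url, verify=False)
            # requests does not raise on HTTP errors such as 404, so skip any
            # non-200 response (e.g. a skin number that does not exist)
            if res.status_code != 200:
                continue
            with open(file_path, "wb") as f:
                f.write(res.content)
        except Exception as e:
            # Report the failure and carry on with the next picture
            print("Error downloading picture, %s" % e)
            ok = False
    return ok
```

Run the main program: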

if __name__ == '__main__':

url_js = r'http://lol.qq.com/biz/hero/champion.js'

path = r'./data/' # Folder where pictures exist

dict_js = path_js(url_js)

url_list = path_url(dict_js)

list_filePath = name_pic(dict_js, path)

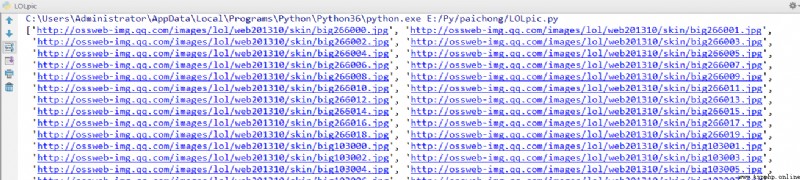

writing(url_list, list_filePath)After running, the website address of each picture will be printed on the console :

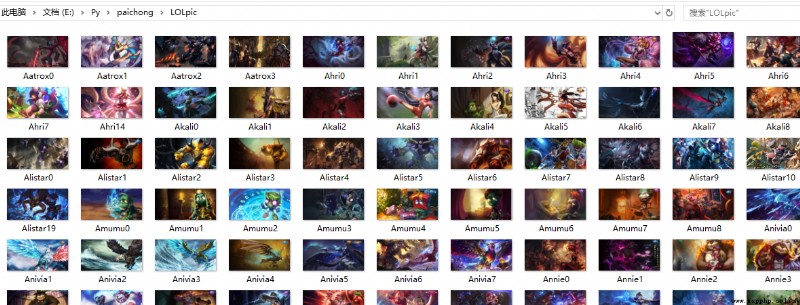
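The program depends on `path_url` and `name_pic` producing their lists in the same order, because `writing` pairs them up by position. A minimal alternative sketch builds each URL and its file path together, so the two can never drift apart. `build_jobs` and `max_skins` are hypothetical names. The base URL and the 20-skin limit are the ones used in the steps above.

```python
def build_jobs(dict_js, path, max_skins=20):
    """Return (url, file_path) pairs built together so they always line up.

    max_skins mirrors the article's assumption of at most 20 skins per hero.
    """
    base = "http://ossweb-img.qq.com/images/lol/web201310/skin/big"
    jobs = []
    for hero_id, name in dict_js.items():
        for i in range(max_skins):
            url = f"{base}{hero_id}{i:03d}.jpg"
            file_path = f"{path}{name}{i}.jpg"
            jobs.append((url, file_path))
    return jobs


jobs = build_jobs({"266": "Aatrox"}, "./data/", max_skins=2)
for url, file_path in jobs:
    print(url, "->", file_path)
```

With this layout, `writing` could loop over the `(url, file_path)` pairs directly instead of indexing two parallel lists.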

In the folder, you can see that the pictures have been downloaded.
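Because the skin numbers are guessed up to 20, it is worth confirming that every file in the folder really is a picture. An error page saved under a .jpg name would look like a successful download but would not open. Every JPEG file begins with the bytes FF D8 FF, so a minimal check could look like the sketch below. The helper names `is_jpeg` and `find_bad_pictures` are hypothetical, and `./data` is the folder used in the main program.

```python
import os


def is_jpeg(file_path):
    """True if the file starts with the JPEG signature FF D8 FF."""
    with open(file_path, "rb") as f:
        return f.read(3) == b"\xff\xd8\xff"


def find_bad_pictures(folder):
    """List the .jpg files in folder whose content is not actually a JPEG."""
    bad = []
    for name in sorted(os.listdir(folder)):
        file_path = os.path.join(folder, name)
        if name.endswith(".jpg") and not is_jpeg(file_path):
            bad.append(file_path)
    return bad


if os.path.isdir("./data"):
    for file_path in find_bad_pictures("./data"):
        print("not a JPEG:", file_path)
```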

That's all I have to share. If you spot any shortcomings, please point them out. I'm happy to discuss. Thank you!
