As artificial intelligence has developed, people have built ever more complex and opaque models to solve challenging problems. AI can behave like a black box: it makes its own decisions, but people do not know why. We build an AI model, feed it data, and get a result, yet we cannot explain why the AI reached that conclusion. We need to understand the reasoning behind an AI's conclusion rather than accept an output that comes without context or explanation.

Interpretability aims to help people understand:

In this article, I will introduce six Python frameworks for interpretability.

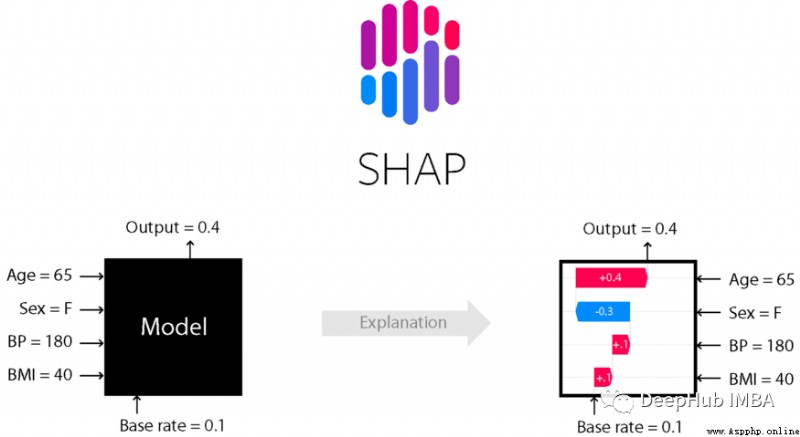

SHAP (SHapley Additive exPlanations) is a game-theoretic approach to explaining the output of any machine learning model. It connects optimal credit allocation with local explanations using the classic Shapley values from game theory and their related extensions (see the paper for details and citations).
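For reference, the classic Shapley value of feature $i$ averages its marginal contribution over every coalition $S$ of the other features. Here $N$ is the set of all features and $v(S)$ is the payoff of coalition $S$ (in SHAP, the model's expected output when only the features in $S$ are known):

$$
\phi_i(v) = \sum_{S \subseteq N \setminus \{i\}} \frac{|S|!\,\bigl(|N| - |S| - 1\bigr)!}{|N|!}\,\Bigl[v\bigl(S \cup \{i\}\bigr) - v(S)\Bigr]
$$

By construction the values add up, $\sum_{i \in N} \phi_i = v(N) - v(\varnothing)$, which is what makes the explanation additive.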

Shapley values explain how much each feature in the dataset contributes to the model's prediction. Lundberg and Lee's SHAP algorithm was first published in 2017 and has since been widely adopted by the community in many different fields.
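To make the idea concrete, here is a minimal pure-Python sketch that computes exact Shapley values for a single prediction by enumerating every coalition. It does not use the shap library. It assumes, as a simplification, that a feature "absent" from a coalition takes a single baseline value, whereas SHAP averages over a background dataset. The function and the toy `model` are hypothetical and for illustration only.

```python
from __future__ import annotations

from itertools import combinations
from math import factorial
from typing import Callable, Sequence


def shapley_values(
    model: Callable[[Sequence[float]], float],
    x: Sequence[float],
    baseline: Sequence[float],
) -> list[float]:
    """Exact Shapley values for one prediction by enumerating all feature coalitions.

    Assumption: a feature absent from a coalition takes its baseline value.
    """
    n = len(x)

    def value(coalition: frozenset[int]) -> float:
        # Keep the features in the coalition, fall back to the baseline elsewhere.
        z = [x[j] if j in coalition else baseline[j] for j in range(n)]
        return model(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):  # sizes 0 .. n-1 of coalitions that exclude feature i
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = frozenset(subset)
                phi[i] += weight * (value(s | {i}) - value(s))  # marginal contribution
    return phi


def model(z: Sequence[float]) -> float:
    # Toy model: linear terms plus an interaction between features 0 and 1.
    return 2.0 * z[0] + 3.0 * z[1] + z[0] * z[1] - z[2]


x = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(model, x, baseline)
print([round(p, 6) for p in phi])  # → [3.0, 7.0, -3.0]
```

The interaction term contributes 2 at this point and is split evenly between features 0 and 1, and the three values sum to f(x) - f(baseline) = 7. Because the number of coalitions grows as 2^n, exact enumeration is only practical for a handful of features, which is why SHAP relies on approximations such as KernelSHAP and on model-specific algorithms such as TreeSHAP.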

Install the shap library with pip or conda:

```bash
# install with pip
pip install shap

# install with conda
conda install -c conda-forge shap
```

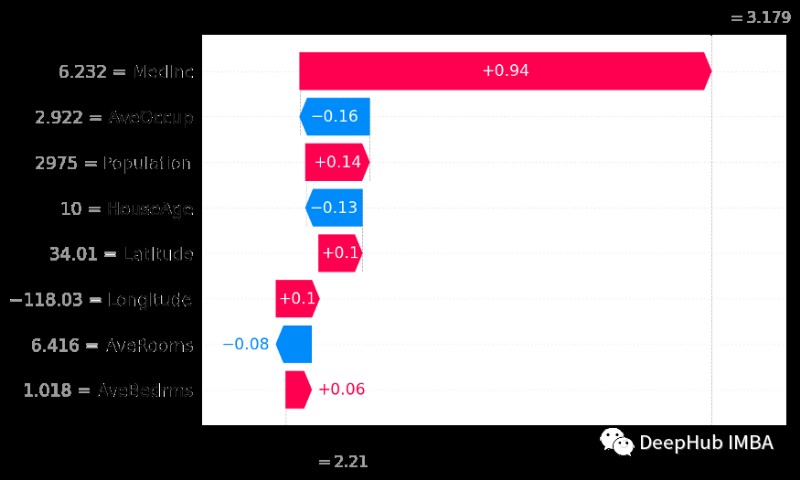

Building a waterfall plot with the shap library

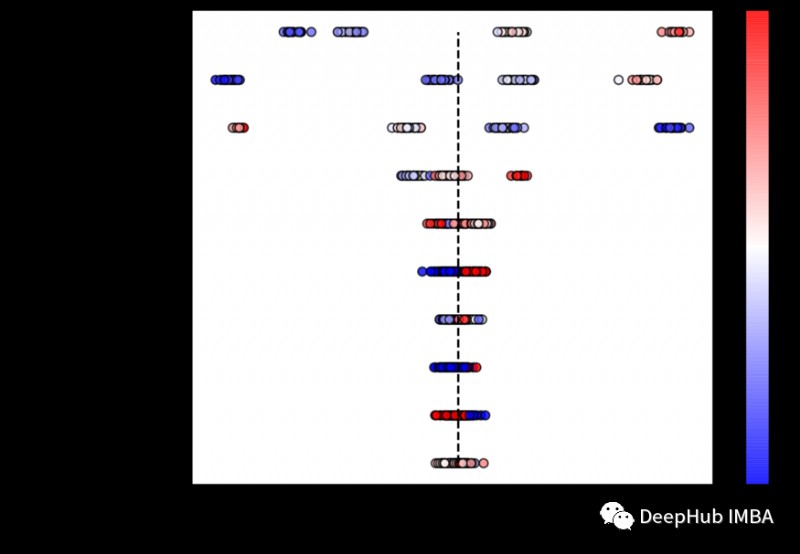

Building a beeswarm plot with the shap library

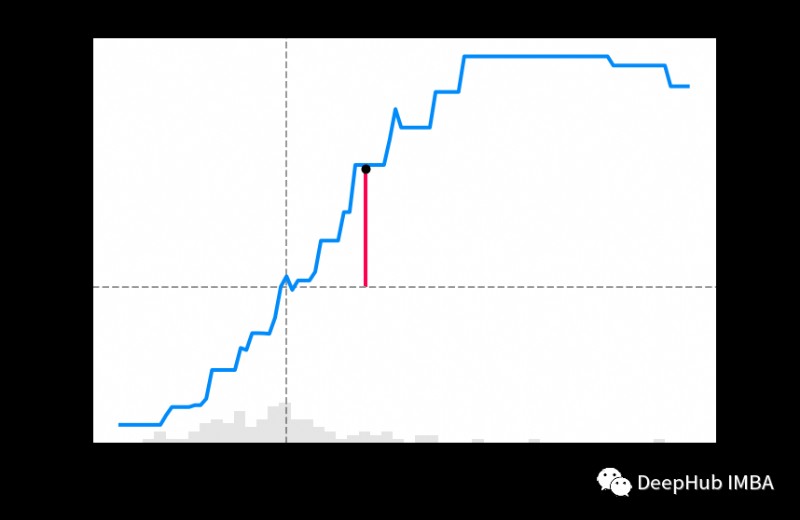

Building a partial dependence plot with the shap library
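A partial dependence plot shows the model's average prediction as one feature is swept across a grid while the other features keep their observed values. The sketch below is a plain-Python illustration of that computation, not the shap library's implementation; the toy `model` and `data` are hypothetical.

```python
from statistics import mean
from typing import Callable, List, Sequence


def partial_dependence(
    model: Callable[[Sequence[float]], float],
    data: Sequence[Sequence[float]],
    feature: int,
    grid: Sequence[float],
) -> List[float]:
    """Average prediction over `data` with column `feature` forced to each grid value."""
    curve = []
    for value in grid:
        preds = []
        for row in data:
            z = list(row)
            z[feature] = value  # overwrite only the feature of interest
            preds.append(model(z))
        curve.append(mean(preds))  # marginalize over the remaining features
    return curve


def model(z: Sequence[float]) -> float:
    # Toy model with an interaction between the two features.
    return 2.0 * z[0] + z[0] * z[1]


data = [[0.0, 1.0], [1.0, 3.0], [2.0, 5.0]]
print(partial_dependence(model, data, feature=0, grid=[0.0, 1.0, 2.0]))  # → [0.0, 5.0, 10.0]
```

Since the average of the second feature in `data` is 3, the curve for feature 0 is 2v + 3v = 5v. Averaging like this can hide interactions, which is one reason plotting libraries often overlay per-row curves as well.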

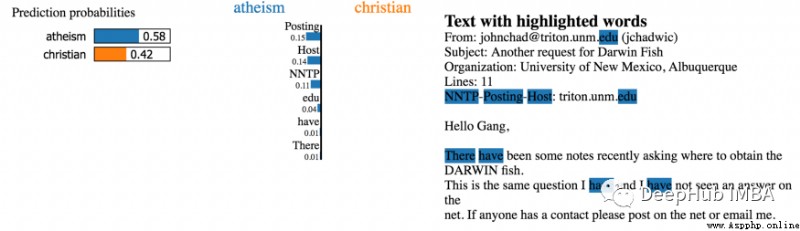

In the field of interpretability, one of the earliest well-known methods is LIME. It helps explain what a machine learning model has learned and why it makes a particular prediction. LIME currently supports explanations for tabular data, text classifiers, and image classifiers.

Knowing why a model makes the predictions it does is important for tuning the algorithm. LIME's explanations let you understand why the model behaves the way it does. If the model does not behave as planned, it is quite likely that a mistake was made during the data preparation phase.
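The core LIME idea, fitting a simple weighted linear model to perturbed samples around one input, can be sketched in plain Python. This is a simplified illustration under stated assumptions, not the lime package: it perturbs with Gaussian noise directly around `x`, weights samples with an exponential kernel whose width is an arbitrary choice here, and skips the discretization, standardization, and feature selection that the real library performs. All names are hypothetical.

```python
from __future__ import annotations

import math
import random
from typing import Callable, Sequence


def solve(a: list[list[float]], b: list[float]) -> list[float]:
    """Solve a @ w = b with Gaussian elimination and partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    w = [0.0] * n
    for r in range(n - 1, -1, -1):
        w[r] = (m[r][n] - sum(m[r][c] * w[c] for c in range(r + 1, n))) / m[r][r]
    return w


def local_surrogate(
    model: Callable[[Sequence[float]], float],
    x: Sequence[float],
    num_samples: int = 500,
    noise: float = 0.1,
    kernel_width: float = 0.25,
    seed: int = 0,
) -> tuple[float, list[float]]:
    """LIME-style sketch: fit a weighted linear model to perturbations around x.

    Returns (intercept, coefficients); the coefficients are the local feature effects.
    """
    rng = random.Random(seed)
    d = len(x)
    xtwx = [[0.0] * (d + 1) for _ in range(d + 1)]  # accumulates X^T W X
    xtwy = [0.0] * (d + 1)                          # accumulates X^T W y
    for _ in range(num_samples):
        z = [xi + rng.gauss(0.0, noise) for xi in x]   # perturbed sample near x
        offsets = [zi - xi for zi, xi in zip(z, x)]
        dist2 = sum(o * o for o in offsets)
        weight = math.exp(-dist2 / kernel_width ** 2)  # closer samples count more
        feats = [1.0] + offsets                        # leading 1.0 is the intercept column
        y = model(z)
        for i in range(d + 1):
            xtwy[i] += weight * feats[i] * y
            for j in range(d + 1):
                xtwx[i][j] += weight * feats[i] * feats[j]
    beta = solve(xtwx, xtwy)  # weighted least squares via the normal equations
    return beta[0], beta[1:]


# Usage: for a model that is already linear, the surrogate recovers its coefficients.
intercept, coefs = local_surrogate(lambda z: 3.0 + 2.0 * z[0] - z[1], [1.0, 2.0])
print(round(intercept, 6), [round(c, 6) for c in coefs])  # → 3.0 [2.0, -1.0]
```

Regressing on offsets from `x` rather than on raw values keeps the normal equations well conditioned and makes the intercept the surrogate's prediction at `x` itself. For a nonlinear model such as z[0] ** 2 around x = [1.0], the fitted coefficient comes out close to the local slope of 2.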

Install with pip:

```bash
pip install lime
```

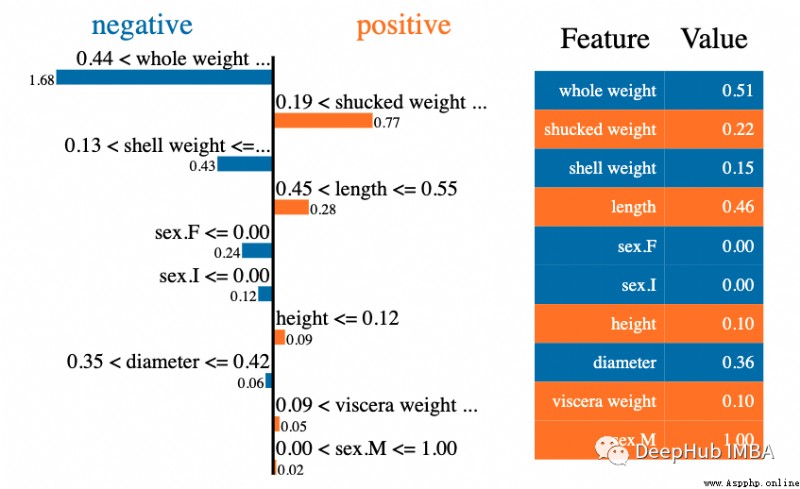

Local explanation plot built with LIME

Beeswarm plot built with LIME

“Shapash is a Python library that aims to make machine learning interpretable and understandable by everyone. Shapash provides several types of visualizations that display explicit labels everyone can understand. Data scientists can more easily understand their models and share their results. End users can understand how a model reached its decision through a summary of the most influential criteria.”

Interactivity and attractive charts are essential for telling stories, sharing insights, and presenting model findings with data. The best way for business users and data scientists/analysts to present and interact with AI/ML results is to visualize them and put them on the web. The Shapash library can generate interactive dashboards and bundles many visualizations related to SHAP/LIME explanations. It can use SHAP/LIME as its backend; in other words, it provides better-looking charts on top of them.

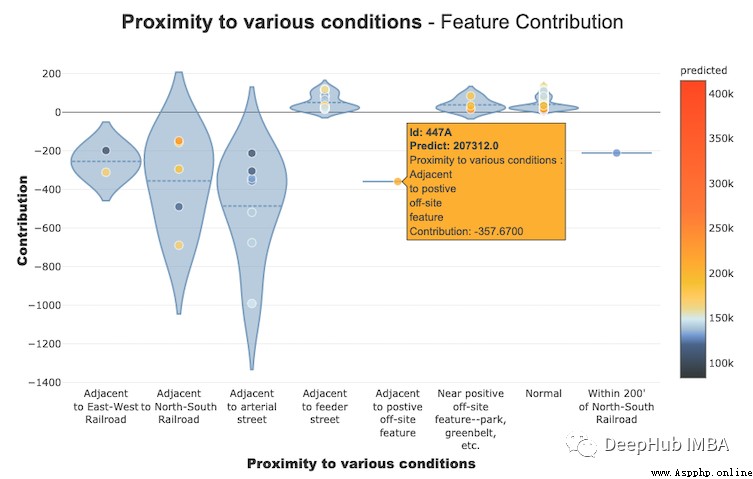

Building a feature contribution plot with Shapash

Creating an interactive dashboard with the Shapash library

Local explanation plot built with Shapash

InterpretML is an open-source Python package that gives researchers machine learning interpretability algorithms. InterpretML supports training interpretable models (glassbox) and explaining existing ML pipelines (blackbox).

InterpretML demonstrates two types of interpretability: glassbox models, which are machine learning models designed for interpretability (e.g., linear models, rule lists, generalized additive models), and blackbox explainability techniques for explaining existing systems (e.g., partial dependence, LIME). By wrapping a variety of methods behind a unified API and offering a built-in, extensible visualization platform, the package lets researchers easily compare interpretability algorithms. InterpretML also includes the first implementation of the Explainable Boosting Machine (EBM), a powerful, interpretable glassbox model that can be as accurate as many blackbox models.
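Why a glassbox additive model is interpretable can be shown with a heavily simplified, hypothetical sketch in the spirit of an EBM. This is not InterpretML's code: the real EBM boosts shallow trees on one feature at a time with many refinements (bagging, automatic binning, pairwise interactions). Here each feature gets a piecewise-constant shape function over hand-chosen bins, and training cycles through the features, nudging each shape toward the current residuals. The prediction is exactly the intercept plus one term per feature, so those terms are the local explanation.

```python
from __future__ import annotations

import bisect
from statistics import mean
from typing import Sequence


class TinyAdditiveBooster:
    """Hypothetical, simplified EBM-style glassbox model.

    Each feature has its own piecewise-constant shape function (one value per bin),
    trained by cycling through the features and moving each shape toward the residuals.
    """

    def __init__(self, edges: list[list[float]], learning_rate: float = 0.5, rounds: int = 50):
        self.edges = edges  # per-feature bin edges, chosen by hand for this sketch
        self.lr = learning_rate
        self.rounds = rounds

    def _bins(self, row: Sequence[float]) -> list[int]:
        return [bisect.bisect_right(e, v) for e, v in zip(self.edges, row)]

    def _predict_bins(self, bins: Sequence[int]) -> float:
        return self.intercept + sum(shape[k] for shape, k in zip(self.shapes, bins))

    def fit(self, X: Sequence[Sequence[float]], y: Sequence[float]) -> "TinyAdditiveBooster":
        self.intercept = mean(y)
        self.shapes = [[0.0] * (len(e) + 1) for e in self.edges]
        binned = [self._bins(row) for row in X]
        for _ in range(self.rounds):
            for j, shape in enumerate(self.shapes):  # round-robin over features
                sums = [0.0] * len(shape)
                counts = [0] * len(shape)
                for target, bins in zip(y, binned):
                    sums[bins[j]] += target - self._predict_bins(bins)  # residual
                    counts[bins[j]] += 1
                for k in range(len(shape)):
                    if counts[k]:
                        shape[k] += self.lr * sums[k] / counts[k]  # damped update
        return self

    def predict(self, row: Sequence[float]) -> float:
        return self._predict_bins(self._bins(row))

    def explain(self, row: Sequence[float]) -> list[float]:
        """Per-feature contributions; intercept + sum(contributions) == prediction."""
        return [shape[k] for shape, k in zip(self.shapes, self._bins(row))]


# Usage on toy data whose target really is additive in the two features.
X = [[a, b] for a in (0.0, 1.0, 2.0) for b in (0.0, 1.0)]
y = [[0.0, 1.0, 4.0][int(a)] + [0.0, 10.0][int(b)] for a, b in X]
ebm = TinyAdditiveBooster(edges=[[0.5, 1.5], [0.5]]).fit(X, y)
row = [2.0, 1.0]
print(ebm.predict(row), ebm.explain(row))
```

Unlike a post-hoc explainer, nothing is approximated when explaining: each shape function can be plotted directly as a global explanation, and reading off one value per feature gives the local one.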

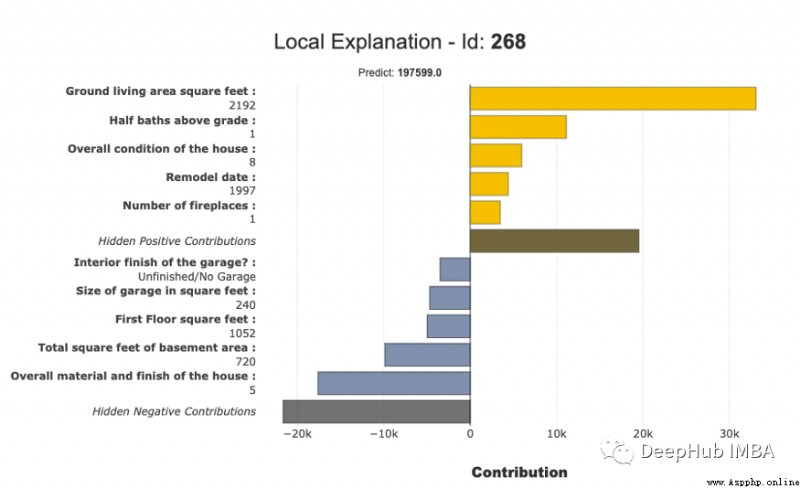

Building an interactive local explanation plot with InterpretML

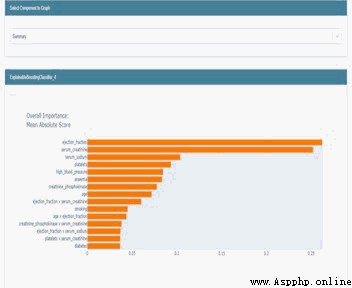

Global explanation plot built with InterpretML

ELI5 is a Python library that helps debug machine learning classifiers and explain their predictions. The following machine learning frameworks are currently supported:

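ELI5 offers two main ways to interpret a classification or regression model:

- inspect the model parameters to understand how the model works globally;
- inspect an individual prediction to understand why the model made that particular decision.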

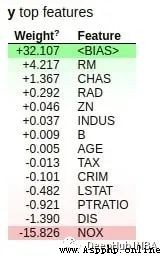

Generating global weights with the ELI5 library

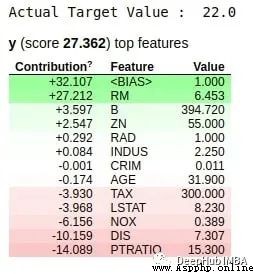

Generating local weights with the ELI5 library
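For a plain linear model, the difference between ELI5's global weights and local weights can be illustrated without the library (which exposes these views through functions such as `eli5.show_weights` and `eli5.show_prediction`). The sketch below assumes hypothetical, already-fitted coefficients: the global view ranks features by their learned weight, and the local view multiplies each weight by this row's feature value, so the contributions plus the bias add up to the prediction.

```python
from typing import Dict, List, Tuple

# Hypothetical coefficients of an already-fitted linear model (illustrative numbers only).
WEIGHTS: Dict[str, float] = {"age": 0.8, "income": 1.5, "debt": -2.0}
BIAS = 0.3


def global_weights(weights: Dict[str, float]) -> List[Tuple[str, float]]:
    """Global view: features ranked by the magnitude of their learned weight."""
    return sorted(weights.items(), key=lambda kv: abs(kv[1]), reverse=True)


def local_weights(weights: Dict[str, float], bias: float, row: Dict[str, float]) -> Dict[str, float]:
    """Local view: how much each feature pushed this one prediction (weight * value)."""
    contributions = {name: w * row[name] for name, w in weights.items()}
    contributions["<BIAS>"] = bias  # intercept term, reported alongside the features
    return contributions


row = {"age": 1.0, "income": 2.0, "debt": 0.5}
print(global_weights(WEIGHTS))  # → [('debt', -2.0), ('income', 1.5), ('age', 0.8)]
contribs = local_weights(WEIGHTS, BIAS, row)
print(contribs)  # → {'age': 0.8, 'income': 3.0, 'debt': -1.0, '<BIAS>': 0.3}
print(sum(contribs.values()))  # the model's prediction for this row
```

Note how the two views can disagree: `debt` has the largest global weight, but for this row `income` contributes the most because its value is larger. For nonlinear models the local contributions need a dedicated method (for example tree-path attribution or LIME) rather than simple multiplication.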

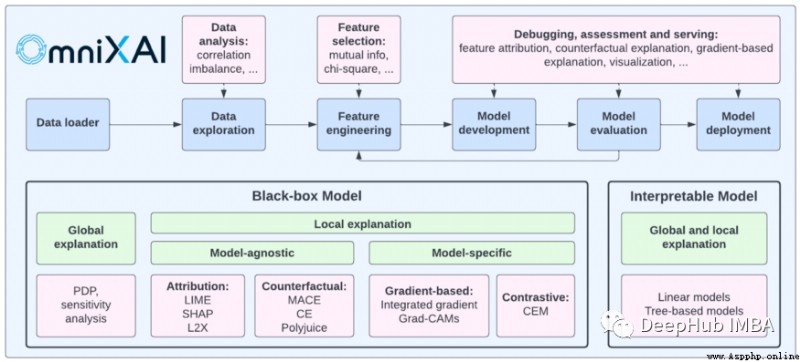

OmniXAI (short for Omni eXplainable AI) is a Python library recently developed and open-sourced by Salesforce. It provides comprehensive explainable AI and interpretable machine learning capabilities to address several pain points in interpreting the decisions of machine learning models in practice. For data scientists and ML researchers who need to explain various types of data, models, and explanation techniques at different stages of the ML process, OmniXAI aims to be a one-stop comprehensive library that makes explainable AI easy.

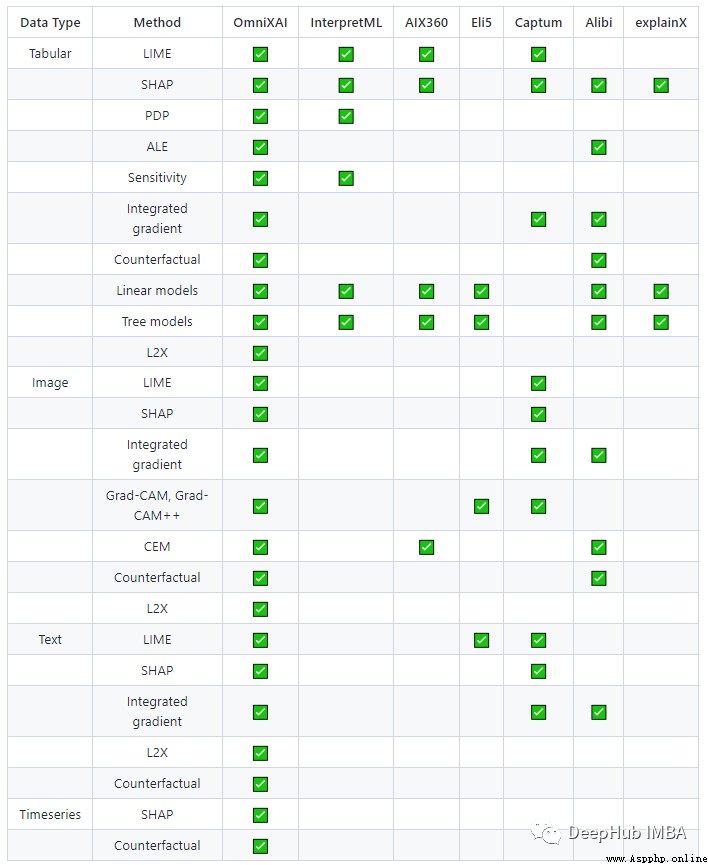

Comparison with other similar libraries, as provided by OmniXAI

https://avoid.overfit.cn/post/1d08a70ed36a41a481d9f2a66b01971a

Author: Moez Ali