Install Scrapy and its related components (the -i option points pip at the Tsinghua PyPI mirror):

pip install scrapy -i https://pypi.tuna.tsinghua.edu.cn/simple

On Windows, if you see the error ModuleNotFoundError: No module named 'win32api', install the missing package with the following command:

pip install pypiwin32
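To confirm the installation before going further, a short check along these lines may help. It is only a sketch: the helper name `is_installed` is made up for illustration, and it only tests whether Python can locate each module, not whether the module works.

```python
import importlib.util


def is_installed(module_name: str) -> bool:
    """Return True if Python can locate the top-level module (without importing it)."""
    return importlib.util.find_spec(module_name) is not None


# "win32api" only matters on Windows; on other systems it is expected to be missing.
for name in ("scrapy", "win32api"):
    status = "found" if is_installed(name) else "missing"
    print(f"{name}: {status}")
```

If scrapy is reported as missing, rerun the pip command above in the same virtual environment.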

The project must be created from the command line, because PyCharm cannot create a Scrapy project by default:

scrapy startproject [project name]

For example:

(yunweijia) PS C:\Users\22768\yunweijia\Scripts\scrapy> scrapy startproject mode_1

New Scrapy project 'mode_1', using template directory 'C:\Users\22768\yunweijia\lib\site-packages\scrapy\templates\project', created in:

C:\Users\22768\yunweijia\Scripts\scrapy\mode_1

You can start your first spider with:

cd mode_1

scrapy genspider example example.com

(yunweijia) PS C:\Users\22768\yunweijia\Scripts\scrapy>
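Scrapy rejects project names that cannot serve as a Python package name. The pre-check below is a rough sketch under the assumption that the rule is "a letter or underscore first, then only letters, digits, and underscores", which mirrors what recent Scrapy versions appear to check. The function name is hypothetical.

```python
import re

# Assumed rule: first character is a letter or underscore,
# the rest are word characters (letters, digits, underscores).
_PROJECT_NAME_RE = re.compile(r"^[_a-zA-Z]\w*$")


def looks_like_valid_project_name(name: str) -> bool:
    """Return True if the name matches the assumed project-name pattern."""
    return _PROJECT_NAME_RE.search(name) is not None


for candidate in ("mode_1", "1mode", "my-project"):
    print(candidate, looks_like_valid_project_name(candidate))
```

Under this assumption a name such as mode_1 passes, while names that start with a digit or contain a hyphen do not.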

Next, create a spider inside the project. The command is:

scrapy genspider [spider name] [domain name]

For example:

(yunweijia) PS C:\Users\22768\yunweijia\Scripts\scrapy\mode_1> scrapy genspider example example.com

Created spider 'example' using template 'basic' in module:

mode_1.spiders.example

(yunweijia) PS C:\Users\22768\yunweijia\Scripts\scrapy\mode_1>

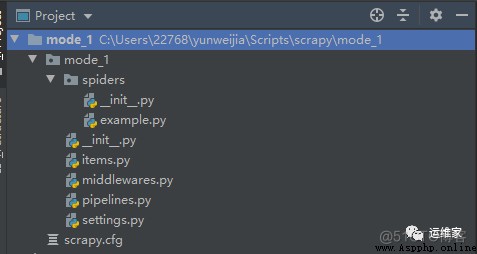

Once the spider has been created, you can see which files were generated. Let's open the project in PyCharm and take a look.

The files in a Scrapy project serve the following purposes:

items.py: defines the models that hold the data scraped by the spiders.

middlewares.py: holds the project's various middleware.

pipelines.py: processes the item models, for example by saving them to local disk.

settings.py: project settings, such as request headers and proxy addresses.

scrapy.cfg: the configuration file for the project.

spiders: the directory that contains all of the project's spiders. The example spider we just created is in this directory.
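The layout described above can be checked with a small script. This is a minimal sketch, assuming the default project template and the project name mode_1 from the earlier example. The function names are hypothetical.

```python
from pathlib import Path


def expected_layout(project: str) -> list[str]:
    """Paths the default template is expected to create, relative to the project root."""
    return [
        "scrapy.cfg",
        f"{project}/items.py",
        f"{project}/middlewares.py",
        f"{project}/pipelines.py",
        f"{project}/settings.py",
        f"{project}/spiders",
    ]


def missing_entries(root: Path, project: str) -> list[str]:
    """Return the expected paths that do not exist under root."""
    return [rel for rel in expected_layout(project) if not (root / rel).exists()]


if __name__ == "__main__":
    # Run from the project root, i.e. the folder that contains scrapy.cfg.
    missing = missing_entries(Path.cwd(), "mode_1")
    print("missing:", missing if missing else "nothing")
```

Note that items.py, middlewares.py, pipelines.py, settings.py, and the spiders directory sit inside the inner mode_1 package, while scrapy.cfg sits one level above it.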

------ “ Operation and maintenance home ” ------

